
Any software that can read nvidia core voltage, Pascal and onwards?

- Thread starter :D:D

- Joined

- Feb 20, 2020

- Messages

- 9,340 (4.83/day)

- Location

- Louisiana

| System Name | Ghetto Rigs z490|x99|Acer 17 Nitro 7840hs/ 5600c40-2x16/ 4060/ 1tb acer stock m.2/ 4tb sn850x |

|---|---|

| Processor | 10900k w/Optimus Foundation | 5930k w/Black Noctua D15 |

| Motherboard | z490 Maximus XII Apex | x99 Sabertooth |

| Cooling | oCool D5 res-combo/280 GTX/ Optimus Foundation/ gpu water block | Blk D15 |

| Memory | Trident-Z Royal 4000c16 2x16gb | Trident-Z 3200c14 4x8gb |

| Video Card(s) | Titan Xp-water | evga 980ti gaming-w/ air |

| Storage | 970evo+500gb & sn850x 4tb | 860 pro 256gb | Acer m.2 1tb/ sn850x 4tb| Many2.5" sata's ssd 3.5hdd's |

| Display(s) | 1-AOC G2460PG 24"G-Sync 144Hz/ 2nd 1-ASUS VG248QE 24"/ 3rd LG 43" series |

| Case | D450 | Cherry Entertainment center on Test bench |

| Audio Device(s) | Built in Realtek x2 with 2-Insignia 2.0 sound bars & 1-LG sound bar |

| Power Supply | EVGA 1000P2 with APC AX1500 | 850P2 with CyberPower-GX1325U |

| Mouse | Redragon 901 Perdition x3 |

| Keyboard | G710+x3 |

| Software | Win-7 pro x3 and win-10 & 11pro x3 |

| Benchmark Scores | Are in the benchmark section |

Hi,

- GPU-Z (TechPowerUp GPU-Z v2.66.0 Download): a lightweight utility designed to give you all information about your video card and GPU.
- HWiNFO64 (HWiNFO - Download)
- SIV64x

- Joined

- Aug 27, 2023

- Messages

- 302 (0.47/day)

Thanks, but those do not measure the actual voltage for me. For instance, my 1050 Ti is HW modded to give an extra ~20% voltage, so when 1.0000 V is requested the rail is nearer 1.2 V, but software still shows 1.0000 V. I thought HWiNFO might have had something, but no. I can check with a meter, and have, but would prefer a SW method if possible.

- Joined

- Feb 20, 2020

- Messages

- 9,340 (4.83/day)

- Location

- Louisiana

Hi,

Modded how? Shunt mod?

If so, you'd have to use a voltmeter, because the mod fakes out the vBIOS.

- Joined

- Jun 3, 2008

- Messages

- 957 (0.15/day)

- Location

- Pacific Coast

| System Name | Z77 Rev. 1 |

|---|---|

| Processor | Intel Core i7 3770K |

| Motherboard | ASRock Z77 Extreme4 |

| Cooling | Water Cooling |

| Memory | 2x G.Skill F3-2400C10D-16GTX |

| Video Card(s) | EVGA GTX 1080 |

| Storage | Samsung 850 Pro |

| Display(s) | Samsung 28" UE590 UHD |

| Case | Silverstone TJ07 |

| Audio Device(s) | Onboard |

| Power Supply | Seasonic PRIME 600W Titanium |

| Mouse | EVGA TORQ X10 |

| Keyboard | Leopold Tenkeyless |

| Software | Windows 10 Pro 64-bit |

| Benchmark Scores | 3DMark Time Spy: 7695 |

Link the HW mod you used.

Often a HW mod works by altering the feedback voltage: some of it is dumped to ground so that the regulator compensates by raising its output to offset what was dumped. In that case it is obvious why no software can give an accurate voltage reading, since you are purposely feeding a false reading to the very place responsible for taking readings.
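As a rough illustration of the feedback trick described above, here is a minimal sketch. It assumes a simple model in which a series resistor sits in the sense line and the mod adds a resistor from the feedback node to ground; the function name and the resistor values are hypothetical and not taken from any real card.

```python
def true_output_voltage(target_v: float, r_series_ohm: float, r_mod_ohm: float) -> float:
    """Return the rail voltage needed for the divided-down feedback to equal target_v.

    Assumed model: the controller only sees the fraction
    r_mod / (r_series + r_mod) of the real rail, and keeps raising the
    output until that fraction matches the requested target.
    """
    seen_fraction = r_mod_ohm / (r_series_ohm + r_mod_ohm)
    return target_v / seen_fraction


# Hypothetical values, chosen only so that the result is a ~20% increase.
requested = 1.000
actual = true_output_voltage(requested, r_series_ohm=100.0, r_mod_ohm=500.0)
print(f"software still reports {requested:.4f} V, rail sits near {actual:.4f} V")
```

In this model the controller believes it is delivering exactly what was requested, which is why a reading taken from the controller's point of view cannot show the extra voltage.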

- Joined

- Feb 20, 2020

- Messages

- 9,340 (4.83/day)

- Location

- Louisiana

Hi,

Yeah, the main GPU power reading might show the increase, but you'd have had to know the original amount before the mod.

- Joined

- Aug 27, 2023

- Messages

- 302 (0.47/day)

@ty_ger I see what you're saying: no output voltage, only FB voltage. Now I'm wondering if no voltage is measured at all and it just goes by its own reference. Sorry, no link; it's just something I made myself using one resistor, with no dumping to ground, though it does of course adjust FB. I could look for a pic, or try to find the datasheet and draw it in (it was a long time ago), if you want to see?

That still leaves a problem with my 1660 Super, which only shows up to the maximum default of 1.068 V or 1.093 V, but I have gone higher than that without using a HW mod. I think there was something back in the Pascal days, maybe from EVGA or someone else, that read voltage from the VRM, or maybe I'm imagining things, as my memory is not so good.

- Joined

- Aug 27, 2023

- Messages

- 302 (0.47/day)

Tried some other SW, such as ASUS GPU Tweak III and MSI Afterburner with I2C commands, but no joy.

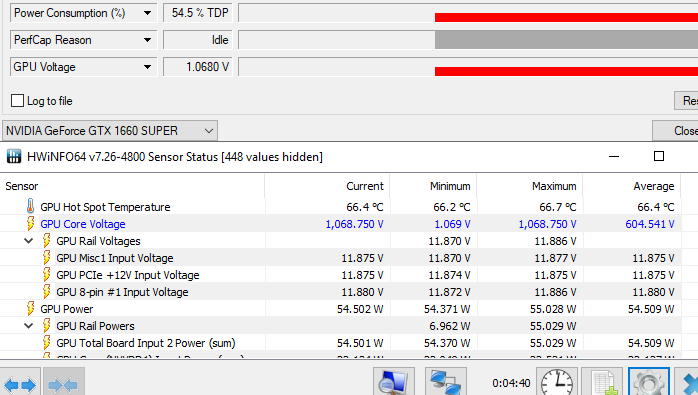

Found a datasheet for the VRM on the 1660 Super, and it does report FB voltage as VOUT, as per @ty_ger's post. It has a resolution of 10 mV with a range of 0 to 2.55 V, while the reported nvidia voltage appears to use a resolution of 10 µV, so it is not measuring actual voltage. At maximum default voltage nvidia reports 1.06875 V.

With HWiNFO customized to multiply GPU core voltage by 1000 we get

Small bug with the minimum, which shows rounded up to 1.069 V; maybe it was not updated after the custom value was set. There seems to be a small bug with GPU-Z too, which shows 1.0680 V.
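The resolution argument can be checked with a little integer arithmetic. The sketch below works in microvolts to avoid floating-point rounding. The 8-bit code width is an assumption inferred from 255 × 10 mV = 2.55 V, and the two display helpers are only one hypothetical explanation of how 1.069 V and 1.0680 V could both come from the same value.

```python
VRM_LSB_UV = 10_000    # 10 mV per step, the datasheet resolution quoted above
VRM_MAX_CODE = 255     # assumption: 8-bit code, since 255 * 10 mV = 2.55 V
NVIDIA_MAX_UV = 1_068_750  # the reported 1.06875 V, in microvolts


def vrm_code_to_uv(code: int) -> int:
    """Convert a raw VRM VOUT code to microvolts under the assumed 8-bit format."""
    if not 0 <= code <= VRM_MAX_CODE:
        raise ValueError("code outside the assumed 8-bit range")
    return code * VRM_LSB_UV


def on_grid(value_uv: int, step_uv: int) -> bool:
    """True if value_uv is an exact multiple of step_uv."""
    return value_uv % step_uv == 0


def truncate_to_mv(value_uv: int) -> int:
    """Drop everything below 1 mV (hypothetical display behaviour)."""
    return value_uv // 1000


def round_to_mv(value_uv: int) -> int:
    """Round half up to the nearest mV (hypothetical display behaviour)."""
    return (value_uv + 500) // 1000


print(vrm_code_to_uv(VRM_MAX_CODE) / 1e6, "V full scale")
print("fits the 10 mV VRM grid:", on_grid(NVIDIA_MAX_UV, VRM_LSB_UV))
print("multiple of 6.25 mV:", on_grid(NVIDIA_MAX_UV, 6_250))
print(f"truncated: {truncate_to_mv(NVIDIA_MAX_UV) / 1000:.4f} V")
print(f"rounded:   {round_to_mv(NVIDIA_MAX_UV) / 1000:.3f} V")
```

Since 1.06875 V does not land on a 10 mV step, it cannot have come straight from a VRM readback with that resolution, which supports the point that the reported figure is a requested value and not a measurement. That it is an exact multiple of 6.25 mV is only an arithmetic observation here, not a statement about how the driver quantizes voltage.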

Martin of HWiNFO reports that nvidia has likely locked down reading the VRM.

Was hoping to get a secondhand 3000-series GPU for further testing without having to make electrical contact with rail / sense.

- Joined

- Aug 27, 2023

- Messages

- 302 (0.47/day)

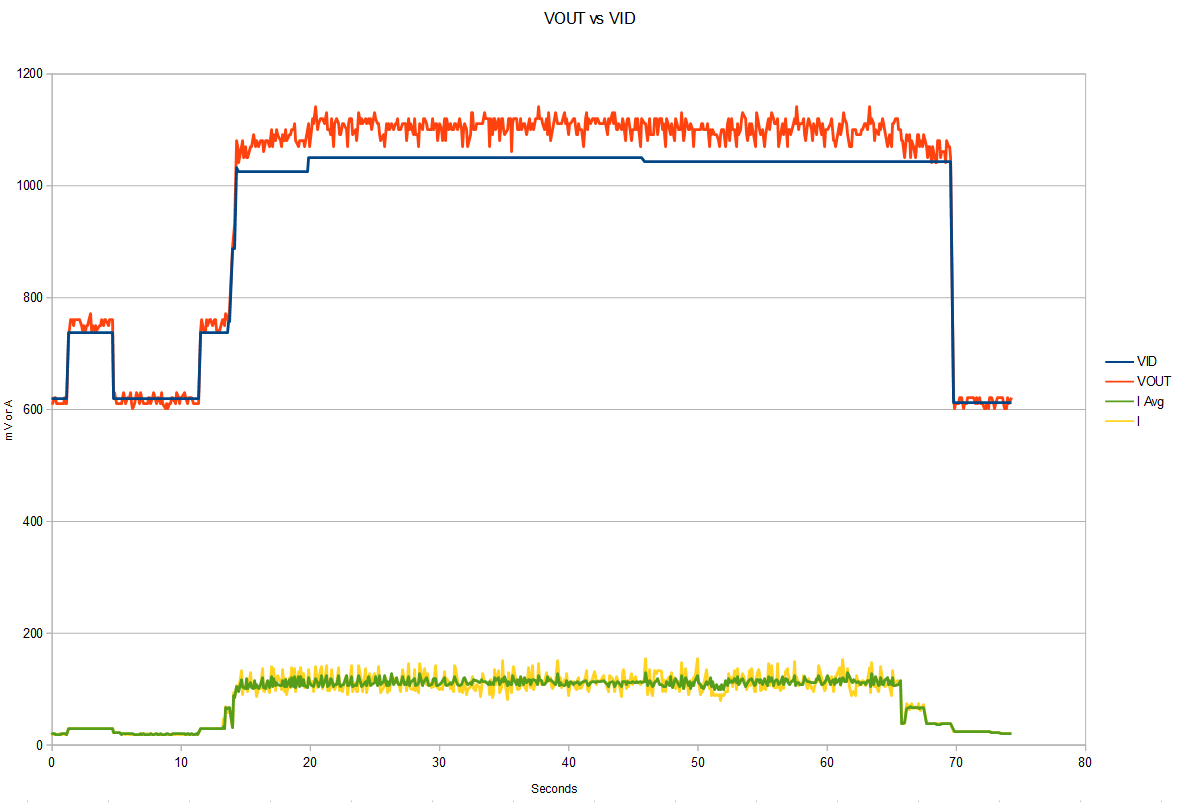

Finally managed to develop some comms software for the 1660 and can now read VOUT. It seems more likely to be reporting VOUT than VSense; will have to confirm. Still, this is nice. I now need to look for a secondhand 30/40-series card with similar comms. Did see a 3060m going relatively cheap but am a little concerned about the unknown build. The 1050 Ti is a lost cause with its, ermm, cost-effective VRM, so no comms / data to be had.

A little 1660 Super Heaven run at 150 W limit and 100% fan, otherwise all stock. Average current at default of 50 µs.

- Joined

- Nov 7, 2017

- Messages

- 2,230 (0.81/day)

- Location

- Ibiza, Spain.

| System Name | Main |

|---|---|

| Processor | R7 5950x |

| Motherboard | MSI x570S Unify-X Max |

| Cooling | converted Eisbär 280, two F14 + three F12S intake, two P14S + two P14 + two F14 as exhaust |

| Memory | 16 GB Corsair LPX bdie @3600/16 1.35v |

| Video Card(s) | GB 2080S WaterForce WB |

| Storage | six M.2 pcie gen 4 |

| Display(s) | Sony 50X90J |

| Case | Tt Level 20 HT |

| Audio Device(s) | Asus Xonar AE, modded Sennheiser HD 558, Klipsch 2.1 THX |

| Power Supply | Corsair RMx 750w |

| Mouse | Logitech G903 |

| Keyboard | GSKILL Ripjaws |

| VR HMD | NA |

| Software | win 10 pro x64 |

| Benchmark Scores | TimeSpy score Fire Strike Ultra SuperPosition CB20 |

understand the want to get numbers, but unless getting to max gpu load, why increase vcore for it?

ignoring that those chips get binned: the not so good ones ending up in a lower tier will need max just to do a little better, and those going into oced units won't have much "headroom" before other limiters kick in.

or is it just for "measuring" (as in diagnostics) not related to tweaking?

just curious.

- Joined

- Aug 27, 2023

- Messages

- 302 (0.47/day)

@Waldorf For monitoring.

There's a bug I'm looking into that removes limits, see https://www.techpowerup.com/forums/threads/throttling-gtx-1660-super-oc-uv.320586/post-5223303

As @ThrashZone said, an increase in power output can be a telltale for higher voltage, and while I see this, the core voltage (VID) is never reported above the card's max. I had to strip the card down and connect some wires to verify. I want to check whether this bug happens with just this card or also on later ones, and I would prefer not to have to add wires. I plan to do that when/if I can find some affordable secondhand cards.

I passed the details on reading VRM values to Martin at HWiNFO, so maybe that feature will be looked at and added, but it's not straightforward, so idk.

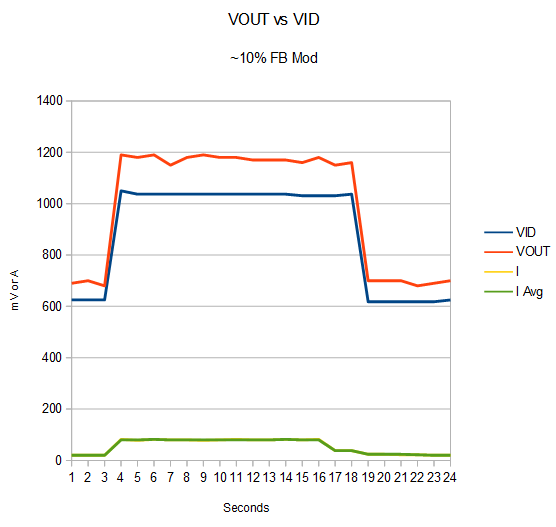

Edit: Okay, with a ~10% FB mod I have verified that it's Vout being given and not the FB sense voltage, so one can read the voltage with software, although under load it will usually be a few mV higher than the sense voltage.
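The reasoning behind that check can be sketched as follows: with a known feedback mod, a readback near the requested value points to the (fooled) sense voltage, while a readback near requested × mod gain points to the real output. The function name, the tolerance, and the sample readings are hypothetical; only the ~10% mod figure comes from the post.

```python
def classify_reading(requested_v: float, reading_v: float,
                     mod_gain: float, tolerance_v: float = 0.02) -> str:
    """Guess which quantity a VRM readback represents on an FB-modded card.

    mod_gain is the factor by which the mod raises the rail (1.10 for ~10%).
    tolerance_v is an assumed allowance for load-dependent offsets.
    The output hypothesis is tested first, so the mod must be large
    compared with the tolerance for the result to be meaningful.
    """
    expected_vout = requested_v * mod_gain
    if abs(reading_v - expected_vout) <= tolerance_v:
        return "vout"
    if abs(reading_v - requested_v) <= tolerance_v:
        return "fb_sense"
    return "inconclusive"


# Hypothetical readings for a 1.000 V request with a ~10% mod.
for reading in (1.105, 1.002, 1.050):
    print(reading, "->", classify_reading(1.000, reading, mod_gain=1.10))
```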

- Joined

- Aug 27, 2023

- Messages

- 302 (0.47/day)

Well, I got hold of a secondhand RTX 3070, and preliminary testing shows some differences, in particular the xBAR clock and others dropping. This results in about a 10% drop in frame rate for identical GPU and memory clocks.

Example with 3DMark Steel Nomad

Note the big drop in xBAR and Video clock. Back in the earlier days of Pascal and cross-flashing FE cards, the XOC vbios gave lower performance clock for clock, and it was noticed that the video clock was lower. At the time the xBAR clock wasn't measured, but it was probably lower too. The effective GPU clock (E-GPU) drops, possibly due to temperature; need to check further.

Normally I would expect 34-35 FPS here.

Note also how 3DMark appears to be using the requested GPU clock (R-GPU) instead of the effective GPU clock. Surprising, considering it's a bespoke benchmark!

Some more testing needs to be done, and I still need to get and try a 40-series card.
