Or if Apple made Windows and Linux drivers. If they opened up to run games and were better for enterprise (better AD/MDM/MDS integration, and better compatibility with Microsoft apps), they would crush it on the consumer side.


Atlas Fallen Optimization Fail: Gain 50% Additional Performance by Turning off the E-cores

- Thread starter btarunr


- Joined: Aug 16, 2005
- Messages: 27,906 (3.84/day)
- Location: Alabama

| System Name | RogueOne |
|---|---|
| Processor | Xeon W9-3495x |
| Motherboard | ASUS w790E Sage SE |
| Cooling | SilverStone XE360-4677 |
| Memory | 128gb Gskill Zeta R5 DDR5 RDIMMs |
| Video Card(s) | MSI SUPRIM Liquid 5090 |
| Storage | 1x 2TB WD SN850X / 2x 8TB GAMMIX S70 |
| Display(s) | 49" Philips Evnia OLED (49M2C8900) |
| Case | Thermaltake Core P3 Pro Snow |
| Audio Device(s) | Moondrop S8's on Schitt Gunnr |
| Power Supply | Seasonic Prime TX-1600 |
| Mouse | Razer Viper mini signature edition (mercury white) |
| Keyboard | Wooting 80 HE White, Gateron Jades |
| VR HMD | Quest 3 |
| Software | Windows 11 Pro Workstation |
| Benchmark Scores | I don't have time for that. |

Atlas Fallen developers either forgot that E-Cores exist (and simply designed the game to load all cores, no matter their capability), or thought they'd be smarter than Intel.

Middle of last year I rotated with a team of nothing but SDEs. They were having issues with performance on one of the new services we were spinning up. We were recording remote sessions and encoding them into video to be retrieved later.

They didn't understand why we were burning through 192-core AMD systems and still getting poor performance. All of these guys were pretty removed from hardware in general. When I explained that we needed to switch to our GPU compute cluster instead of using CPU threads, since the GPUs can do hardware encode/decode, they were legit shocked.

We switched. It saved hundreds of thousands in internal costs and took about 2 weeks for them to recode for GPUs. I got a promotion out of it. I rotated off the team not understanding how they had made it that far.

Sometimes these guys literally just sit in front of a game engine and tick a box that says "use all available CPU cores", I swear to god. About 8 months later I was on another team, and I had to explain to a TAM (thankfully not an engineer) why 10 Gb/s links on our storage offload system did NOT mean 10 gigaBYTES/s, and that the time quotes they were giving were going to be drastically off.

They got paid more than me.

Always shoot for the stars in your careers, people. Even if you don't think you can cut it. The sky is already full of some pretty dim ones.
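For anyone who wants to check the bits-versus-bytes point: a link rated at 10 Gb/s moves at most 10 / 8 = 1.25 GB/s, before any protocol overhead. A minimal sketch of the arithmetic, assuming decimal units; `transfer_time_seconds` and its optional `efficiency` factor are made up for illustration.

```python
def transfer_time_seconds(size_bytes: float, link_gbit_per_s: float,
                          efficiency: float = 1.0) -> float:
    """Seconds needed to move size_bytes over a link rated in gigaBITS per second.

    efficiency is a hypothetical fudge factor in (0, 1] for protocol overhead;
    1.0 means the ideal line rate.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bytes_per_second = link_gbit_per_s * 1e9 / 8 * efficiency  # 8 bits per byte
    return size_bytes / bytes_per_second


TB = 1e12  # decimal terabyte

naive = TB / 10e9                      # misreading "10 Gb/s" as 10 GB/s
ideal = transfer_time_seconds(TB, 10)  # best case the link can actually do
print(f"naive: {naive:.0f} s, best case: {ideal:.0f} s")  # naive: 100 s, best case: 800 s
```

So a time quote based on "10 gigaBYTES/s" is low by a factor of 8 even on a perfect link, and real-world overhead only widens the gap.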

- Joined: May 9, 2012
- Messages: 8,584 (1.78/day)
- Location: Ovronnaz, Wallis, Switzerland

| System Name | main/SFFHTPCARGH!(tm)/Xiaomi Mi TV Stick/Samsung Galaxy S25/Ally |
|---|---|
| Processor | Ryzen 7 5800X3D/i7-3770/S905X/Snapdragon 8 Elite/Ryzen Z1 Extreme |
| Motherboard | MSI MAG B550 Tomahawk/HP SFF Q77 Express/uh?/uh?/Asus |
| Cooling | Enermax ETS-T50 Axe aRGB /basic HP HSF /errr.../oh! liqui..wait, no:sizable vapor chamber/a nice one |
| Memory | 64gb DDR4 3600/8gb DDR3 1600/2gbLPDDR3/12gbLPDDR5x/16gb(10 sys)LPDDR5 6400 |
| Video Card(s) | Hellhound Spectral White RX 7900 XTX 24gb/GT 730/Mali 450MP5/Adreno 830/Radeon 780M 6gb LPDDR5 |
| Storage | 250gb870EVO/500gb860EVO/2tbSandisk/NVMe2tb+1tb/4tbextreme V2/1TB Arion/500gb/8gb/512gb/4tb SN850X |
| Display(s) | X58222 32" 2880x1620/32"FHDTV/273E3LHSB 27" 1920x1080/6.67"/LTPO AMOLED panel FHD+120hz/7" FHD 120hz |
| Case | Cougar Panzer Max/Elite 8300 SFF/None/Gorilla Glass Victus 2/front-stock back-JSAUX RGB transparent |
| Audio Device(s) | Logi Z333/SB Audigy RX/HDMI/HDMI/Dolby Atmos/CVJ NightElf/Moondrop Chu II+BT20S/Nekocake GfL QBZ-191 |
| Power Supply | Chieftec Proton BDF-1000C /HP 240w/12v 1.5A/USAMS GAN PD 33w/USAMS GAN 100w |
| Mouse | Speedlink Sovos Vertical-Asus ROG Spatha-Logi Ergo M575/Xiaomi XMRM-006/touch/touch |
| Keyboard | Endorfy Thock 75%/Lofree Edge/none/touch/virtual |
| VR HMD | Medion Erazer |
| Software | Win11/Win8.1/Android TV 8.1/Android 15/Win11 |
| Benchmark Scores | bench...mark? i do leave mark on bench sometime, to remember which one is the most comfortable. :o |

More accurate to say AMD for now.

Yep, but they will aim them where they're actually needed (laptop/mobile), if I am not mistaken.

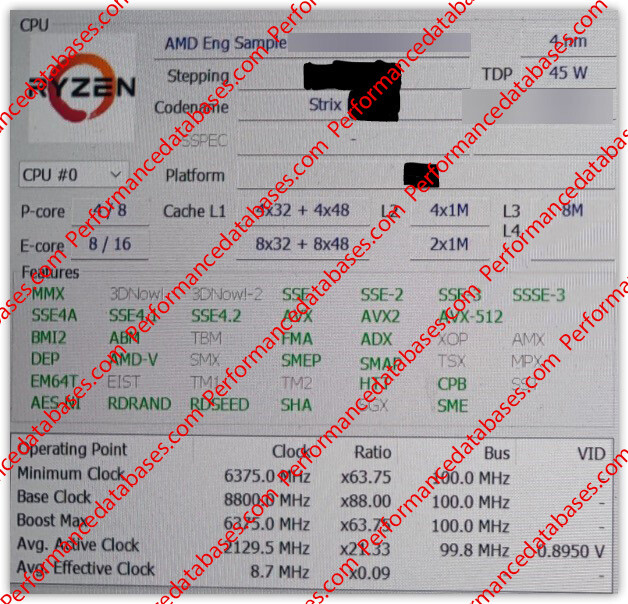

AMD "Strix Point" Company's First Hybrid Processor, 4P+8E ES Surfaces

Beating previous reports that AMD is increasing the CPU core count of its mobile monolithic processors from the present 8-core/16-thread to 12-core/24-thread; we are learning that the next-gen processor from the company, codenamed "Strix Point," will in fact be the company's first hybrid... (www.techpowerup.com)

- Joined: Apr 14, 2016
- Messages: 288 (0.09/day)

AMD right about now!

OS scheduling is independent of Thread Director. I have yet to see what TD actually does, and how efficient it is, or whether it is any better than a similar but much better software solution I posted in the other thread!

Are you sure? https://www.techpowerup.com/312237/amd-strix-point-companys-first-hybrid-processor-4p-8e-es-surfaces

If this comes true, will AMD be in the same boat, or even worse off, since Intel has been dominating this technology for much longer?

How are they dominating? They literally had to disable AVX-512 on ADL; in fact, RPL might also just have it permanently(?) disabled.

Also, I have yet to see what Thread Director actually does, or how. Does anyone actually have any benchmarks for it?


Most of the SDEs I have worked with have no idea about the latest and greatest in hardware. Not that they always need to, but you'd think they'd at least try to acquaint themselves with something so fundamental to their work.

- Joined: Mar 18, 2023
- Messages: 1,071 (1.27/day)

| System Name | Never trust a socket with less than 2000 pins |
|---|---|

It would be interesting to run the same test on a 7950X, once with all 16 cores and once with just 8 cores enabled.

That would tell you whether this is a thread synchronization problem that ends up having so much locking overhead that adding cores makes it slower instead of faster.
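The hypothesis can be pictured with a toy cost model: the parallel part of each frame shrinks as threads are added, while a per-thread locking cost grows. This is a hypothetical sketch (made-up numbers, made-up function names), not a model of Atlas Fallen's engine.

```python
def frame_time(n_threads: int, serial: float, parallel: float, sync_cost: float) -> float:
    """Toy model of one frame, in milliseconds (all inputs hypothetical).

    serial:    work that cannot be split
    parallel:  work divided evenly across n_threads
    sync_cost: locking/synchronization overhead added per thread
    """
    return serial + parallel / n_threads + sync_cost * n_threads


def best_thread_count(max_threads: int, serial: float, parallel: float, sync_cost: float) -> int:
    """Thread count in 1..max_threads with the lowest modelled frame time."""
    return min(range(1, max_threads + 1),
               key=lambda n: frame_time(n, serial, parallel, sync_cost))


# Hypothetical: 2 ms serial, 32 ms parallelisable, 0.5 ms lock overhead per thread
for n in (8, 16, 24, 32):
    print(n, round(frame_time(n, 2.0, 32.0, 0.5), 2))
print("best:", best_thread_count(32, 2.0, 32.0, 0.5))
```

With these numbers the modelled frame time is 10 ms at 8 threads and 12 ms at 16, so adding cores makes things slower. If a 7950X showed the same pattern with 8 versus 16 cores enabled, that would point at synchronization overhead rather than at E-cores specifically.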


- Joined: Jun 18, 2019
- Messages: 138 (0.06/day)

They're a win for people running multithreaded workloads. Not because they're "efficient", but because they can squeeze more perf per sq mm (i.e. you can fit 3-4 E-cores where only 2 P-cores would fit and get better performance as a result).

4 E-cores = 1 P-core.

E-cores are not a failure, but, as with any heterogeneous design, results are no longer uniform; they will vary with the workload.

- Joined: Apr 28, 2020
- Messages: 104 (0.05/day)

I skimmed through this, but I don't see you mentioning whether you disabled the E-cores via the BIOS or just via Task Manager affinity.
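For reference, an affinity mask is just a bitmask with one bit per logical CPU. The sketch below builds a P-cores-only mask under an assumption that should be checked on your own machine: that the P-core hardware threads are numbered first and the E-cores after them. The helper names are hypothetical.

```python
def p_core_logical_cpus(p_cores: int, threads_per_p_core: int = 2) -> list[int]:
    """Logical CPU indices belonging to the P-cores.

    ASSUMPTION: the OS numbers the P-core hardware threads first (0, 1, 2, ...)
    and the E-cores after them. Verify your own topology before relying on it.
    """
    return list(range(p_cores * threads_per_p_core))


def affinity_mask_hex(cpus: list[int]) -> str:
    """Bitmask with bit i set for each logical CPU i, as an uppercase hex string."""
    mask = 0
    for cpu in cpus:
        mask |= 1 << cpu
    return format(mask, "X")


# i5-13600K: 6 P-cores with Hyper-Threading (12 threads) + 8 E-cores = 20 logical CPUs
cpus = p_core_logical_cpus(6)
print(cpus)                     # 0 through 11
print(affinity_mask_hex(cpus))  # FFF
```

On Windows, `start /affinity FFF game.exe` in cmd.exe takes a hex mask like this, and Task Manager's "Set affinity" does the same thing with checkboxes. Unlike disabling E-cores in the BIOS, affinity leaves the E-cores enabled, so any side effect of their mere presence remains and the two methods may not benchmark the same.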

- Joined: Dec 12, 2012
- Messages: 843 (0.18/day)
- Location: Poland

| System Name | THU |
|---|---|
| Processor | Intel Core i5-13600KF |
| Motherboard | ASUS PRIME Z790-P D4 |
| Cooling | SilentiumPC Fortis 3 v2 + Arctic Cooling MX-2 |
| Memory | Crucial Ballistix 2x16 GB DDR4-3600 CL16 (dual rank) |
| Video Card(s) | MSI GeForce RTX 4070 Ventus 3X OC 12 GB GDDR6X (2505/21000 @ 0.91 V) |
| Storage | Lexar NM790 2 TB + Corsair MP510 960 GB + PNY XLR8 CS3030 500 GB + Toshiba E300 3 TB |
| Display(s) | LG OLED C8 55" + ASUS VP229Q |
| Case | Fractal Design Define R6 |
| Audio Device(s) | Yamaha RX-V4A + Monitor Audio Bronze 6 + Bronze FX / FiiO E10K-TC + Koss Porta Pro |
| Power Supply | Corsair RM650 |
| Mouse | Logitech M705 Marathon |
| Keyboard | Corsair K55 RGB PRO |
| Software | Windows 10 Home |
| Benchmark Scores | Benchmarks in 2025? |


Using the 13600K as an example, 8 E-cores offer ~60% more performance compared to 2 P-cores, while using ~40% more power. That's roughly a 15% gain in efficiency, which is completely irrelevant on desktop.

That's why it actually makes no sense to have a 6P+8E SKU on desktop, when you could have an 8P+0E SKU offering very similar performance and power consumption.

Desktops are always getting the same chips as laptops. They could easily make a 12-core die instead of 8P+16E, but they wouldn't do it just for desktops. Besides, it's great marketing when your top CPU has 24 cores while the competition only has 16.

I don't mind them putting E-cores into i7s and i9s to offer more than 8 cores total, but including E-cores with fewer than 8 P-cores is just IDIOTIC.

There's also a reason why Sapphire Rapids server CPUs don't have E-cores. There's no need whatsoever.
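The efficiency number is the ratio of the two quoted figures: 1.60 / 1.40 ≈ 1.14, i.e. about 14% more performance per watt, which rounds to the ~15% mentioned. A trivial helper using only the numbers from this post (the function name is illustrative):

```python
def efficiency_gain(perf_ratio: float, power_ratio: float) -> float:
    """Relative change in performance per watt between two configurations.

    perf_ratio and power_ratio compare configuration B to configuration A,
    e.g. 1.60 means "60% more". Returns about 0.143 for a ~14% gain.
    """
    return perf_ratio / power_ratio - 1


# Figures quoted above for the 13600K: 8 E-cores vs. 2 P-cores
gain = efficiency_gain(1.60, 1.40)
print(f"{gain:.1%}")  # 14.3%
```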

- Joined: Nov 13, 2007
- Messages: 11,344 (1.76/day)
- Location: Austin Texas

| System Name | Arrow in the Knee |
|---|---|
| Processor | 265KF -50mv, 32 NGU 34 D2D 40 ring |
| Motherboard | ASUS PRIME Z890-M |
| Cooling | Thermalright Phantom Spirit EVO (Intake) |
| Memory | 64GB DDR5 7200 CL34-44-44-44-88 TREFI 65535 |
| Video Card(s) | RTX 4090 FE |
| Storage | 2TB WD SN850, 4TB WD SN850X |
| Display(s) | Alienware 32" 4k 240hz OLED |
| Case | Jonsbo Z20 |
| Audio Device(s) | Yes |
| Power Supply | Corsair SF750 |
| Mouse | DeathadderV2 X Hyperspeed |
| Keyboard | Aula F75 cream switches |
| Software | Windows 11 |
| Benchmark Scores | They're pretty good, nothing crazy. |


The 13600K got priced out ultimately, but E-cores are not about efficiency; they're about saving space, with a little efficiency sprinkled in. When the 13600K cost as much as the 7700X, and AM5 was still early, it was a much better value.

Nowadays not so much.

- Joined: Jun 24, 2017
- Messages: 224 (0.08/day)

I think the real strategy behind it is to widen the gap between desktop PCs and workstation/server computers: reduce PCIe lanes, drop AVX-512, drop ECC, increase M.2 slots, etc.

Camouflage? The last 100 or 200 MHz you can squeeze out of 50-100 W on 8 "performance" cores.

No desktop consumer wins with E-cores. Not when you can park your cores to hit a specific package power target.


- Joined: Feb 15, 2019
- Messages: 1,754 (0.75/day)

| System Name | Personal Gaming Rig |
|---|---|
| Processor | Ryzen 7800X3D |
| Motherboard | MSI X670E Carbon |
| Cooling | MO-RA 3 420 |
| Memory | 32GB 6000MHz |
| Video Card(s) | RTX 4090 ICHILL FROSTBITE ULTRA |
| Storage | 4x 2TB Nvme |
| Display(s) | Samsung G8 OLED |
| Case | Silverstone FT04 |

For those who seem confused about AMD's version of P and E cores:

AMD is using a regular core (P) and a cache-reduced core (E). They are the SAME architecture, support the SAME instructions, and work the SAME way in computing tasks. The only difference is the cache size, which affects the speed of data fetching, so the small cores are slower in certain tasks. So the system can treat them as "faster core and slower core". Modern OSes have dealt with that approach for years, ever since core boosting was introduced. Therefore there should be no problem loading multiple threads of a single programme onto AMD's P and E cores simultaneously.

Intel's P and E cores are completely DIFFERENT architectures, support DIFFERENT instructions and work in DIFFERENT ways in computing tasks. This is the origin of all the scheduling problems we have seen since ADL, and the reason behind the AVX-512 drama. The scheduler cannot simply treat them as "faster and slower cores" when they are inherently different architectures, and programmes tend to have problems loading multiple threads onto them simultaneously (except a few programmes that worked very hard on optimization, like Cinebench).

So the approach on Intel's side is usually to treat it as "faster CPU and slower CPU":

When you need something fast, slap it onto the P cores and the P cores only.

When it is a "minor task", slap it onto the E cores and the E cores only.

However, no one wants to be a "minor task", so every programme requests to run on the P cores when they are loaded. Then the OS forcibly picks what it thinks is "minor" and slaps it onto the E cores, creating the situation of "P cores working, E cores watching" and vice versa. It sometimes also creates compatibility problems when the scheduler loads multiple threads of a single programme onto Intel's P and E cores simultaneously.

It is also tediously horrible for virtualization, and the root cause of why Intel's own SR Xeon CPUs are either "P cores only" or "E cores only" but not both.

So think twice when someone asks "Strix or not Strix?" when looking at the problems introduced by Intel's P and E approach. Those problems are mainly caused by the DIFFERENT architectures, not different speeds.


- Joined: Jul 5, 2013
- Messages: 31,813 (7.25/day)

Do games themselves have to be optimised/aware of P- versus E-cores?

Seems clear that they do. It doesn't take much in the coding department to do so, either.

- Joined: Dec 1, 2020
- Messages: 562 (0.33/day)

| Processor | Ryzen 5 7600X |
|---|---|
| Motherboard | ASRock B650M PG Riptide |
| Cooling | Noctua NH-D15 |
| Memory | DDR5 6000Mhz CL28 32GB |
| Video Card(s) | Nvidia Geforce RTX 3070 Palit GamingPro OC |
| Storage | Corsair MP600 Force Series Gen.4 1TB |

8 E-cores take more space than 2 P-cores; in the space of 2 P-cores you can fit 6.5 or 7 E-cores. 7 E-cores would still be faster than 2 P-cores in Cinebench, but the main problem is the lack of instructions, and there are tasks that will run faster on the 2 P-cores. That is the whole problem with E-cores: they are not always faster.

- Joined: Aug 6, 2023
- Messages: 12 (0.02/day)

| System Name | Intel PC simple |
|---|---|
| Processor | i3 12100F |
| Motherboard | Gigabyte H610m V2 |
| Cooling | Stock cooling |
| Memory | ADATA 24gb dual channel |
| Video Card(s) | Asus dual rx 6600 xt |
| Storage | Nvme 512gb + SSD 1TB + hdd WD 1TB + 2 hdd ext 2tb |
| Display(s) | Viewsonic 24" 1080p vx2452 |
| Case | Darkflash DLM 21 |
| Audio Device(s) | logitech z607 |
| Power Supply | Evga 450 br |
| Mouse | HP m100 |
| Keyboard | Ozone strikebattle |
| Software | Windows 11 |

So is it worth choosing a CPU with E-cores or not? If you had to choose between the 13400F and the 5700X, which is the better choice?

Can the E-cores be disabled for one specific game only, for example this Atlas game?

Will Intel's 15th gen still use E-cores?
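On the per-game question: the BIOS switch is global, but CPU affinity can confine a single program to the P-cores while everything else keeps using all cores. On Windows that means Task Manager's "Set affinity" or `start /affinity`. On Linux (for example when running a game through Proton), a minimal Python sketch looks like this; `launch_pinned` is a hypothetical helper, and which CPU numbers are P-cores depends on your topology.

```python
import os
import subprocess


def launch_pinned(cmd: list[str], cpus: set[int], **popen_kwargs) -> subprocess.Popen:
    """Start cmd restricted to the given logical CPUs (Linux only).

    The affinity is set in the child just before the program starts, so only
    this one program (and any threads or processes it spawns, which inherit
    the mask) is confined; the rest of the system keeps using every core.
    """
    def pin_child() -> None:
        os.sched_setaffinity(0, cpus)  # pid 0 = the calling process, i.e. the child

    return subprocess.Popen(cmd, preexec_fn=pin_child, **popen_kwargs)
```

For example, assuming a 13600K whose P-core threads are numbered 0 to 11, `launch_pinned(["./game"], set(range(12)))` (with `./game` as a placeholder) does roughly what `taskset -c 0-11 ./game` does from a shell.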


- Joined: Aug 22, 2007
- Messages: 3,703 (0.57/day)
- Location: Terra

| System Name | :) |
|---|---|
| Processor | Intel 13700k |
| Motherboard | Gigabyte z790 UD AC |
| Cooling | Noctua NH-D15 |
| Memory | 64GB GSKILL DDR5 |
| Video Card(s) | Gigabyte RTX 4090 Gaming OC |
| Storage | 960GB Optane 905P U.2 SSD + 4TB PCIe4 U.2 SSD |
| Display(s) | Alienware AW3423DW 175Hz QD-OLED + AOC Agon Pro AG276QZD2 240Hz QD-OLED |
| Case | Fractal Design Torrent |
| Audio Device(s) | MOTU M4 - JBL 305P MKII w/2x JL Audio 10 Sealed --- X-Fi Titanium HD - Presonus Eris E5 - JBL 4412 |
| Power Supply | Silverstone 1000W |
| Mouse | Roccat Kain 122 AIMO |
| Keyboard | KBD67 Lite / Mammoth75 |
| VR HMD | Reverb G2 V2 / Quest 3 |
| Software | Win 11 Pro |


Yeah, it really is shocking how many people in tech, and even in computer tech (semiconductors, etc.), have no idea about hardware. Many times at work I facepalm internally.

- Joined: May 14, 2004
- Messages: 28,948 (3.75/day)

| Processor | Ryzen 7 5700X |
|---|---|
| Memory | 48 GB |
| Video Card(s) | RTX 4080 |
| Storage | 2x HDD RAID 1, 3x M.2 NVMe |
| Display(s) | 30" 2560x1600 + 19" 1280x1024 |
| Software | Windows 10 64-bit |

The article doesn't describe whether the E-cores were disabled or whether they used something like process affinity to limit the process to P-cores only. If it's the former, then it's very possibly a ring bus issue: when the E-cores are active, the ring bus clock is forced considerably lower, thus lowering the performance of the P-cores.

The E-cores were disabled through the BIOS; I'll mention that in the article.

Also @Battler624 for the same question


- Joined: Aug 3, 2011
- Messages: 2,342 (0.46/day)
- Location: Walkabout Creek

| System Name | Raptor Baked |
|---|---|
| Processor | 14900k w.c. |
| Motherboard | Z790 Hero |
| Cooling | w.c. |
| Memory | 48GB G.Skill 7200 |
| Video Card(s) | Zotac 4080 w.c. |
| Storage | 2TB Kingston kc3k |
| Display(s) | Samsung 34" G8 |
| Case | Corsair 460X |
| Audio Device(s) | Onboard |
| Power Supply | PCIe5 850w |
| Mouse | Razor Basilisk v3 |
| Keyboard | Corsair |
| Software | Win 11 |
| Benchmark Scores | Cool n Quiet. |


Did you try disabling the E-cores in groups of 4?

Like 8P+4E, 8P+8E and 8P+12E, to see what that does, or is there no point?

- Joined: May 14, 2004
- Messages: 28,948 (3.75/day)


Except a few programmes that worked very hard on optimization, like Cinebench

Do you have a source for that? AFAIK Cinebench just splits the load without being aware of anything, which is easy to do, especially if you have "just fast and slower cores". P-cores will render more pixels and E-cores fewer, but they still contribute as much as they can.
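The "just split the load" approach can be sketched as a shared queue of equally sized tiles that idle cores pull from. The event-driven simulation below is a hypothetical illustration, not Cinebench's code; the core speeds are made-up relative values.

```python
import heapq


def simulate_tile_queue(n_tiles: int, core_speeds: list[float],
                        tile_cost: float = 1.0) -> tuple[list[int], float]:
    """Simulate cores pulling equally sized tiles from a shared queue.

    core_speeds are hypothetical relative speeds (e.g. 1.0 for a P-core,
    0.5 for an E-core). A core takes the next tile as soon as it is idle,
    so faster cores naturally end up rendering more tiles.
    Returns (tiles rendered per core, time at which the last tile finishes).
    """
    tiles_done = [0] * len(core_speeds)
    # Min-heap of (time this core becomes idle, core index); all start idle at t=0.
    idle_at = [(0.0, core) for core in range(len(core_speeds))]
    heapq.heapify(idle_at)
    finish = 0.0
    for _ in range(n_tiles):
        t, core = heapq.heappop(idle_at)  # earliest idle core grabs the tile
        done = t + tile_cost / core_speeds[core]
        tiles_done[core] += 1
        finish = max(finish, done)
        heapq.heappush(idle_at, (done, core))
    return tiles_done, finish


# Hypothetical: 2 fast cores (1.0) + 2 slow cores (0.5), 12 tiles
counts, makespan = simulate_tile_queue(12, [1.0, 1.0, 0.5, 0.5])
print(counts, makespan)  # [4, 4, 2, 2] 4.0
```

Here the two fast cores render 4 tiles each and the two slow ones 2 each, and everything finishes at t = 4.0, the best possible for 12 tiles at a combined speed of 3 tiles per time unit. The weak spot is the tail: if the last tile happens to land on a slow core, the others sit idle until it finishes, which is why smaller tiles tend to help.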

So is it worth choosing a CPU with E-cores or not? If you had to choose between the 13400F and the 5700X, which is the better choice?

The 13400F is slightly faster than the 5700X for gaming (when not GPU limited: https://www.techpowerup.com/review/intel-core-i5-13400f/17.html).


But what you actually want is RPL with the larger cache (the 13600K in the same chart); it's not about the cores or the MHz, but about the cache.

Which is why the 7800X3D is so good: https://www.techpowerup.com/review/amd-ryzen-7-7800x3d/19.html

- Joined: May 22, 2015
- Messages: 14,414 (3.89/day)

| Processor | Intel i5-12600k |
|---|---|
| Motherboard | Asus H670 TUF |
| Cooling | Arctic Freezer 34 |
| Memory | 2x16GB DDR4 3600 G.Skill Ripjaws V |
| Video Card(s) | EVGA GTX 1060 SC |
| Storage | 500GB Samsung 970 EVO, 500GB Samsung 850 EVO, 1TB Crucial MX300 and 2TB Crucial MX500 |
| Display(s) | Dell U3219Q + HP ZR24w |
| Case | Raijintek Thetis |
| Audio Device(s) | Audioquest Dragonfly Red :D |
| Power Supply | Seasonic 620W M12 |
| Mouse | Logitech G502 Proteus Core |
| Keyboard | G.Skill KM780R |
| Software | Arch Linux + Win10 |

There's still work involved in splitting the load into chunks (they're not spinning off one task for each pixel, nor are they spinning off a task for a whole screen/scene). And the work of waiting for all tasks to finish to put a scene back together (synchronization) still exists, even if it's probably simpler than what happens in a game engine.

Though a game engine shouldn't be that much different: a faster core can compute the updated paths for 12 characters while a slower core will only handle 6, or compute the geometry for 100 objects while the other only computes 50...
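The engine case differs when the work is divided up front instead of pulled from a queue, because the frame is only done when the slowest core is done. A hypothetical sketch comparing an equal split with a split proportional to core speed (the 1.0 vs 0.5 speeds mirror the 12-vs-6 characters example; all numbers are made up):

```python
def frame_time_static_split(total_work: float, core_speeds: list[float],
                            proportional: bool) -> float:
    """Frame time when work is divided up front and the frame waits for every core.

    proportional=False gives each core the same share; proportional=True gives
    each core a share proportional to its (hypothetical) relative speed.
    """
    if proportional:
        total_speed = sum(core_speeds)
        shares = [total_work * speed / total_speed for speed in core_speeds]
    else:
        shares = [total_work / len(core_speeds)] * len(core_speeds)
    # The frame is finished only when the last core finishes its share.
    return max(share / speed for share, speed in zip(shares, core_speeds))


# Hypothetical: 2 fast cores at 1.0 and 2 slow cores at 0.5, 36 units of work
speeds = [1.0, 1.0, 0.5, 0.5]
print(frame_time_static_split(36, speeds, proportional=False))  # 18.0
print(frame_time_static_split(36, speeds, proportional=True))   # 12.0
```

With an equal split the slow cores finish last (18 time units), which is no better than giving all 36 units to the two fast cores alone (36 / 2 = 18). Splitting in proportion to speed brings it down to 12.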

Atlas Fallen has some internal weirdness; I mean, it requires a 6600K for FHD@30fps on low settings.

- Joined: Feb 1, 2019
- Messages: 4,053 (1.72/day)
- Location: UK, Midlands

| System Name | Main PC |
|---|---|
| Processor | 13700k |
| Motherboard | Asrock Z690 Steel Legend D4 - Bios 13.02 |
| Cooling | Noctua NH-D15S |
| Memory | 32 Gig 3200CL14 |
| Video Card(s) | 4080 RTX SUPER FE 16G |
| Storage | 1TB 980 PRO, 2TB SN850X, 2TB DC P4600, 1TB 860 EVO, 4TB WD SA510, 2x 3TB WD Red, 1x 4TB WD Red |
| Display(s) | LG 27GL850 |
| Case | Fractal Define R4 |
| Audio Device(s) | Soundblaster AE-9 |
| Power Supply | Antec HCG 750 Gold |
| Software | Windows 10 21H2 LTSC |

Not sure if Cinebench did any specific optimisations for Intel's hybrid CPUs; I still agree with W1zzard. On my system, Cinebench itself behaved wrongly until I adjusted the CPU scheduler settings in the power scheme to prefer P-cores (no issue on Windows 11, thanks to its out-of-the-box Intel Thread Director support).

It's just that the devs of this game tried to be too clever.

- Joined: Dec 12, 2012
- Messages: 843 (0.18/day)
- Location: Poland



MHz is just as important as cache, though. The 13600K has a 24% higher clock speed. You wouldn't get 22% more performance just from the slight cache increase. That's why the 13600K is slightly faster than the 12900K. Its clock speed is 200 MHz higher and it has more L2 cache, but the L3 cache is 20% smaller.

It's also why the 7600 is faster than the 5800X3D on average (there are exceptions in very cache-sensitive games).

But in relation to the original question, a newer 6C/12T CPU is always better than an older 8C/16T for gaming. The 13400 has better IPC than the 5700X, that's why it's faster, not because of the 4 E-cores.

Fewer cores allow you to push the frequency higher, and that has always been the most important thing for gaming.

Fun fact: Destiny 2 has small hitches when loading between zones while traversing the world. They were usually very noticeable on my 9700K. On my 13600K @ 3.3 GHz they are still there, but much smaller and less frequent. But at 5.1 GHz they never happen at all.

I'm playing at 4K60, which means that even if a faster CPU doesn't increase your framerate, it can still help with other things like stutters and hitches.

I expect the CPU-attached NVMe drive helps as well to some degree. On the 9700K it was going through the chipset.

- Joined: May 14, 2004
- Messages: 28,948 (3.75/day)


They split it into blocks of pixels, but it's the same thing... this is trivial, it's just a few lines of code.

There is just one synchronization per run, so once every few minutes; this isn't even worth calling "synchronization". I doubt that it resubmits the last chunk to a faster core if it's waiting for a slower core to finish that last piece.

- Joined: Feb 15, 2019
- Messages: 1,754 (0.75/day)


Do you have a source for that?

No, I don't.

Maybe I should rephrase my sentence:

Except a few programmes, like Cinebench, for which Intel themselves optimized their Thread Director very heavily.