Deleted member 50521

Guest

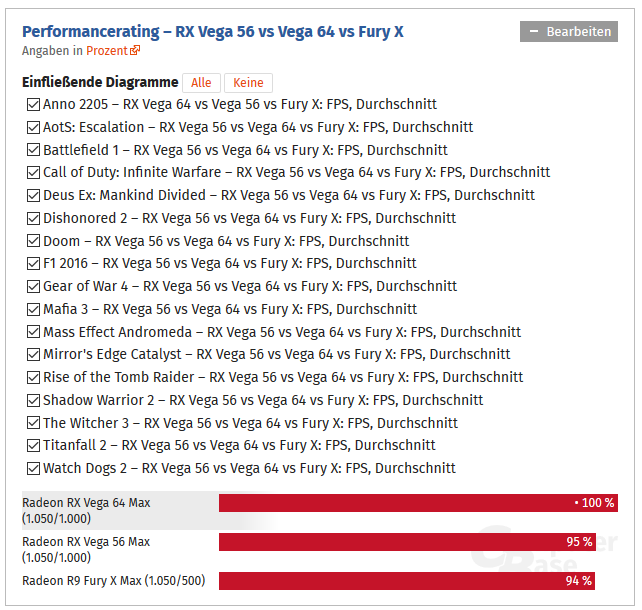

https://www.computerbase.de/2017-08...ts-escalation-rx-vega-56-vs-vega-64-vs-fury-x

So basically, performance per clock cycle, which in theory should come purely from design improvements, gives only a tiny boost. Most of the performance gain comes from the higher clock rate.
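The reasoning above can be made concrete with a minimal Python sketch. It assumes that performance scales as (performance per clock) × (clock frequency). The 1.05 GHz and 1.7 GHz clocks are the Fiji and Vega 10 figures from the AnandTech quote. The 1.75x overall speedup and the function name `per_clock_gain` are hypothetical and are used for illustration only.

```python
def per_clock_gain(total_speedup: float, old_clock_ghz: float, new_clock_ghz: float) -> float:
    """Split a total speedup into its clock part and its per-clock part.

    Assumes performance = (performance per clock) * (clock frequency), so
    per-clock ratio = total speedup / clock ratio.
    """
    clock_ratio = new_clock_ghz / old_clock_ghz
    return total_speedup / clock_ratio


if __name__ == "__main__":
    fiji_ghz, vega_ghz = 1.05, 1.7  # clock figures from the AnandTech quote
    hypothetical_speedup = 1.75     # hypothetical overall gain, not measured data
    print(f"clock ratio: {vega_ghz / fiji_ghz:.2f}")
    print(f"per-clock ratio: {per_clock_gain(hypothetical_speedup, fiji_ghz, vega_ghz):.2f}")
```

Under this assumption, a hypothetical 1.75x overall gain would come mostly from the clock increase (about 1.62x), with only about an 8% per-clock improvement. Real boost clocks vary under load, so the example shows only how the calculation works.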

http://www.anandtech.com/show/11717/the-amd-radeon-rx-vega-64-and-56-review/2

Anandtech said: That space is put to good use however, as it contains a staggering 12.5 billion transistors. This is 3.9B more than Fiji, and still 500M more than NVIDIA’s GP102 GPU. So outside of NVIDIA’s dedicated compute GPUs, the GP100 and GV100, Vega 10 is now the largest consumer & professional GPU on the market.

Given the overall design similarities between Vega 10 and Fiji, this gives us a very rare opportunity to look at the cost of Vega’s architectural features in terms of transistors. Without additional functional units, the vast majority of the difference in transistor counts comes down to enabling new features.

Talking to AMD’s engineers, what especially surprised me is where the bulk of those transistors went; the single largest consumer of the additional 3.9B transistors was spent on designing the chip to clock much higher than Fiji. Vega 10 can reach 1.7GHz, whereas Fiji couldn’t do much more than 1.05GHz. Additional transistors are needed to add pipeline stages at various points or build in latency hiding mechanisms, as electrons can only move so far on a single (ever shortening) clock cycle; this is something we’ve seen in NVIDIA’s Pascal, not to mention countless CPU designs. Still, what it means is that those 3.9B transistors are serving a very important performance purpose: allowing AMD to clock the card high enough to see significant performance gains over Fiji.
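A toy Python model can show why extra pipeline stages allow higher clocks. This is a textbook simplification and does not use AMD's design data. It assumes that a fixed total logic delay is split evenly across N stages and that each stage adds a fixed register (latch) overhead. The function name `max_clock_ghz` and all delay values are hypothetical.

```python
def max_clock_ghz(logic_delay_ns: float, stages: int, latch_overhead_ns: float) -> float:
    """Toy pipelining model (hypothetical): cycle time is the logic delay per
    stage plus a fixed per-stage register overhead; f_max = 1 / cycle time."""
    if stages < 1:
        raise ValueError("need at least one pipeline stage")
    cycle_ns = logic_delay_ns / stages + latch_overhead_ns
    return 1.0 / cycle_ns  # 1 / ns == GHz


if __name__ == "__main__":
    # Made-up delays, chosen only to show the trend.
    for n in (4, 6, 8):
        print(n, round(max_clock_ghz(3.0, n, 0.1), 2))
```

With these made-up numbers, going from 4 to 8 stages raises the ceiling from roughly 1.18 GHz to about 2.11 GHz. The fixed per-stage overhead caps the gain at 1 / overhead, and every added stage costs extra registers (transistors) and latency.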

This feels like Pentium 4 and Faildozer to me. RTG tried to maximize clock speed, hoping it would give them a performance boost. What they ended up with is a power-hungry monster. I am sure @W1zzard will do some in-depth analysis of the Vega design in the future.