

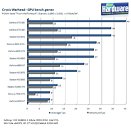

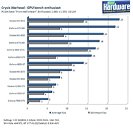

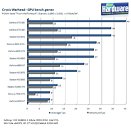

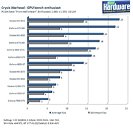

Crytek has worked closely with NVIDIA in developing the Crysis franchise, and it is well known that the original title was optimised for GeForce hardware. However, the original was criticised for its steep hardware requirements, which may have contributed to the game's lukewarm sales. With Crysis Warhead, Crytek promises an improved engine that works better with today's hardware. PC Games Hardware (PCGH) put the new game to the test, not primarily to review the game itself but to examine how it performs on today's hardware. The findings offer some positives. The first is that the game performs in line with the installed hardware, be it GeForce or Radeon: relative results deviated only slightly from what synthetic tests show about each card's capabilities. For example, the Radeon HD 4870 ran neck and neck with the GeForce GTX 260 in "Gamer" mode, with the Radeon achieving a higher minimum frame rate. The same held in the game's "Enthusiast" mode, although there the GeForce edged ahead with a higher average frame rate. The trend continued across the rest of today's GPUs, which is a positive sign.

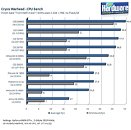

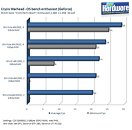

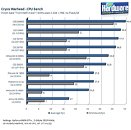

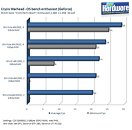

On the CPU side, the game's performance was in many ways proportional to the processor's performance. However, quad-core and dual-core processors traded blows, producing an interesting mix of scores. The Core 2 Extreme QX6850 was only nominally faster than the Core 2 Duo E8400, which runs at the same 3.00 GHz clock speed. The opposite happened with the Core 2 Quad Q6700 and Core 2 Duo E6750: the dual-core chip eked out a 1 fps lead. The CPU scores suggest that the game is still largely comfortable on today's dual-core processors, with quad-cores offering no real advantage. As for system memory, under 64-bit Windows Vista, 4 GB of RAM did improve performance, and the gain was more than nominal.

View at TechPowerUp Main Site



That second screen just made my day, beating HD4870, GTX260 and GTX280.
