- Joined: Jan 31, 2010
- Messages: 5,702 (1.02/day)
- Location: Gougeland (NZ)

| System Name | Cumquat 2021 |
|---|---|
| Processor | AMD Ryzen 7 7800X3D |
| Motherboard | Asus Strix X670E-E Gaming WiFi |
| Cooling | DeepCool LT720 + CM MasterGel Pro TP + Lian Li Uni Fan V2 |
| Memory | 32GB G.Skill Trident Z5 Neo 6000 |
| Video Card(s) | PowerColor Hellhound RX 7800 XT (2550 MHz core / 2450 MHz memory) |
| Storage | 1x ADATA SX8200 Pro NVMe 1TB (Gen3 x4), 1x Samsung 980 Pro NVMe 1TB (Gen4 x4), 12TB HDD storage |
| Display(s) | AOC 24G2 IPS 144Hz FreeSync Premium 1920x1080 |
| Case | Lian Li O11D XL ROG Edition |
| Audio Device(s) | RX 7800 XT via HDMI + Pioneer VSX-531 amp + Technics 100W 5.1 speaker set |
| Power Supply | EVGA 1000W G5 Gold |
| Mouse | Logitech G502 Proteus Core Wired |
| Keyboard | Logitech G915 Wireless |
| Software | Windows 11 Pro x64 (version 24H2) |
| Benchmark Scores | it sucks even more less now ;) |

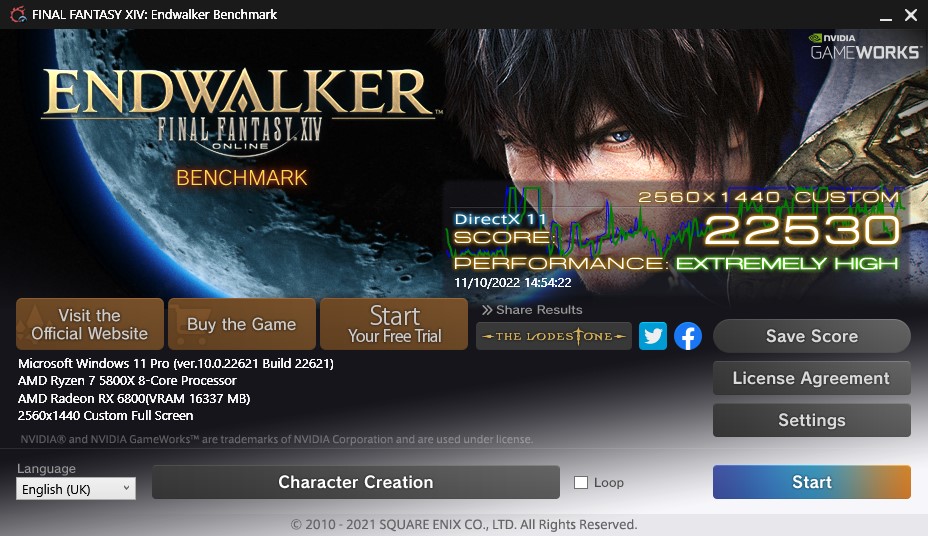

Hmm, seems AMD have done some DX11 magic hoodoo with the newer drivers.

Previous score was 18546; the latest score (above) is with the 22.9.2 drivers.

Even though they took a very long time to finally support Ryzen 5000 on my old boi, the Asus Crosshair VI Hero with the X370 chipset.