it's not

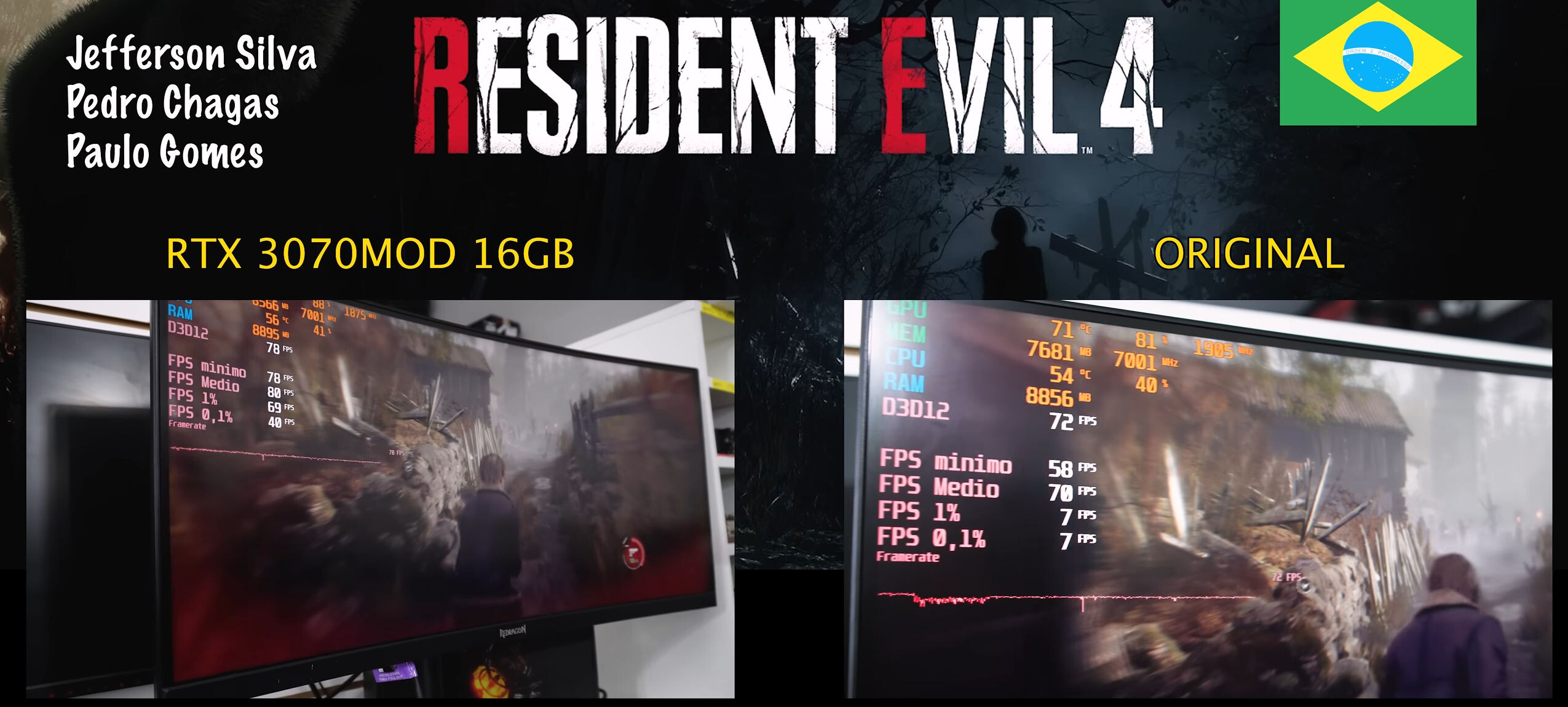

personal views. it's independent benchmarks, independent from my views. you want to max everything, 12gb ain't enough and that's a fact. benchmarks aren;'t views, are facts, scientific measurements if you want.

The implication here is that cards like the RTX 4070/Super, RTX 5070, RX 7700 XT, B570 and B580 aren't great at present, never mind long term (at least 2-3 years).

They're simply budget cards at big prices. Check the prices for 8GB GDDR6 here:

https://www.dramexchange.com/ and see how much it would cost to add 8GB of GDDR6.

Draw your own conclusions.

There's a big difference
