intel rules: on z590 the cpu x16 lanes can be split into different slots, b560 can't.

Well, at least you're clear on contradicting yourself - Intel determines the rules for implementing its platforms, after all. Which bifurcated layouts are allowed is entirely within Intel's power to determine if they want to - and there is plenty of evidence that they do. (Though of course the more complex BIOS programming is no doubt also a turn-off for some motherboard makers.) But generally, bifurcated layouts outside of x8+x8 are very rare. You might be right that their rules are more relaxed now than they used to be, but most boards still don't split things three ways from the CPU.

The Z390 Designare has a really weird layout where the bottom x4 slot is connected to the chipset for an x8+x8+x4 layout, but you can switch its connection to the CPU instead for an x8+x4+x4 layout. I've never seen anything like that, but I guess it provides an option for very latency-sensitive scenarios. Definitely extremely niche, that's for sure.

As for what you're saying about switches: no. The term PCIe switch is generally used for PLX switches, which allow for simultaneous lane multiplication (i.e. several x16 slots fed from a single x16 controller). Bifurcation from the CPU does not require any switches whatsoever. This is handled by the CPU, through the x16 controller actually consisting of several smaller x8 or x4 controllers working together. You need multiplexers/muxes for the lanes that are assigned to multiple ports, but no actual PCIe switches. And PCIe muxes are cheap and simple. (Of course not cheaper than not having them in the first place, but nowhere near the price of a PLX switch, which easily runs $100 or more.) That Designare must actually have a really fascinating mux layout, as the x16 must have 1:2 muxes on four lanes and 1:3 muxes on the last four, with 2:1 muxes further down the same lanes for swapping that slot between the CPU and the PCH.
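To make the mux-versus-switch distinction concrete, here is a rough Python model of a Designare-style board. The slot names, mode names and per-mode lane counts are illustrative assumptions, not Gigabyte's actual schematic. The point is only that every mode redistributes the same 16 CPU lanes (or pulls the bottom slot from the PCH), which muxes can do; handing out more lanes than the CPU has would require a PLX-style switch.

```python
# Illustrative model only: slot names and per-mode lane counts are assumptions,
# not taken from Gigabyte's schematics.
CPU_LANES = 16  # lanes provided by the CPU's x16 controller

# Each mode maps a slot to (lane source, lane count). Muxes decide which
# physical slot a given CPU lane ends up at; they never create new lanes.
MODES = {
    "x16": {"slot1": ("CPU", 16), "slot2": (None, 0), "slot3": ("PCH", 4)},
    "x8+x8+x4": {"slot1": ("CPU", 8), "slot2": ("CPU", 8), "slot3": ("PCH", 4)},
    "x8+x4+x4": {"slot1": ("CPU", 8), "slot2": ("CPU", 4), "slot3": ("CPU", 4)},
}


def cpu_lanes_used(mode: str) -> int:
    """Total CPU lanes handed out to slots in the given mode."""
    return sum(count for source, count in MODES[mode].values() if source == "CPU")


def check_modes() -> None:
    """Verify that no mode exceeds the CPU's lane budget, then print each layout."""
    for mode, slots in MODES.items():
        used = cpu_lanes_used(mode)
        # A mux-only design must stay within the CPU lane budget; exceeding it
        # would need a packet switch (e.g. a PLX chip) that multiplies lanes.
        assert used <= CPU_LANES, f"{mode} needs {used} CPU lanes"
        layout = ", ".join(
            f"{name}: x{n} from {src}" for name, (src, n) in slots.items() if n
        )
        print(f"{mode}: {layout}")


if __name__ == "__main__":
    check_modes()
```

Note that the x8+x8+x4 and x8+x4+x4 modes both use exactly 16 CPU lanes; the only difference is whether slot3's four lanes come from the CPU or the PCH.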

Either way, bifurcated layouts beyond x8+x8 are very rare - though with more differentiated PCIe connectivity between the CPU and PCH than before I can see them becoming a bit more common in the future. It certainly didn't matter much when you either got PCIe 3.0 from the CPU or slightly higher latency PCIe 3.0 through the chipset, but 5.0 on one and 3.0 on the other is definitely another story. Still, I don't think this is likely to be relevant to the discussion at hand: chances are the bottom x4 slot (which is labeled PCIEX4, not PCIEX16_3 or similar) comes from the PCH and not the CPU.
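As a rough illustration of why the generation gap between CPU and PCH lanes matters more now, the per-direction bandwidth of a link can be estimated from its transfer rate and line encoding. PCIe 3.0, 4.0 and 5.0 run at 8, 16 and 32 GT/s per lane with 128b/130b encoding; this sketch ignores packet and protocol overhead, so real-world throughput is somewhat lower.

```python
# Transfer rate per lane in GT/s for each PCIe generation.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding (PCIe 3.0 and later)


def link_bandwidth_gb_s(gen: int, lanes: int) -> float:
    """Approximate GB/s per direction, ignoring packet/protocol overhead."""
    gbit_per_s_per_lane = GT_PER_S[gen] * ENCODING_EFFICIENCY
    return gbit_per_s_per_lane / 8 * lanes  # bits -> bytes, times lane count


if __name__ == "__main__":
    for gen, lanes in [(3, 4), (5, 4), (5, 16)]:
        print(f"PCIe {gen}.0 x{lanes}: ~{link_bandwidth_gb_s(gen, lanes):.1f} GB/s")
```

By this estimate a PCIe 5.0 x4 link offers about 15.8 GB/s per direction versus about 3.9 GB/s for PCIe 3.0 x4, i.e. four times as much, and a full 5.0 x16 link about 63 GB/s.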

(An interesting side note: the leaked Z690 Aorus Master has a single x16 + two slots labeled PCIEX4 and PCIEX4_2, which makes me think both those slots are PCH-based. Which again raises the question of whether there's no bifurcation of the CPU lanes at all, or if they're split into m.2 slots instead. IMO the latter would be sensible, as no GPU in the useful lifetime of this board will make use of PCIe 5.0 x16 anyhow.)

so when we talk about an eligible chipset like z590, there is no further limitation on how mobo vendors can split the cpu x16 into different slots.

actually, the way gigabyte and msi upgraded the same m.2 slot on their z490 boards from gen3 (via the chipset) to gen4 (via the cpu) when an 11th gen cpu is installed is almost identical to what gigabyte did with the bottom x4 pcie slot on the z390 designare.

we don't discuss 8+4+4 on b560 because intel has cpu bifurcation completely banned on b560, not even 8+8, no matter how many mux/demux chips are used.

btw, thanks to intel, the first x8 is still locked as x8 and can't be split into x4+x4 the way amd allows on b550/x570 (4+4+4+4). so only three devices can be recognized in the first pcie x16 slot, even though it has x16 lanes.
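The layout rules claimed so far can be captured in a small lookup table. The table reflects the claims made in this thread (Intel keeping the first x8 intact on Z590, no CPU bifurcation at all on B560, AMD also allowing x4+x4+x4+x4 on B550/X570); it is not taken from any Intel or AMD datasheet, and the platform keys are made-up labels.

```python
# Allowed splits of the CPU x16, as claimed in this thread (not from datasheets).
# Each tuple lists the lane widths in order, starting from the first slot.
ALLOWED_SPLITS = {
    "intel_z590": {(16,), (8, 8), (8, 4, 4)},
    "intel_b560": {(16,)},  # no CPU bifurcation at all
    "amd_b550_x570": {(16,), (8, 8), (8, 4, 4), (4, 4, 4, 4)},
}


def is_supported(platform: str, split: tuple[int, ...]) -> bool:
    """Return True if the platform allows this split of the CPU x16."""
    return split in ALLOWED_SPLITS[platform]


def max_devices(platform: str) -> int:
    """Most devices the CPU x16 can be split into on this platform."""
    return max(len(split) for split in ALLOWED_SPLITS[platform])


if __name__ == "__main__":
    for platform in ALLOWED_SPLITS:
        print(f"{platform}: up to {max_devices(platform)} devices")
```

With Intel keeping the first x8 whole, x8+x4+x4 is the finest split, which is where the "only three devices" limit comes from.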

8+4+4 is actually quite common these days on z490, z590, b550 and x570 because m.2 is involved: many z490 and z590 mobos' m.2 slots get their lanes from the cpu.

strix e / f / a are all the same, they all support 8+4+4, the last x4 goes to m.2 slot.

strix e is unique as it also supports 8+8 on two pcie slots, with a total of six "ic"s responsible for that. strix f and strix a have only four.

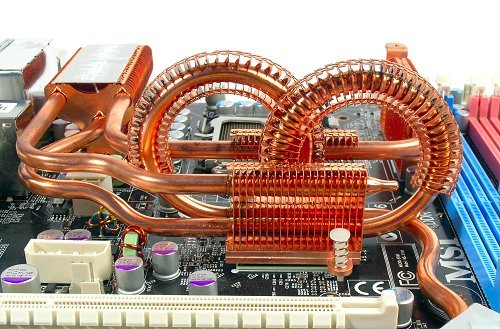

this type of ic is quite expensive, otherwise we would have seen them used more on entry b550 models to compensate for the only 10 lanes provided by the b550 chipset. of course, plx prices are mad.

pi3dbs16412, a popular one on x570 b550 z490, is called "multiplexer/demultiplexer switch" by diodes/pericom.

since each one is responsible for pcie x2, we generally call them pcie switches (switch / quick switch / pcie switch / mux demux).
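The chip counts mentioned above line up with simple arithmetic if one assumes each PI3DBS16412-class part is a 2:1 mux with four differential channels. A PCIe lane needs two differential pairs (one TX, one RX), so one chip covers two lanes, matching the "x2 each" figure. Which lanes each Strix board actually routes through its muxes is a guess here: eight switched lanes would need four chips and twelve would need six.

```python
import math

DIFF_PAIRS_PER_LANE = 2  # one TX pair and one RX pair per PCIe lane
CHANNELS_PER_CHIP = 4  # assumed: 4 differential channels per 2:1 mux chip


def mux_chips_needed(switched_lanes: int) -> int:
    """Number of 2:1 mux chips to re-route the given number of lanes.

    Counts a single 2:1 stage per lane; cascaded (e.g. 1:3) routing
    would need additional chips.
    """
    pairs = switched_lanes * DIFF_PAIRS_PER_LANE
    return math.ceil(pairs / CHANNELS_PER_CHIP)


if __name__ == "__main__":
    # Hypothetical lane counts: 8 switched lanes vs 12 switched lanes.
    for lanes in (8, 12):
        print(f"{lanes} switched lanes -> {mux_chips_needed(lanes)} chips")
```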

Seems like they've abandoned that marketing language since Z490 though - I can't find a single mention of "PCIe switches" or muxes or anything to that effect (nor any similar illustrations) on any of their Z590 or Z690 boards. To me that reads a lot like someone in PR trying to find a consumer-friendly word for a mux, asking an engineer "but what does it do?", and then latching onto the word "switch" from an explanation along the lines of "it switches between two signal paths". Though I might of course be overthinking this.
