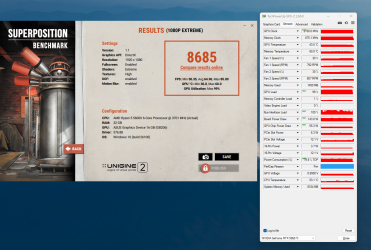

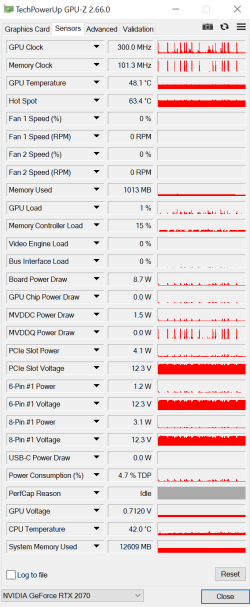

Hitting maximum wattage while gaming was normal for the 20, 30, 40, and many 50-series GPUs, but what you're seeing matches what I'm seeing, and it also shows up in reviews. Look at W1zzard's power consumption charts: power draw stays below the 180 W TBP in gaming loads and only reaches 180 W in ray tracing or FurMark.

V-Sync, G-Sync, and your monitor's refresh rate can also lower power draw if you're hitting the refresh-rate cap.

You haven't said how many fps you gain with the performance preset. If it's around 5-10%, that's expected, because the driver override disables the AA, AF, and texture LOD settings. If it's more than that, you might have a power management issue with your GPU and motherboard combination. At that point I would wipe the slate clean and do the following, in this order:

- Flash the latest BIOS for your motherboard, ideally one released after the 5060 Ti

- Enable "Above 4G Decoding" and "Resizable BAR" in the freshly reset BIOS

- Update to the latest AMD chipset driver for your A520 board

- Remove the current graphics driver using DDU in safe mode

- Install 576.88 WHQL

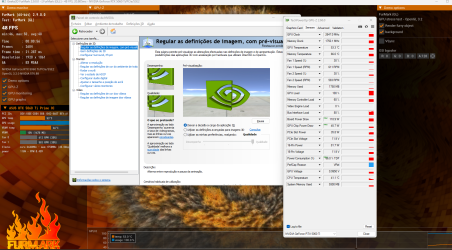

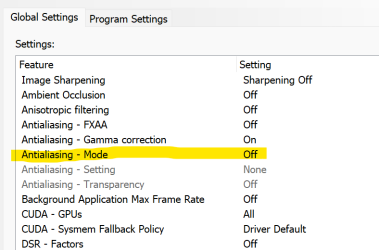

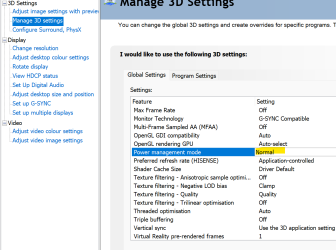

If you really want to test whether it's a power management problem, don't use the slider in the control panel, because it also changes a lot of other settings that affect performance, which means you can't isolate the variable you're trying to test. Instead, go to the advanced "Manage 3D settings" section and change just that one value:

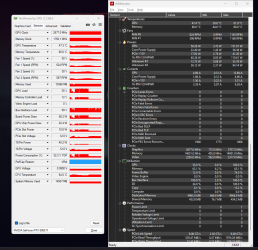

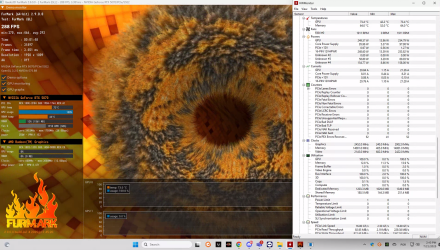

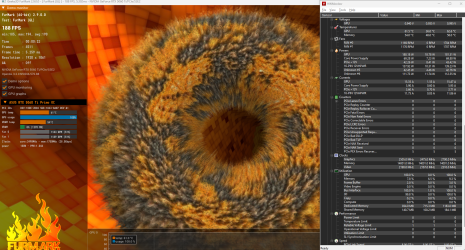
