- Joined

- Jul 28, 2008

- Messages

- 3,300 (0.53/day)

| System Name | Primary|Secondary|Poweredge r410|Dell XPS|SteamDeck |

|---|---|

| Processor | i7 11700k|i7 9700k|2 x E5620 |i5 5500U|Zen 2 4c/8t |

| Memory | 32GB DDR4|16GB DDR4|16GB DDR4|32GB ECC DDR3|8GB DDR4|16GB LPDDR5 |

| Video Card(s) | RX 7800xt|RX 6700xt |On-Board|On-Board|8 RDNA 2 CUs |

| Storage | 2TB m.2|512GB SSD+1TB SSD|2x256GBSSD 2x2TBGB|256GB sata|512GB nvme |

| Display(s) | 50" 4k TV | Dell 27" |22" |3.3"|7" |

| VR HMD | Samsung Odyssey+ | Oculus Quest 2 |

| Software | Windows 11 Pro|Windows 10 Pro|Windows 10 Home| Server 2012 r2|Windows 10 Pro |

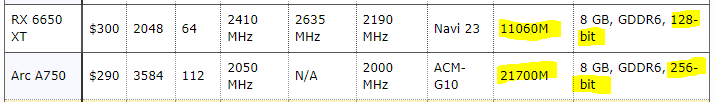

For where they're priced, it's not bad from a customer standpoint; from Intel's standpoint it's a complete fail.

It needs twice the memory width and transistors to achieve the same performance; that's absolutely terrible.

I would like nothing more than for them to stick around in the GPU space, but if they don't make a huuuuge improvement with the next gen (if we ever get another one), this is unsustainable.
