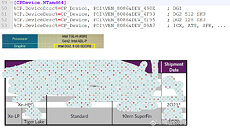

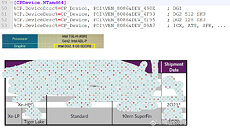

Intel's return to discrete gaming GPUs may have had a modest beginning with the Iris Xe MAX, but the company is looking to take a real stab at the gaming market. Driver code from the latest 100.9126 graphics driver and OEM data-sheets pieced together by VideoCardz reveal that its next attempt will be substantially bigger. Called "DG2" and based on Xe-HPG, a derivative of the Xe graphics architecture targeting gaming, the new GPU allegedly features 512 Xe execution units (EUs). To put this number into perspective, the Iris Xe MAX (DG1) features 96, as does the Iris Xe iGPU found in Intel's "Tiger Lake" mobile processors. The upcoming 11th Gen Core "Rocket Lake-S" is rumored to have an Xe-based iGPU with 48. Assuming comparable clock speeds, this alone amounts to a compute uplift of roughly 5x over DG1 and 10x over the "Rocket Lake-S" iGPU. At eight shaders per EU, 512 EUs convert to 4,096 programmable shaders.
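For anyone who wants to check the math, here is a minimal sketch of the EU arithmetic above. The EU counts are the ones quoted in the post (the "Rocket Lake-S" figure is a rumor), the 8-shaders-per-EU factor is an assumption inferred from the 512 EUs to 4,096 shaders conversion, and the helper names are made up for illustration. It also assumes equal clock speeds, so the uplift is simply the ratio of EU counts.

```python
# EU counts as quoted in the post; the Rocket Lake-S figure is a rumor.
EU_COUNTS = {
    "DG2 (Xe-HPG)": 512,
    "Iris Xe MAX (DG1)": 96,
    "Rocket Lake-S iGPU": 48,
}

# Assumption: 8 shaders per EU, inferred from 512 EUs -> 4,096 shaders.
SHADERS_PER_EU = 8


def shader_count(eus: int) -> int:
    """Programmable shaders for a given EU count (hypothetical helper)."""
    return eus * SHADERS_PER_EU


def uplift(new_eus: int, old_eus: int) -> float:
    """Relative compute at equal clocks, which reduces to the EU ratio."""
    return new_eus / old_eus


if __name__ == "__main__":
    dg2 = EU_COUNTS["DG2 (Xe-HPG)"]
    for name, eus in EU_COUNTS.items():
        print(f"{name}: {shader_count(eus)} shaders, "
              f"DG2 has {uplift(dg2, eus):.2f}x the EUs")
```

The exact ratios come out slightly above the rounded "5x" and "10x" figures, and real-world performance would also depend on clocks, memory bandwidth, and drivers, none of which are known here.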

A leaked OEM data-sheet referencing the DG2 also mentions a rather contemporary video memory setup, with 8 GB of GDDR6 memory. While the Iris Xe MAX is built on Intel's homebrew 10 nm SuperFin node, Intel announced that its Xe-HPG chips will use third-party foundries. With these specs, Intel potentially has a GPU to target competitive e-sports gaming (where the money is). Sponsorship of major e-sports clans could help with the popularity of Intel Graphics. With enough beans on the pole, Intel could finally invest in scaling up the architecture to even higher client graphics market segments. As for availability, VideoCardz predicts a launch roughly coinciding with that of Intel's "Tiger Lake-H" mobile processor series, possibly slated for mid-2021.

View at TechPowerUp Main Site
