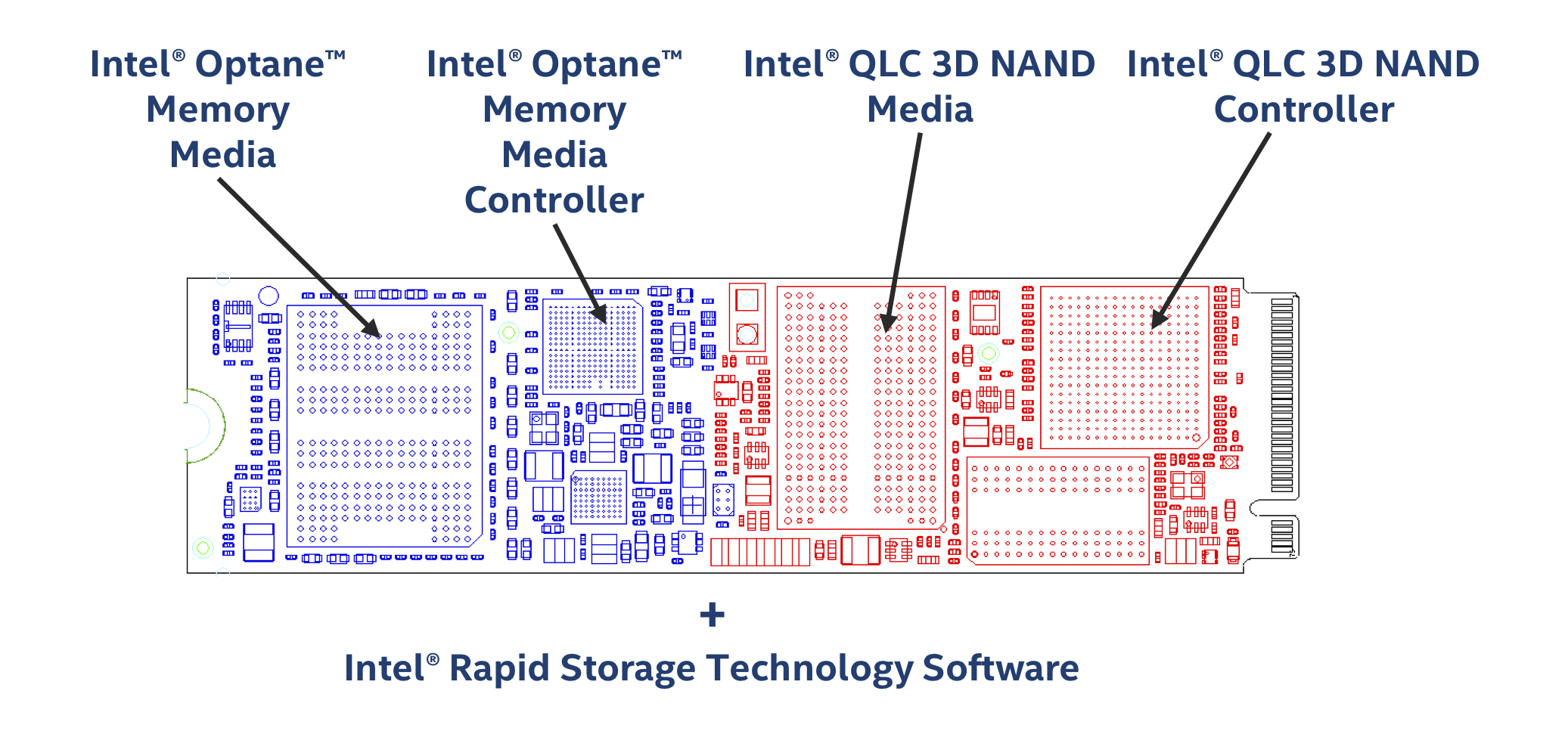

Intel today revealed details about Intel Optane memory H10 with solid-state storage - an innovative device that combines the superior responsiveness of Intel Optane technology with the storage capacity of Intel Quad Level Cell (QLC) 3D NAND technology in a single space-saver M.2 form factor. "Intel Optane memory H10 with solid-state storage features the unique combination of Intel Optane technology and Intel QLC 3D NAND - exemplifying our disruptive approach to memory and storage that unleashes the full power of Intel-connected platforms in a way no one else can provide," said Rob Crooke, Intel senior vice president and general manager of the Non-Volatile Memory Solutions Group.

Combining Intel Optane technology with Intel QLC 3D NAND technology on a single M.2 module enables Intel Optane memory expansion into thin and light notebooks and certain space-constrained desktop form factors - such as all-in-one PCs and mini PCs. The new product also offers a higher level of performance not met by traditional Triple Level Cell (TLC) 3D NAND SSDs today and eliminates the need for a secondary storage device.

Intel's leadership in computing infrastructure and design allows the company to utilize the value of the platform in its entirety (software, chipset, processor, memory and storage) and deliver that value to the customer. The combination of high-speed acceleration and large SSD storage capacity on a single drive will benefit everyday computer users, whether they use their systems to create, game or work. Compared to a standalone TLC 3D NAND SSD system, Intel Optane memory H10 with solid-state storage enables both faster access to frequently used applications and files and better responsiveness with background activity.

8th Generation Intel Core U-series mobile platforms featuring Intel Optane memory H10 with solid-state storage will be arriving through major OEMs starting this quarter. With these platforms, everyday users will be able to:


- Launch documents up to 2 times faster while multitasking.

- Launch games 60% faster while multitasking.

- Open media files up to 90% faster while multitasking.

The Intel Optane memory H10 with solid-state storage will come in the following capacities: 16GB (Intel Optane memory) + 256GB (storage); 32GB (Intel Optane memory) + 512GB (storage); and 32GB (Intel Optane memory) + 1TB (storage).

For more information, visit this page.

View at TechPowerUp Main Site