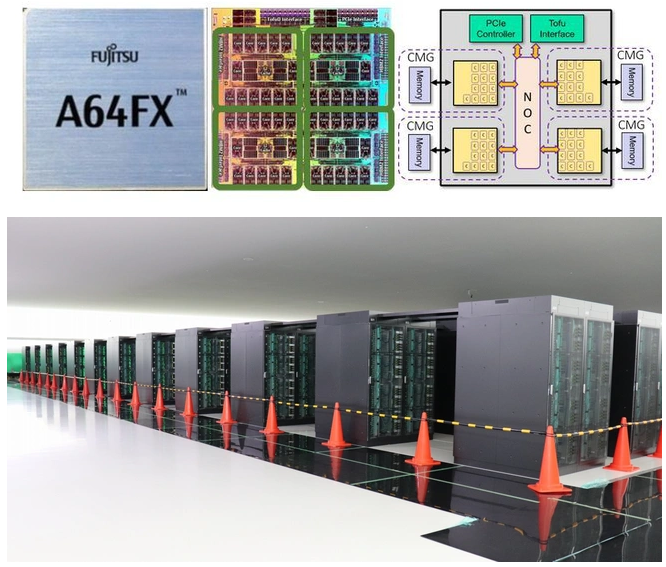

Japanese Riken gets supercomputer with almost 160,000 nodes with 48 Arm cores

Four hundred racks have been delivered to the Japanese research institute Riken for Fugaku, a supercomputer built on Arm-based chips. The system will have nearly 160,000 nodes, each with a 48-core Arm chip.

Installation of Fugaku started in early December, and Riken reports that all parts were delivered this week. The supercomputer is due to be ready for use in 2021, after which it will be used for research calculations, including research projects to combat Covid-19.

The entire system will consist of 158,976 nodes with A64FX processors from Fujitsu. These are Armv8.2-A SVE based processors with 48 cores for calculations and 2 or 4 cores for OS activity. The chips run at 2GHz, with a boost to 2.2GHz, and are combined with 32GB of HBM2 memory. The entire system should provide about half an exaflops of 64-bit double-precision floating-point performance.
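As a rough sanity check on the "half an exaflops" figure, here is a minimal back-of-the-envelope sketch using the node count, core count, clocks and memory size quoted above. The per-core throughput is an assumption that is not in the article: it supposes each A64FX compute core has two 512-bit SVE pipelines that can each issue one fused multiply-add per cycle, which gives 32 double-precision FLOP per cycle per core. The constant and function names are just for illustration.

```python
# Figures quoted in the article
NODES = 158_976
COMPUTE_CORES_PER_NODE = 48
BASE_CLOCK_HZ = 2.0e9
BOOST_CLOCK_HZ = 2.2e9
HBM2_GB_PER_NODE = 32

# Assumptions (not stated in the article): 512-bit SVE vectors and
# two FMA pipelines per core, each FMA counting as 2 FLOP per lane.
SVE_BITS = 512
FMA_PIPES_PER_CORE = 2
DOUBLES_PER_VECTOR = SVE_BITS // 64  # 8 double-precision lanes
FLOP_PER_CYCLE_PER_CORE = DOUBLES_PER_VECTOR * 2 * FMA_PIPES_PER_CORE  # 32


def peak_flops(clock_hz: float) -> float:
    """Theoretical double-precision peak of the whole system at a given clock."""
    return NODES * COMPUTE_CORES_PER_NODE * FLOP_PER_CYCLE_PER_CORE * clock_hz


total_cores = NODES * COMPUTE_CORES_PER_NODE
total_memory_gb = NODES * HBM2_GB_PER_NODE

print(f"Compute cores:   {total_cores:,}")
print(f"HBM2 in total:   {total_memory_gb:,} GB")
print(f"Peak at 2.0 GHz: {peak_flops(BASE_CLOCK_HZ) / 1e18:.3f} exaflops")
print(f"Peak at 2.2 GHz: {peak_flops(BOOST_CLOCK_HZ) / 1e18:.3f} exaflops")
```

Under those assumptions the system comes out at roughly 7.6 million compute cores, about 0.49 exaflops at 2GHz and about 0.54 exaflops at the 2.2GHz boost, which is consistent with the "half an exaflops" claim. This is theoretical peak only; sustained benchmark numbers will be lower.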

Fugaku is another name for Mount Fuji in Japan.

tweakers.net

Japanese Riken gets supercomputer with almost 160,000 nodes with 48 Arm cores

Four hundred racks have been delivered to the Japanese research institute Riken for Fugaku, a supercomputer with Arm-based chips. The system will have almost 160,000 nodes with 48-core Arm chips.