Oh, I see what you mean. What I'd like to see is that same graph showing the OC'd settings W1zzard ran with.

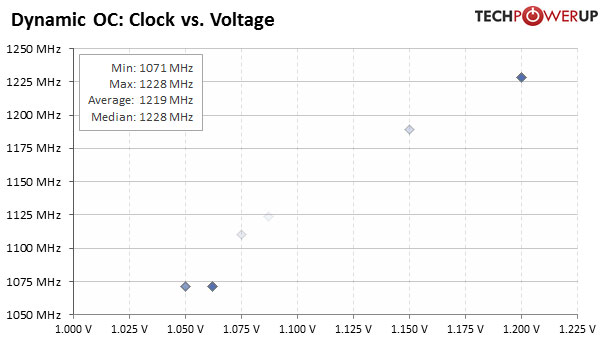

But what you posted explains little. I'd like someone to explain how, on the first EVGA chart, the max and the median can both be 1228 MHz.
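One possible explanation is purely statistical. If the card spends more than half of the run sitting at its top boost bin, the middle sample lands on that top bin, so the median equals the max. The sketch below uses made-up clock samples (not W1zzard's logged data) to show this.

```python
from statistics import median

# Hypothetical clock samples in MHz, NOT taken from W1zzard's chart:
# 71 of 101 samples sit at the top boost bin, the rest dip to lower bins.
samples = [1228] * 71 + [1215] * 20 + [1202] * 10

# With an odd sample count, median() returns the single middle sample,
# which falls inside the block of 1228 MHz readings.
print("max:", max(samples))
print("median:", median(samples))
```

Both values come out as 1228 because more than half of the samples share the top value. A chart reporting max and median alone cannot tell you how often the card drops below that bin.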

While those charts are interesting, they don't change the fact that the EVGA GTX 760 SC, overclocked to a 1220 MHz base clock (a 14% overclock) and 1840 MHz memory (a 23% overclock), only gains 16% in BF3. I think what we see here is that, when overclocked and with temperature headroom available, the dynamic boost raises the voltage to hold the highest plateau whether or not the render load actually requires it. It would be interesting to see the power it draws to get there factored in.

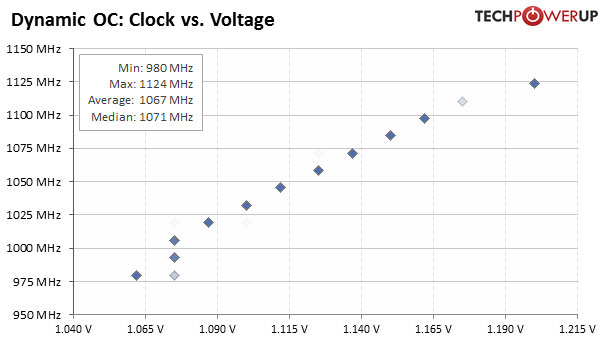

As W1zzard's charts indicate, "A light color means the clock / voltage combination is rarely used and a dark color means it's active a lot."

What I find odd on the reference card graph is that, from its 980 MHz base, it doesn't boost to the claimed 1033 MHz right away but runs below that more often, as shown by the dark diamonds. I thought you were supposed to get at least the advertised 1033 MHz as a minimum. It seems strange for Nvidia to advertise the reference card as 980/1033 MHz Boost when W1zzard's chart shows it fairly often running below 1033 MHz while averaging 1067 MHz Boost. I'd say they could logically advertise that as the average/nominal figure. The EVGA chart shows that at stock it holds higher clocks more often than W1zzard's 1220 MHz OC'd number from the previous page. Meanwhile, why does W1zzard's chart never show even a light diamond at the 1137 MHz GPU Boost the card is advertised at?
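The apparent contradiction of running below the advertised boost clock fairly often while still averaging above it is possible whenever the higher bins are used often enough to pull the mean up. The sketch below uses hypothetical clock bins and sample counts (not data from either review) to show an average above 1033 MHz even though a fifth of the samples sit below it.

```python
from statistics import mean

ADVERTISED_BOOST_MHZ = 1033  # reference GTX 760 boost clock quoted in the post

# Hypothetical clock samples in MHz, NOT taken from W1zzard's chart.
samples = [1006] * 20 + [1045] * 30 + [1084] * 30 + [1097] * 20

# Fraction of samples below the advertised boost clock.
share_below = sum(1 for s in samples if s < ADVERTISED_BOOST_MHZ) / len(samples)
# Arithmetic mean over all samples.
average = mean(samples)

print(f"share below {ADVERTISED_BOOST_MHZ} MHz: {share_below:.0%}")
print(f"average clock: {average:.1f} MHz")
```

Here 20% of the samples fall below 1033 MHz while the average works out to 1059.3 MHz, so an "average boost" figure and a "minimum boost" figure describe different things.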

So yes, I clearly don't get their Boost algorithms. Please point me to a good, comprehensive article that explains Nvidia's GPU Boost 2.0, so I/we can fully understand what you apparently already grasp completely. If you could spend some time explaining what I'm pointing out, that would be helpful. Posting some graphs and saying I don't understand is your prerogative. I've searched, but basically all I come up with are the marketing graphs Nvidia has provided, and those just skim the surface.

http://www.techpowerup.com/reviews/NVIDIA/GeForce_GTX_Titan/30.html

http://www.hardwarecanucks.com/foru...-geforce-gtx-titan-gk110-s-opening-act-4.html