- Joined: Feb 18, 2005
- Messages: 6,396 (0.87/day)
- Location: Ikenai borderline!

| System Name | Firelance. |
|---|---|
| Processor | Threadripper 3960X |
| Motherboard | ROG Strix TRX40-E Gaming |
| Cooling | IceGem 360 + 6x Arctic Cooling P12 |
| Memory | 8x 16GB Patriot Viper DDR4-3200 CL16 |
| Video Card(s) | MSI GeForce RTX 4060 Ti Ventus 2X OC |
| Storage | 2TB WD SN850X (boot), 4TB Crucial P3 (data) |
| Display(s) | Dell S3221QS(A) (32" 38x21 60Hz) + 2x AOC Q32E2N (32" 25x14 75Hz) |
| Case | Enthoo Pro II Server Edition (Closed Panel) + 6 fans |
| Power Supply | Fractal Design Ion+ 2 Platinum 760W |
| Mouse | Logitech G604 |
| Keyboard | Razer Pro Type Ultra |
| Software | Windows 10 Professional x64 |

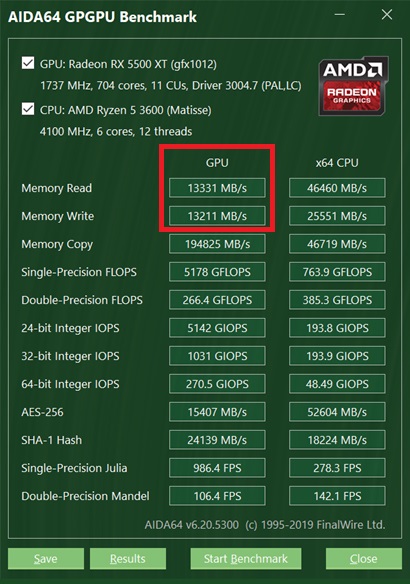

So AMD's lack of good delta compression finally bites them.

Inb4 people forget the GT 1030 suffers from the same problem.

People aren't expecting playable framerates on a 1030. Take your whataboutism elsewhere.