
Welcome to TechPowerUp Forums, Guest! Please check out our forum guidelines for info related to our community.


RTX 3080 Users Report Crashes to Desktop While Gaming

- Thread starter Raevenlord


legoliveira

New Member

- Joined

- Dec 3, 2018

- Messages

- 2 (0.00/day)

I think the problem is over-revving. When it goes past 7,000 revolutions per minute, the fuel system should cut ignition to prevent damaging the pistons and the valvetrain.

- Joined

- May 8, 2018

- Messages

- 1,609 (0.62/day)

- Location

- London, UK

Memory issues again?

The memory chips used are rated up to 95C, yet reportedly run up to around 120C.

I do believe it might be memory issues too.

- Joined

- Oct 22, 2014

- Messages

- 14,703 (3.76/day)

- Location

- Sunshine Coast Australia

| System Name | H7 Flow 2024 |
|---|---|
| Processor | AMD 5800X3D |
| Motherboard | Asus X570 Tough Gaming |
| Cooling | Custom liquid |
| Memory | 32 GB DDR4 |
| Video Card(s) | Intel ARC A750 |
| Storage | Crucial P5 Plus 2TB |
| Display(s) | AOC 24" Freesync 1 ms 75Hz |
| Mouse | Lenovo |
| Keyboard | Eweadn Mechanical |
| Software | W11 Pro 64 bit |

Off topic, but I hate rev limiters; it feels like someone pulled the handbrake when you exceed the limit.

Nanny state for engines.

- Joined

- Jul 27, 2020

- Messages

- 156 (0.09/day)

Is this from the couple hundred people that have actually got one?

- Joined

- Feb 26, 2016

- Messages

- 551 (0.16/day)

- Location

- Texas

| System Name | O-Clock |
|---|---|
| Processor | Intel Core i9-9900K @ 52x/49x 8c8t |
| Motherboard | ASUS Maximus XI Gene |
| Cooling | EK Quantum Velocity C+A, EK Quantum Vector C+A, CE 280, Monsta 280, GTS 280 all w/ A14 IP67 |
| Memory | 2x16GB G.Skill TridentZ @3900 MHz CL16 |
| Video Card(s) | EVGA RTX 2080 Ti XC Black |
| Storage | Samsung 983 ZET 960GB, 2x WD SN850X 4TB |
| Display(s) | Asus VG259QM |
| Case | Corsair 900D |
| Audio Device(s) | beyerdynamic DT 990 600Ω, Asus SupremeFX Hi-Fi 5.25", Elgato Wave 3 |
| Power Supply | EVGA 1600 T2 w/ A14 IP67 |
| Mouse | Logitech G403 Wireless (PMW3366) |
| Keyboard | Monsgeek M5W w/ Cherry MX Silent Black RGBs |
| Software | Windows 10 Pro 64 bit |
| Benchmark Scores | https://hwbot.org/search/submissions/permalink?userId=92615&cpuId=5773 |

I wonder how many dumbasses use 2x 8-pin connectors on a single power cable?

- Joined

- Aug 8, 2019

- Messages

- 430 (0.20/day)

| System Name | R2V2 *In Progress |
|---|---|
| Processor | Ryzen 7 2700 |
| Motherboard | Asrock X570 Taichi |
| Cooling | W2A... water to air |
| Memory | G.Skill Trident Z 3466 B-die |
| Video Card(s) | Radeon VII repaired and resurrected |
| Storage | Adata and Samsung NVME |
| Display(s) | Samsung LCD |
| Case | Some ThermalTake |
| Audio Device(s) | Asus Strix RAID DLX upgraded op amps |
| Power Supply | Seasonic Prime something or other |
| Software | Windows 10 Pro x64 |

The delusion of the article poster... Nvidia never has system crashing driver problems... Never...

*cough* I smell some serious fecal matter in the air... *cough*


- Joined

- Apr 10, 2020

- Messages

- 588 (0.31/day)

I use a Corsair HX 1000W Platinum PSU and, guess what, my MSI RTX 3080 Gaming X keeps crashing to the desktop when playing games. I could easily run 2x EVGA 1080 Ti and a 10700K overclocked to 5.1 GHz without a single crash, so it's surely not a PSU thing. Lowering the base clock via Afterburner to 1440 MHz and the boost clock to 1710 MHz seems to solve the problem in my case. It sucks paying a price premium over the FE and having to settle for the same clocks in the end.

ThornRose

New Member

- Joined

- Sep 25, 2020

- Messages

- 7 (0.00/day)

They're not rated for anything; Micron has not disclosed that info for their GDDR6X modules.

Though yes, with GDDR6X being new, it makes for a credible suspect.

They do publish operating temps for this memory, https://www.micron.com/products/ultra-bandwidth-solutions/gddr6x

- Voltage: 1.35V
- Op. Temp.: 0C to +95C

- Joined

- Dec 31, 2009

- Messages

- 19,412 (3.43/day)

| Benchmark Scores | Faster than yours... I'd bet on it. :) |
|---|---|

I wonder what the thermal shutdown limit is. I can't imagine it being much over that; however, I'm just guessing.

ThornRose

New Member

- Joined

- Sep 25, 2020

- Messages

- 7 (0.00/day)


95.2°C. The data sheet is interesting, but I have to admit I didn't read it all.

Saw a post about people getting an EVGA 3080 from Newegg in bags and not boxes lol

Saw that too. I'd be furious. I keep my boxes for almost all my hardware.

- Joined

- Sep 17, 2019

- Messages

- 538 (0.25/day)

Have no sympathy for gerbils buying this abomination of a video card.

YOU people bitched (and rightly so) about the AMD Vega series because of excessive heat and wattage issues: an increase in performance at the expense of an increase in wattage. As I've said before, I bought an EVGA 1070 SC because of the excessive wattage/heat issues of the Vega series. The 1070 is a great card that I still use in my backup computer.

NOW I get to bitch about how crappy these cards really are for the same damn thing: an increase in performance at the expense of an increase in wattage.

A 30% increase in performance for a 30% increase in wattage IS NOT AN ADVANCEMENT.

And you are not even getting the quality of silicon you should get for an $800 video card (add shipping and tax and most 3080 cards will hit this price) that will work nicely as a winter heater. Nvidia is hoarding the best for later cards.

The world does NOT run on 4K. Real 4K gaming for the masses is over 5 years off, as they haven't milked the 1080p market to death yet. Right now 1440p is the sweet spot for video games, and prices are starting to come down for everyone to enjoy the experience.

Just wait until the holiday season really begins to make your purchases. By then the newer 3080s should have their issues ironed out.

If AMD gives me a video card with performance similar to a 3080 and LESS wattage, I'll buy one. And if they get their act together, I'll buy from Nvidia as well.

This is not me taking sides. This is me looking at how the industry is giving less to the masses through marketing hype.
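The "30% more performance for 30% more power" complaint is really a statement about a ratio: the change in perf/W equals the performance ratio divided by the power ratio, minus one. A minimal Python sketch (the function name is made up for illustration), using the post's own 30%/30% figures plus one hypothetical contrast case:

```python
def perf_per_watt_change(perf_gain: float, power_gain: float) -> float:
    """Fractional change in perf/W between an old and a new card.

    Both arguments are fractional increases over the older card,
    e.g. 0.30 means +30%.
    """
    perf_ratio = 1.0 + perf_gain
    power_ratio = 1.0 + power_gain
    return perf_ratio / power_ratio - 1.0


# The post's scenario: +30% performance for +30% power -> no efficiency gain.
print(f"{perf_per_watt_change(0.30, 0.30):+.1%}")  # +0.0%

# Hypothetical contrast: +30% performance for only +10% power.
print(f"{perf_per_watt_change(0.30, 0.10):+.1%}")  # +18.2%
```

Equal percentage gains in performance and power cancel out, which is exactly the "not an advancement" the post describes; a genuine efficiency gain needs performance to grow faster than power.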


- Joined

- May 29, 2013

- Messages

- 72 (0.02/day)

Now that I think about it, knowing this, could it be why they included that error correction?

Nah. Error correction is for the 2-bit (PAM4) signal encoding of the memory transmission.

ThornRose

New Member

- Joined

- Sep 25, 2020

- Messages

- 7 (0.00/day)

Hello, it is Fermi all over again.

Bless you!

Ruru

S.T.A.R.S.

- Joined

- Dec 16, 2012

- Messages

- 14,038 (3.06/day)

- Location

- Jyväskylä, Finland

| System Name | 4K-gaming / console |
|---|---|
| Processor | 5800X @ PBO +200 / i5-8600K @ 4.6GHz |
| Motherboard | ROG Crosshair VII Hero / ROG Strix Z370-F |
| Cooling | Custom loop CPU+GPU / Custom loop CPU |
| Memory | 32GB DDR4-3466 / 16GB DDR4-3600 |
| Video Card(s) | Asus RTX 3080 TUF / Powercolor RX 6700 XT |
| Storage | 3TB SSDs + 3TB / 372GB SSDs + 750GB |
| Display(s) | 4K120 IPS + 4K60 IPS / 1080p projector @ 90" |
| Case | Corsair 4000D AF White / DeepCool CC560 WH |
| Audio Device(s) | Sony WH-CH720N / Hecate G1500 |
| Power Supply | EVGA G2 750W / Seasonic FX-750 |
| Mouse | MX518 remake / Ajazz i303 Pro |
| Keyboard | Roccat Vulcan 121 AIMO / Obinslab Anne 2 Pro |
| VR HMD | Oculus Rift CV1 |
| Software | Windows 11 Pro / Windows 11 Pro |
| Benchmark Scores | They run Crysis |

Used to run my 980 Ti with one cable, though I'm now using two, as was recommended.

- Joined

- May 22, 2015

- Messages

- 14,411 (3.90/day)

| Processor | Intel i5-12600k |
|---|---|
| Motherboard | Asus H670 TUF |
| Cooling | Arctic Freezer 34 |
| Memory | 2x16GB DDR4 3600 G.Skill Ripjaws V |
| Video Card(s) | EVGA GTX 1060 SC |
| Storage | 500GB Samsung 970 EVO, 500GB Samsung 850 EVO, 1TB Crucial MX300 and 2TB Crucial MX500 |
| Display(s) | Dell U3219Q + HP ZR24w |
| Case | Raijintek Thetis |
| Audio Device(s) | Audioquest Dragonfly Red :D |
| Power Supply | Seasonic 620W M12 |
| Mouse | Logitech G502 Proteus Core |
| Keyboard | G.Skill KM780R |
| Software | Arch Linux + Win10 |

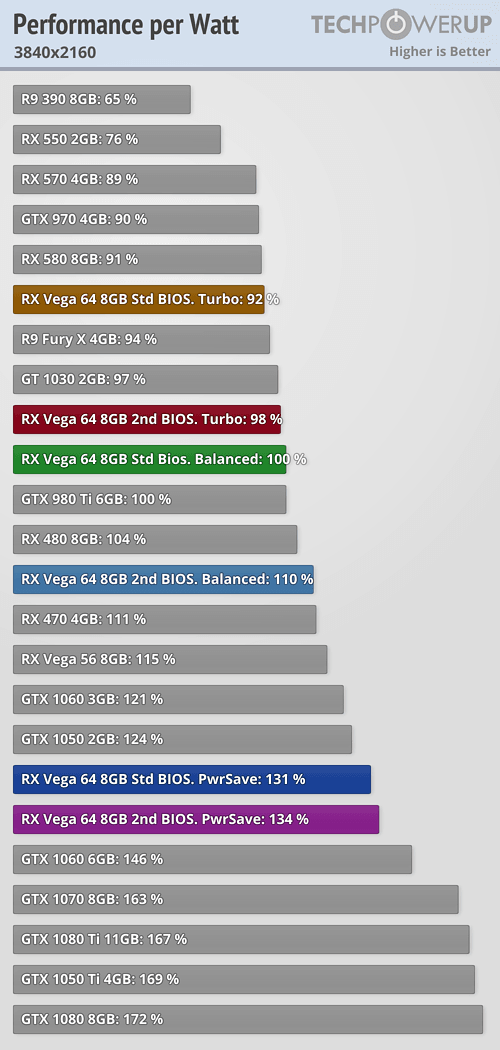

Vega didn't offer much performance for all that power draw (just a tad faster than a 1070, which had been available almost a year earlier).

On top of that, going from Vega 56 to Vega 64, perf/W took almost a 20% drop, making it obvious the architecture was at its limit. By comparison, going from the 3080 to the 3090, perf/W declines only 8%, and most of that is probably because of the added VRAM.

Personally, I'd like to see manufacturers put a 250W hard limit on their GPUs, but as long as people are buying these monstrosities (in my eyes), who am I to argue?

- Joined

- Nov 11, 2016

- Messages

- 3,692 (1.17/day)

| System Name | The de-ploughminator Mk-III |
|---|---|
| Processor | 9800X3D |
| Motherboard | Gigabyte X870E Aorus Master |
| Cooling | DeepCool AK620 |
| Memory | 2x32GB G.SKill 6400MT Cas32 |
| Video Card(s) | Asus Astral 5090 LC OC |
| Storage | 4TB Samsung 990 Pro |
| Display(s) | 48" LG OLED C4 |
| Case | Corsair 5000D Air |
| Audio Device(s) | KEF LSX II LT speakers + KEF KC62 Subwoofer |
| Power Supply | Corsair HX1200 |
| Mouse | Razer Death Adder v3 |
| Keyboard | Razer Huntsman V3 Pro TKL |
| Software | win11 |


While you are quick to complain, Ampere still has higher perf/watt than Turing. Talking about high power consumption alone is pointless because it is configurable: for example, a thin-and-light laptop can have a 2080 Super configured with a 90W TGP and still be faster than a desktop 5600 XT with a 180W TGP.

That is why Fermi and Vega were failures: not because they were power hungry, but because they were simply too inefficient.

Also, the perf/watt figures for the 3080 at 1080p/1440p are not correct, as W1zzard said himself: power consumption is measured while gaming at 4K, and at 1080p/1440p the 3080 does not draw as much power.
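The measurement caveat is easy to see with arithmetic: perf/W is only meaningful when frame rate and power come from the same test. A minimal Python sketch with made-up numbers (none of these figures are from TechPowerUp's review; they are hypothetical) shows how dividing a 1080p frame rate by power logged at 4K understates efficiency:

```python
def perf_per_watt(fps: float, power_w: float) -> float:
    """Frames per second delivered per watt of board power."""
    return fps / power_w


# Hypothetical figures for one card, for illustration only.
fps_1080p = 200.0
power_at_4k_w = 320.0     # board power logged during a 4K run
power_at_1080p_w = 260.0  # board power logged during the 1080p run

mixed = perf_per_watt(fps_1080p, power_at_4k_w)       # 1080p fps / 4K power
matched = perf_per_watt(fps_1080p, power_at_1080p_w)  # same test for both
print(f"mixed:   {mixed:.3f} fps/W")    # mixed:   0.625 fps/W
print(f"matched: {matched:.3f} fps/W")  # matched: 0.769 fps/W
```

Whenever a card draws less power at the lower resolution, the mixed figure comes out lower than the matched one.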

At the end of the day, the first thing most people care about is perf/dollar, followed by perf/watt, then additional features. With Ampere, Nvidia has placed the most emphasis on perf/dollar while getting a little efficiency uplift on the side.

I'm sure Nvidia specifically chose Samsung 8N for this reason; had gaming Ampere been produced on TSMC 7+, it would have been much more efficient but pricier. Yes, AMD has the chance to achieve slightly higher efficiency with RDNA2; however, they won't be competitive in perf/dollar without drowning their profit margins (which are already really low to begin with).


- Joined

- Jan 8, 2017

- Messages

- 9,847 (3.18/day)

| System Name | Good enough |
|---|---|
| Processor | AMD Ryzen R9 7900 - Alphacool Eisblock XPX Aurora Edge |
| Motherboard | ASRock B650 Pro RS |
| Cooling | 2x 360mm NexXxoS ST30 X-Flow, 1x 360mm NexXxoS ST30, 1x 240mm NexXxoS ST30 |
| Memory | 32GB - FURY Beast RGB 5600 MHz |
| Video Card(s) | Sapphire RX 7900 XT - Alphacool Eisblock Aurora |
| Storage | 1x Kingston KC3000 1TB, 1x Kingston A2000 1TB, 1x Samsung 850 EVO 250GB, 1x Samsung 860 EVO 500GB |
| Display(s) | LG UltraGear 32GN650-B + 4K Samsung TV |
| Case | Phanteks NV7 |
| Power Supply | GPS-750C |

Performance per watt is possibly the least impressive metric in a new GPU architecture. It would take an unprecedented amount of bad design to conceive a GPU with worse perf/watt. Vega had better performance per watt than Polaris as well; that's why they put it in APUs.

So when NVIDIA proudly states that their new GPUs have higher performance per watt, that basically means nothing. What's impressive is when you get higher perf/watt and lower power.
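The bar set in this post (higher perf/watt *and* lower absolute power) can be written as a two-part check. A minimal Python sketch; the class, the function, and all numbers are hypothetical and only illustrate that a card can improve perf/W while still drawing more power:

```python
from dataclasses import dataclass


@dataclass
class GpuResult:
    """Hypothetical benchmark summary for one card in a fixed test."""
    name: str
    fps: float      # average frames per second
    power_w: float  # average board power in watts during the same test

    @property
    def perf_per_watt(self) -> float:
        return self.fps / self.power_w


def is_impressive_upgrade(old: GpuResult, new: GpuResult) -> bool:
    """True only if perf/W goes up AND absolute power goes down."""
    return new.perf_per_watt > old.perf_per_watt and new.power_w < old.power_w


old = GpuResult("old card", fps=100.0, power_w=250.0)
new = GpuResult("new card", fps=130.0, power_w=320.0)
print(new.perf_per_watt > old.perf_per_watt)  # True
print(is_impressive_upgrade(old, new))        # False
```

Here the new card edges out the old one on perf/W (0.406 vs 0.400 fps/W) but draws 70 W more, so it fails the stricter test.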


- Joined

- Nov 11, 2016

- Messages

- 3,692 (1.17/day)


Just wait for laptops with Ampere GPUs, then; a 3080 with a 90W TGP would dominate any other mobile chip.

Mobile Navi is pretty much defunct.

- Joined

- Jan 8, 2017

- Messages

- 9,847 (3.18/day)


Dream on; a 90W 3080 would probably be no faster than, or even slower than, a mobile 2080.

- Joined

- Nov 11, 2016

- Messages

- 3,692 (1.17/day)


Wanna bet?

- Joined

- Jan 8, 2017

- Messages

- 9,847 (3.18/day)


You know what's funny? The RTX 3000 mobile name is already used for mobile Quadros. They must have known back then how great Ampere would be for mobile.