- Joined: Aug 6, 2017
- Messages: 7,412 (2.60/day)
- Location: Poland

| System Name | Purple rain |

|---|---|

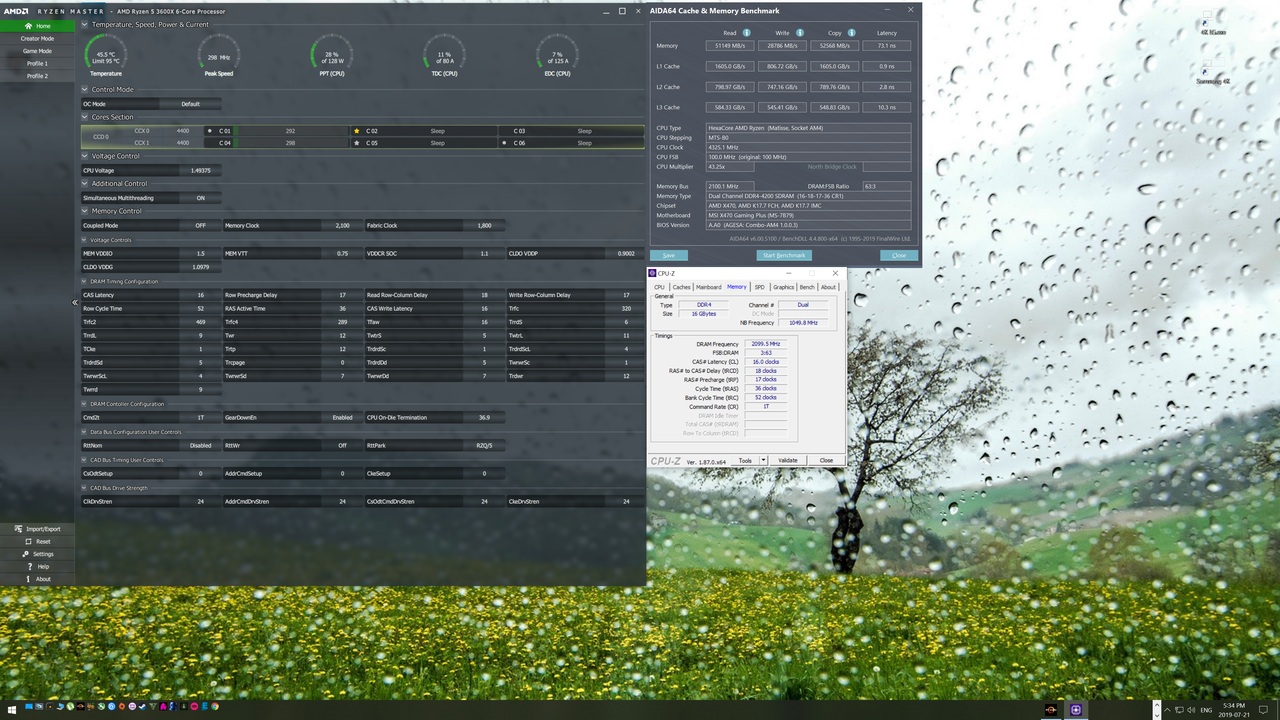

| Processor | 10.5 thousand 4.2G 1.1v |

| Motherboard | Zee 490 Aorus Elite |

| Cooling | Noctua D15S |

| Memory | 16GB 4133 CL16-16-16-31 Viper Steel |

| Video Card(s) | RTX 2070 Super Gaming X Trio |

| Storage | SU900 128,8200Pro 1TB,850 Pro 512+256+256,860 Evo 500,XPG950 480, Skyhawk 2TB |

| Display(s) | Acer XB241YU+Dell S2716DG |

| Case | P600S Silent w. Alpenfohn wing boost 3 ARGBT+ fans |

| Audio Device(s) | K612 Pro w. FiiO E10k DAC,W830BT wireless |

| Power Supply | Superflower Leadex Gold 850W |

| Mouse | G903 lightspeed+powerplay,G403 wireless + Steelseries DeX + Roccat rest |

| Keyboard | HyperX Alloy SilverSpeed (w.HyperX wrist rest),Razer Deathstalker |

| Software | Windows 10 |

| Benchmark Scores | A LOT |

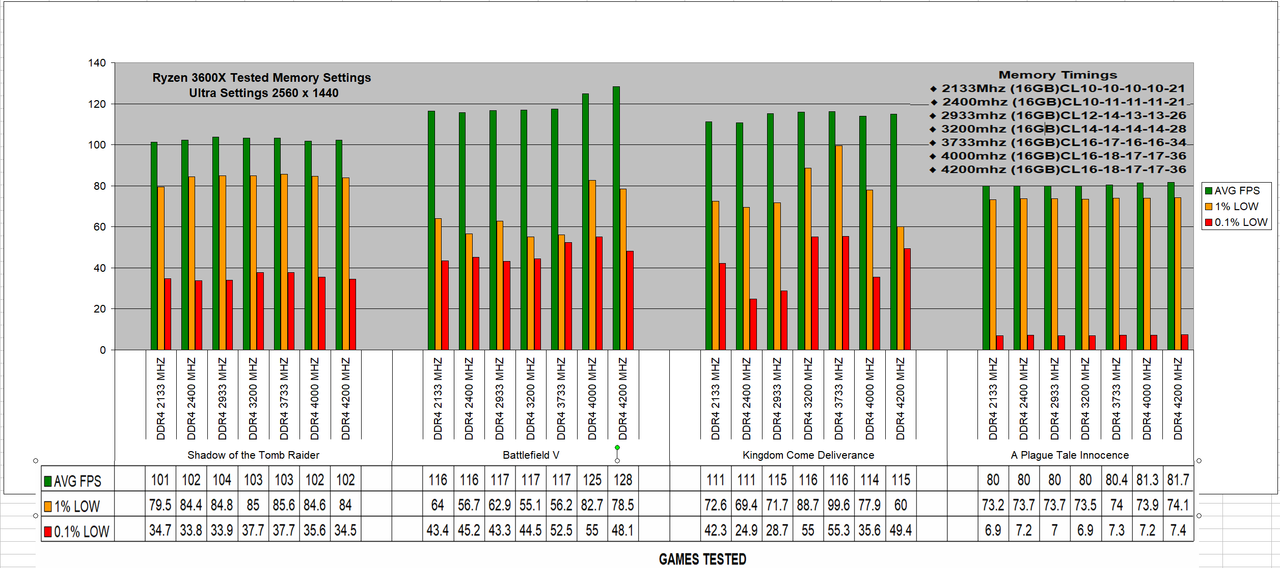

Memory test for AMD Ryzen processors - Clock speeds vs latencies | PurePC.pl

Memory test for AMD Ryzen processors - Clock speeds vs latencies (page 17). A test of DDR4 RAM with the AMD Ryzen 5 3600 processor. A comparison of how clock speed and latency affect performance in games and applications. Which RAM settings are best?

This is a really good read for anyone who is still undecided between 3200 CL14 and 3600 CL16.

In these tests 3600 CL16 is always faster: by 1-2% in some games and by 5-7% in others.

All tests were done on single-rank B-die memory.
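
For anyone wondering why the two kits end up so close, here is a rough back-of-the-envelope sketch (my own arithmetic, not taken from the PurePC article). It converts CAS latency from cycles to nanoseconds and estimates theoretical peak bandwidth. The function names are made up for illustration, and the bandwidth figure assumes a dual-channel setup with a 64-bit bus per channel, which is an assumption on my part rather than something stated in the test.

```python
def cas_latency_ns(data_rate_mts: float, cas_cycles: int) -> float:
    """Absolute CAS latency in nanoseconds.

    DDR transfers data twice per clock, so the memory clock in MHz is
    data_rate / 2 and one clock cycle lasts 2000 / data_rate nanoseconds.
    """
    return cas_cycles * 2000.0 / data_rate_mts


def peak_bandwidth_gbs(data_rate_mts: float, channels: int = 2,
                       bus_bytes: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s.

    Assumes `channels` channels of 64 bits (8 bytes) each; dual channel
    is an assumption here, not a detail from the article.
    """
    return data_rate_mts * bus_bytes * channels / 1000.0


# The two kits being compared in the post: (data rate in MT/s, CAS cycles).
kits = {"3200 CL14": (3200, 14), "3600 CL16": (3600, 16)}

for name, (rate, cl) in kits.items():
    print(f"{name}: {cas_latency_ns(rate, cl):.2f} ns CAS, "
          f"{peak_bandwidth_gbs(rate):.1f} GB/s peak")
```

By this math the CAS latency in nanoseconds is nearly the same for both kits (roughly 8.75 ns vs 8.89 ns), while 3600 has about 12.5% more theoretical bandwidth. This only looks at CL and raw transfer rate; the remaining timings matter too, so treat it as a rough guide and not an explanation of the measured results.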