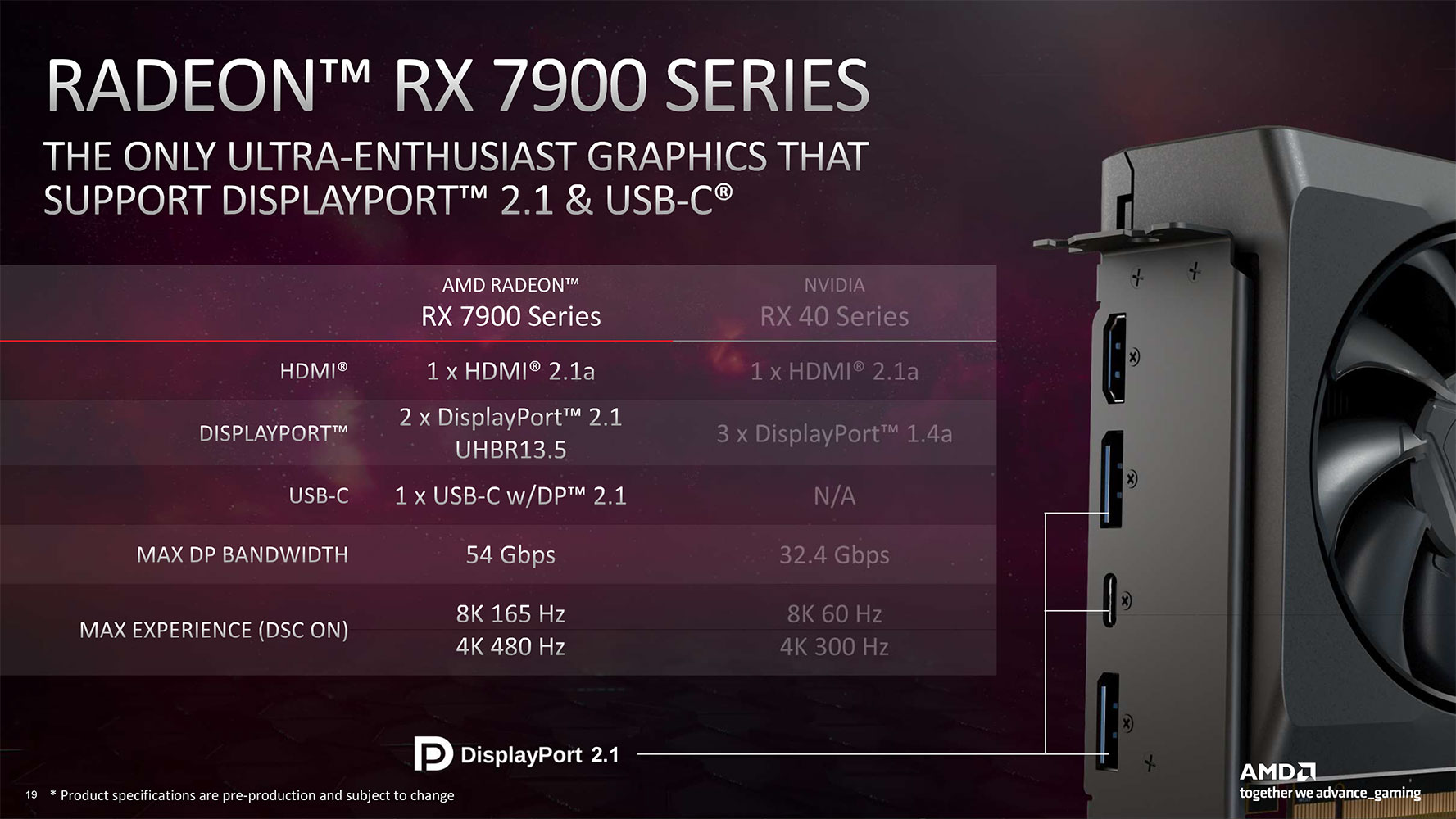

People have been duped by AMD, whether AMD intended it or not. So many people say they didn't realize that RDNA3 cards don't support the maximum DP 2.1 bandwidth (UHBR 20), so obviously AMD wasn't being very clear. The fact that the cards only support UHBR 13.5 was buried in the footnotes of the RDNA3 presentation, and all most people saw and heard was "DisplayPort 2.1." That seems pretty deceptive to me.

Nvidia is obviously deceptive all the time, but in this case, DP 2.1 really isn't necessary because of DSC. You need DSC with or without DP 2.1 in order to get the monitor's full refresh rate, so it's really the same either way. And DSC genuinely is a "visually lossless" compression algorithm. Even if you've studied its effect on image quality and can compare images side by side, I promise you will have an exceptionally hard time telling them apart. I've read the white paper and looked at the example images, and it really is borderline impossible to tell which is which, even when you're trying your hardest. So yeah, if your expected use case for DP 2.1 is to avoid DSC, then a) you probably won't avoid it, and b) avoiding it wouldn't be necessary anyway.
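
If you want to sanity-check the "you need DSC either way" point yourself, here's a rough back-of-the-envelope sketch. The monitor mode in it (7680x2160 at 240 Hz, 10 bits per channel) is purely my assumption for illustration, since I haven't named a specific monitor, and the function names are made up. It only counts active pixels and the link's channel coding (8b/10b for DP 1.4, 128b/132b for DP 2.1), so real usable rates are slightly lower and real requirements slightly higher than what it prints.

```python
# Raw 4-lane link rates in Gbit/s, paired with channel-coding efficiency.
LINKS = {
    "DP 1.4 HBR3": (32.4, 8 / 10),          # 8b/10b coding
    "DP 2.1 UHBR10": (40.0, 128 / 132),     # 128b/132b coding
    "DP 2.1 UHBR13.5": (54.0, 128 / 132),
    "DP 2.1 UHBR20": (80.0, 128 / 132),
}


def payload_gbps(link):
    """Approximate payload rate: raw rate times coding efficiency only."""
    raw_gbps, efficiency = LINKS[link]
    return raw_gbps * efficiency


def uncompressed_gbps(width, height, refresh_hz, bits_per_pixel):
    """Lower bound on the video data rate: active pixels only, no blanking."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


def needs_dsc(mode, link):
    """True if the uncompressed mode cannot fit in the link's payload rate."""
    return uncompressed_gbps(*mode) > payload_gbps(link)


# Hypothetical monitor mode (an assumption, not taken from the post):
# width, height, refresh rate in Hz, bits per pixel (3 channels x 10 bits).
MODE = (7680, 2160, 240, 30)

if __name__ == "__main__":
    print(f"uncompressed: {uncompressed_gbps(*MODE):.1f} Gbit/s")
    for link in LINKS:
        verdict = "needs DSC" if needs_dsc(MODE, link) else "fits uncompressed"
        print(f"{link}: {payload_gbps(link):.1f} Gbit/s payload, {verdict}")
```

For that assumed mode, none of the listed links has enough payload to carry the signal uncompressed, so DSC is in the picture whether the card does DP 1.4 or DP 2.1. Swap in your own monitor's numbers in `MODE` to see where it lands.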