
| System Name | Da Bisst |
|---|---|
| Processor | Ryzen 5800X |
| Motherboard | Gigabyte B550 AORUS PRO |
| Cooling | 2x280mm + 1x120mm radiators, 4x Arctic P14 PWM, 2x Noctua P12, TechN CPU block, Alphacool Eisblock Aurora GPU block |
| Memory | Corsair Vengeance RGB 32 GB DDR4 3800 MHz C16 (tuned) |
| Video Card(s) | PowerColor AMD Radeon RX 6800 XT |
| Storage | Samsung 970 Evo Plus 512GB |
| Display(s) | BenQ EX3501R |
| Case | SilentiumPC Signum SG7V EVO TG ARGB |
| Audio Device(s) | Onboard |
| Power Supply | Chieftec Proton Series 1000W (BDF-1000C) |
| Mouse | Mionix Castor |

Hello World!

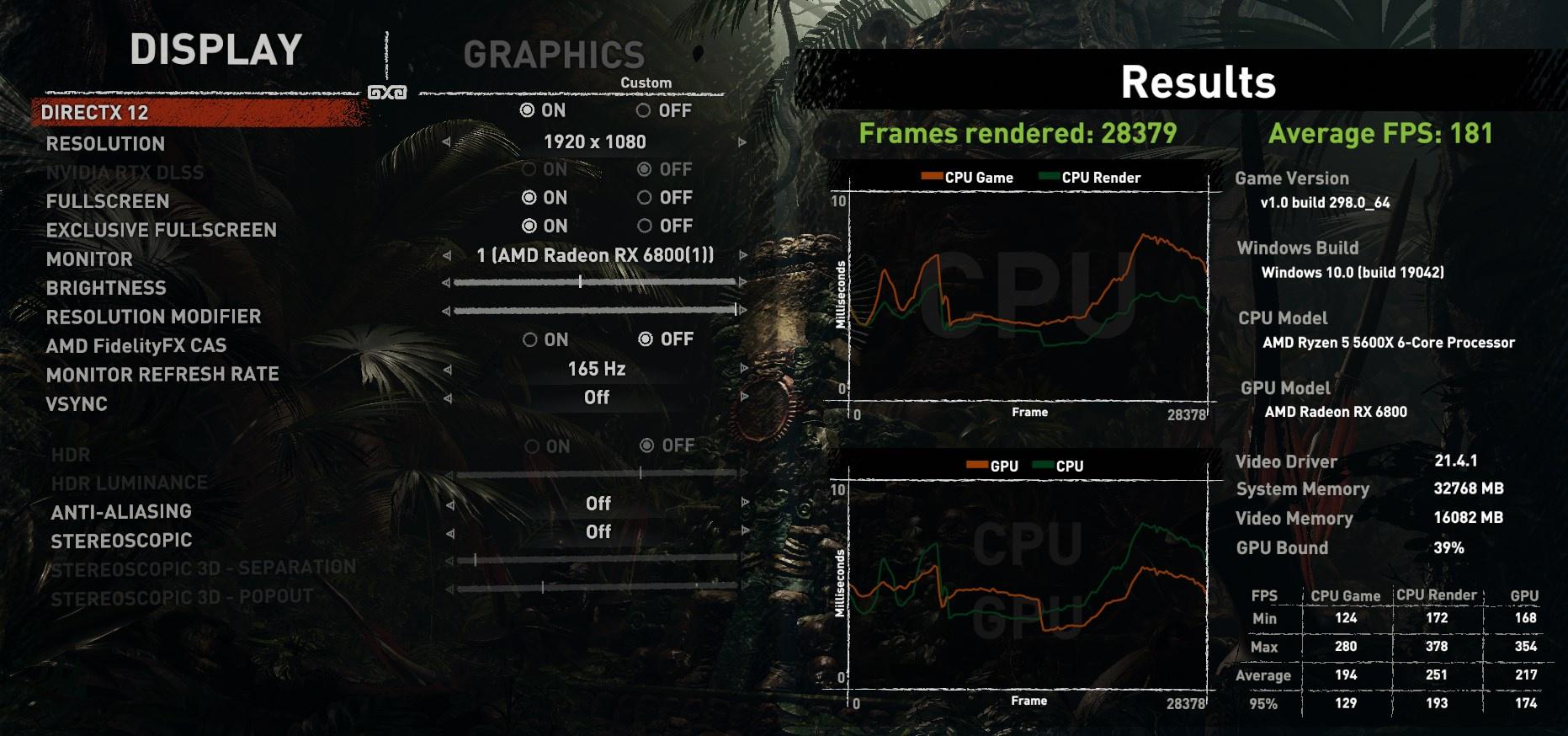

Shadow of the Tomb Raider is a particularly CPU-intensive game; more precisely, CPU performance has a major impact on its FPS. It would therefore be interesting to see how our setups handle it.

For that, let's settle on these specific in-game settings:

- Fullscreen
- Exclusive Fullscreen
- DirectX 12
- DLSS off
- V-Sync off
- Resolution: 1920 × 1080
- Anti-aliasing off
- Graphics settings: Lowest profile (please leave it at Lowest, without any changes, for the purpose of this test)

Once a month, we will gather and centralize the CPU and GPU scores and try to find a correlation between them.

Here is the first one:

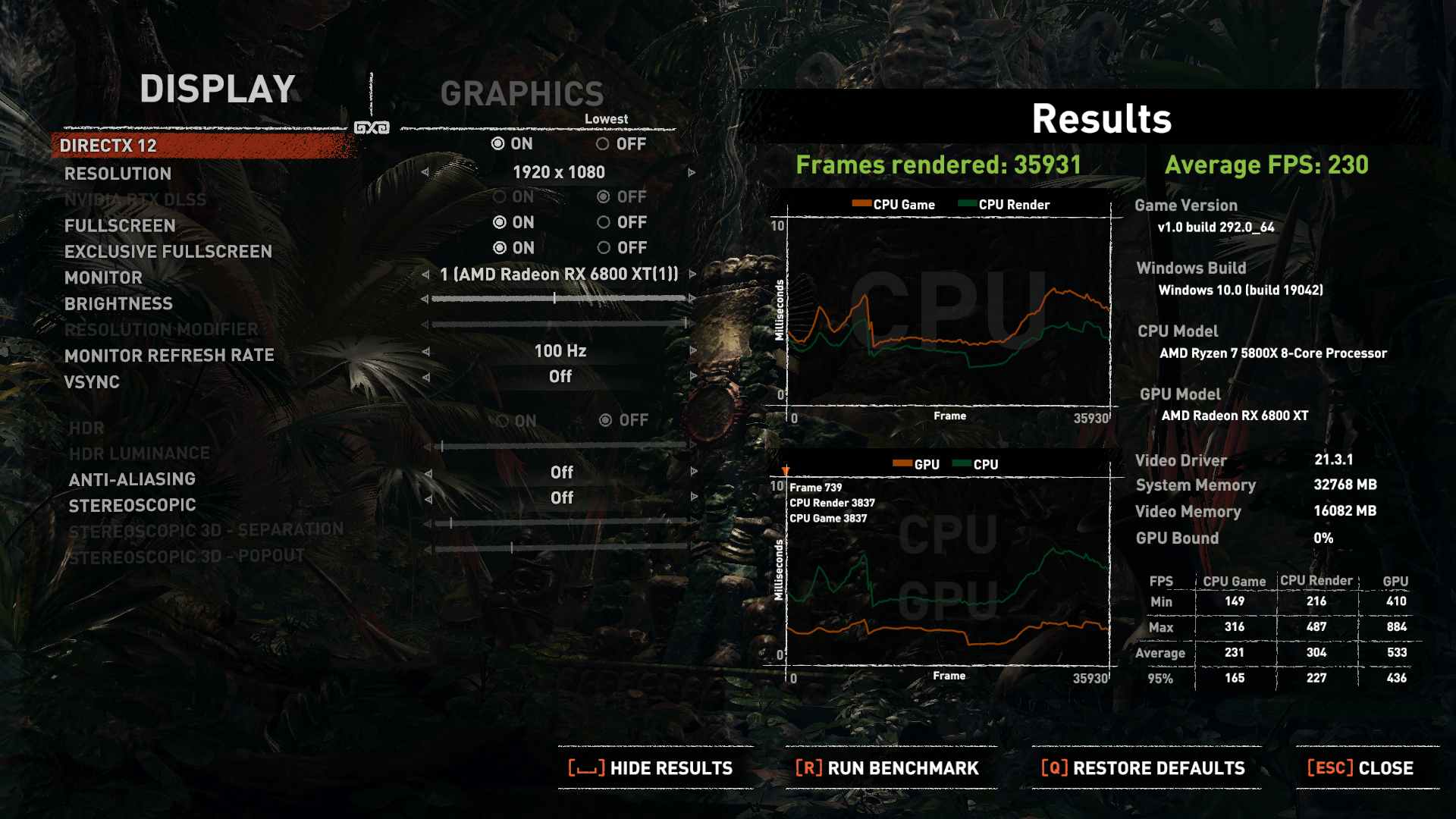

