Another news flash: check out the Steam Hardware Survey and look at AMD's 15% market share (including their mobile GPUs and APUs in general, which probably account for at least 5%).

You've spent about 1.5 pages now talking about numerous upscaling techs while the topic is not entirely that. We get it, you love these techs, good on you. Others don't. Let's move on? This is also not a dick comparison between AMD and Nvidia, which you seem adamant on making it.

Here's a little news flash

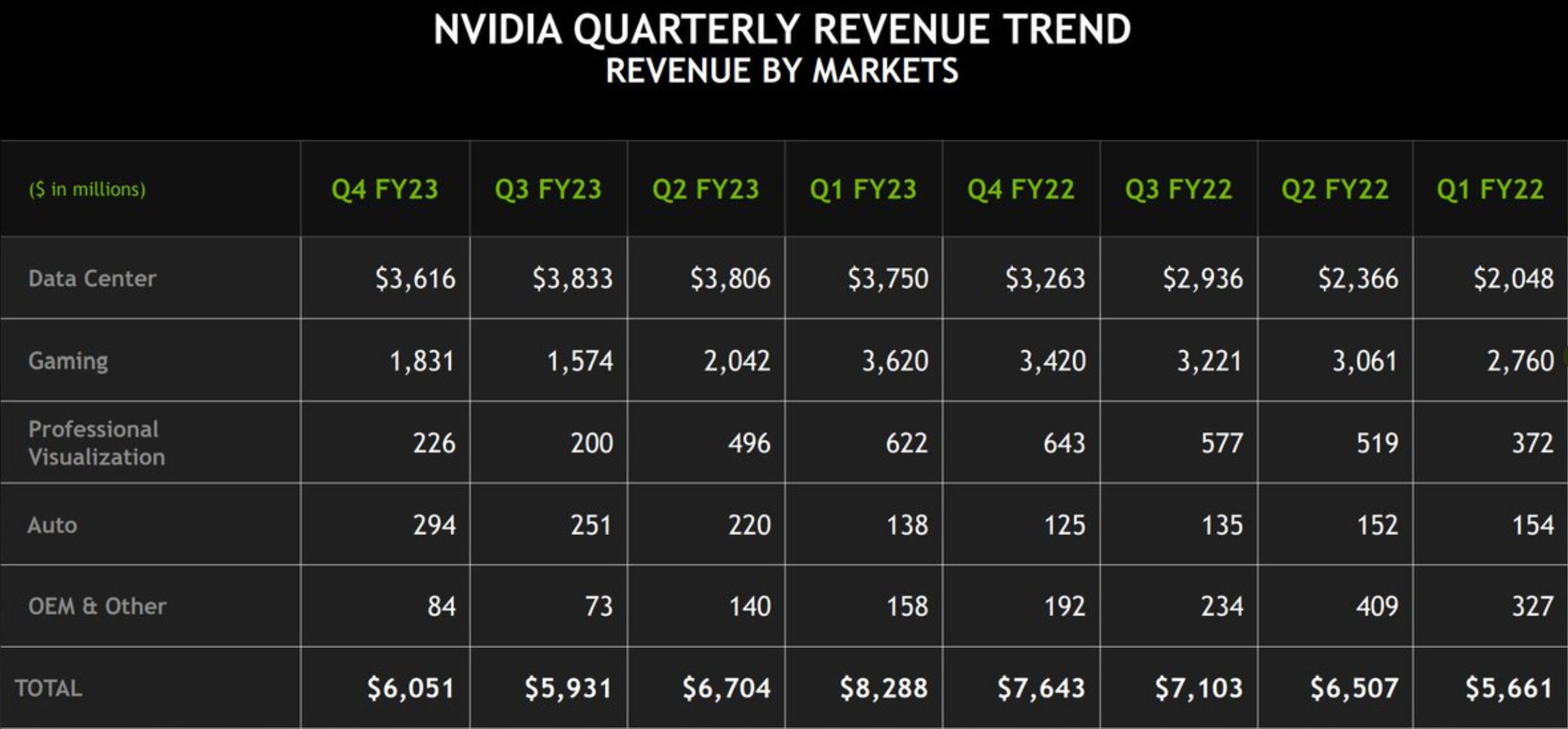

AMD GPU Sales Not That Far Behind NVIDIA's in Revenue Terms

While AMD Radeon PC discrete GPUs have a lot of catching up to do against NVIDIA GeForce products in terms of market-share, the two companies' quarterly revenue figures paint a very different picture. For Q4 2022, AMD pushed $1.644 billion in GPU products encompassing all its markets, namely the... (www.techpowerup.com)

Since AMD supplies the chips for the two consoles, they ship roughly as many gaming GPUs as Nvidia, or more.

And in terms of revenue, consider the fact that AMD doesn't have a service to match GeForce NOW either.

The top 25 GPUs on Steam are almost entirely Nvidia. Nothing newly released from AMD is present. Zero 6000 series. Zero 7000 series. Keep believing they sell well if you want. They don't. Even AMD knows this. This is why they are skipping high-end GPUs in RDNA4. 99% of AMD buyers are buying their low-end to mid-range chips. As usual.

The best-selling AMD cards have always been the dirt cheap ones. They need another RX480/470/580/570 soon, and this is what RDNA4 will focus on.