- Joined: Oct 9, 2007
- Messages: 47,670 (7.43/day)
- Location: Dublin, Ireland

| System Name | RBMK-1000 |
|---|---|
| Processor | AMD Ryzen 7 5700G |
| Motherboard | Gigabyte B550 AORUS Elite V2 |
| Cooling | DeepCool Gammax L240 V2 |
| Memory | 2x 16GB DDR4-3200 |
| Video Card(s) | Galax RTX 4070 Ti EX |
| Storage | Samsung 990 1TB |
| Display(s) | BenQ 1440p 60 Hz 27-inch |
| Case | Corsair Carbide 100R |
| Audio Device(s) | ASUS SupremeFX S1220A |
| Power Supply | Cooler Master MWE Gold 650W |
| Mouse | ASUS ROG Strix Impact |
| Keyboard | Gamdias Hermes E2 |
| Software | Windows 11 Pro |

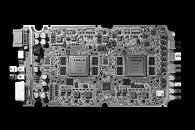

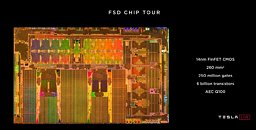

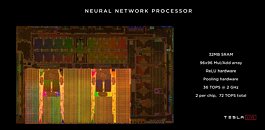

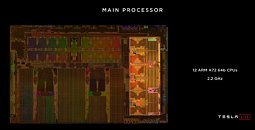

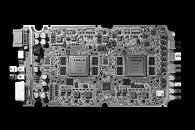

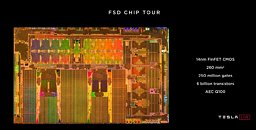

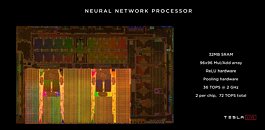

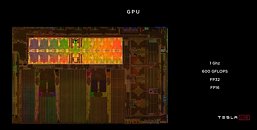

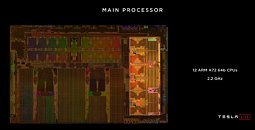

Tesla announced that it has developed its own self-driving car AI processor to run the company's Autopilot feature across its product line. Until now, the company relied on NVIDIA's DRIVE PX 2 platform for Autopilot. Called the Tesla FSD Chip (for "full self-driving"), the processor has been deployed in the latest batches of Model S and Model X since March 2019, and the company looks to expand it to its popular Model 3. The Tesla FSD Chip is a custom ASIC with 250 million gates and 6 billion transistors, crammed into a 260 mm² die built on the 14 nm FinFET process at a Samsung Electronics fab in Texas. The chip packs 32 MB of SRAM cache, a 96x96 mul/add array, and a cumulative performance of 72 TOPS per die at its rated clock speed of 2.00 GHz.
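As a rough sanity check on the 72 TOPS figure, the peak throughput of a multiply/add array can be estimated from its dimensions and clock speed. The sketch below is a back-of-the-envelope calculation, not Tesla's own methodology. It assumes each multiply/add counts as two operations, and that the die carries two such arrays; neither assumption is stated in the announcement, and the function and constant names are made up for illustration.

```python
# Back-of-the-envelope peak throughput of a multiply/add array.
# Figures taken from the announcement: 96x96 array, 2.00 GHz clock.
ARRAY_ROWS = 96
ARRAY_COLS = 96
CLOCK_HZ = 2.00e9

# Assumptions (NOT from the announcement):
OPS_PER_MAC = 2      # one multiply plus one add counted as two operations
ARRAYS_PER_DIE = 2   # number of mul/add arrays assumed per die


def peak_tops(rows, cols, clock_hz, ops_per_mac=OPS_PER_MAC, arrays=1):
    """Theoretical peak in tera-operations per second (TOPS)."""
    return rows * cols * ops_per_mac * clock_hz * arrays / 1e12


per_array = peak_tops(ARRAY_ROWS, ARRAY_COLS, CLOCK_HZ)
per_die = peak_tops(ARRAY_ROWS, ARRAY_COLS, CLOCK_HZ, arrays=ARRAYS_PER_DIE)

print(f"per array: {per_array:.1f} TOPS")
print(f"per die:   {per_die:.1f} TOPS")
```

Under these assumptions, a single array peaks at roughly 36.9 TOPS and two arrays at roughly 73.7 TOPS, which is in the same ballpark as the quoted 72 TOPS per die. With only one array, the quoted figure would be hard to reach at 2.00 GHz.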

A typical Autopilot logic board uses two of these chips. Tesla claims that the chip offers "21 times" the performance of the NVIDIA chip it's replacing. Elon Musk referred to the FSD Chip as "the best chip in the world," and not just on the basis of its huge performance uplift over the previous solution. "Any part of this could fail, and the car will keep driving. The probability of this computer failing is substantially lower than someone losing consciousness - at least an order of magnitude," he added.

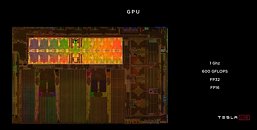

Slides with on-die details follow.

View at TechPowerUp Main Site
