Wednesday, September 7th 2011

MSI Calls Bluff on Gigabyte's PCIe Gen 3 Ready Claim

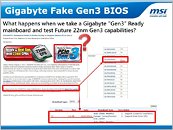

In August, Gigabyte made a claim that baffled at least one rival, MSI: that scores of its motherboards are "Ready for Native PCIe Gen. 3." Along with ASRock, MSI was one of the first to ship motherboards with PCI-Express 3.0 slots, and the company took pains to educate buyers about what PCI-E 3.0 is and how to spot a motherboard that features it. MSI thinks Gigabyte made a factual blunder bordering on misinformation by claiming that as many as 40 of its motherboards are "Ready for Native PCIe Gen. 3." MSI put its engineering and PR teams to work on a technically sound presentation rebutting Gigabyte's claims. More slides and details follow.

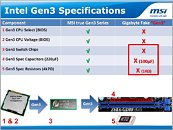

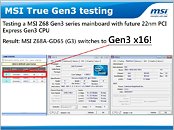

MSI begins by explaining that PCIe 3.0 support isn't as simple as laying a wire between the CPU and the slot. It requires specification-compliant lane switches and electrical components, and MSI argues that buyers can't count on certain Gigabyte boards for future-proofing. MSI ran PCI-Express electrical tests using a 22 nm Ivy Bridge processor sample. It claims that, apart from the G1.Sniper 2, none of Gigabyte's so-called "Ready for Native PCIe Gen. 3" motherboards live up to the badge, and that the badge is extremely misleading to buyers. Time to refill the popcorn bowl.

Source:

MSI

286 Comments on MSI Calls Bluff on Gigabyte's PCIe Gen 3 Ready Claim

It's explained in the manual...

PCI-E 3.0 isn't an interesting topic, though; it's about the least important feature of any motherboard you'd buy today.

Boards that don't use PCIe lane switches might, in theory, support the full 16 lanes of PCIe 3.0 (I have no idea whether they'll be electrically stable). But on the Gigabyte boards that do have lane switches, other than the new G1.Sniper 2 and possibly the new rev. 1.3 boards, the chances of native Gen3 x16 support are slim.

It switches between the SINGLE Gen3 x16 slot and the NF200 controller.

If you install a card in slot 2, it disables ALL OTHER PCI EXPRESS SLOTS fed by the NF200.

If you install an x16 card in any slot other than slot 2, Gen3 is disabled.

Now tell me, who feels like paying $300 for a single-slot ATX board?

Oh, I checked the Gigabyte website:

The G1.Sniper 2 lists Gen3 support:

www.gigabyte.com/products/product-page.aspx?pid=3962#ov

All the other Z68 boards, etc.? No Gen3 support listed anymore (clue, clue!)

www.gigabyte.com/products/product-page.aspx?pid=3863#ov

uk.gigabyte.com/press-center/news-page.aspx?nid=1048

www.techpowerup.com/forums/showthread.php?t=151660

So it's OK for MSI to claim to be first at something it wasn't, but not OK for Gigabyte to try something similar?

MSI needs to climb out of everyone's ass and worry about its own house of cards for a while, IMHO!

On topic... meh. Educated buyers won't fall for Gigabyte's tricks anyway!

Again, the point is that people in glass houses shouldn't throw stones, but it seems MSI used bulletproof glass on its house!

So MSI sold the tech before they used it themselves? www.techpowerup.com/115132/SPARKLE-Announces-Calibre-X240-X240G-Graphics-Cards-With-Dual-Layer-Fan-Blade-Cooling.html

The real marketing BS is the 3.0 implementation. I can already see it coming: everybody upgrades their system and buys a new motherboard for 3.0 support, and the upcoming cards perform the same on 2.0 (2.1) and 3.0. I've seen it in the past and I'm pretty sure I'll see this crap again.

Whether the cards will run at full potential is another story, and we'll see the impact on performance. But like I said, I'm pretty sure they know what they're doing. Just remember that motherboard manufacturers get early engineering samples to test on their boards, so they can build that BIOS, UEFI or whatever, and I'm pretty sure they have a 3.0 card around there somewhere. You wouldn't want your HD7*** or whatever not working (or not fully compatible) in existing motherboards just because the card itself is 3.0. It should be backward compatible, and that's basically what a UEFI or BIOS update is for. Why not update the BIOS before the actual GPU launch?

I'm also pretty sure they will launch motherboards with TRUE NATIVE (hardware-wise) PCIe 3.0 support after the actual video cards hit the market.

I'm still going to buy Gigabyte motherboards anyway...

IDK, I guess I'm just tired of people who are obviously wrong making others look bad to climb to the next rung of the ladder.

I think it's very nice that MSI took the time to make those slides, and it's a great way for them to show the community how PCI-E lane allotment and the standards work. The sad part is that it doesn't disprove that the first 8 lanes of the first x16 slot on the UD4 are PCI-E 3.0 capable.

Besides, if what VRZone says is true, then this doesn't matter.

Isn't the jump to Gen 3 like the one from Gen 1 to Gen 2 in terms of performance gains? Maybe that's why Gigabyte (if they did, that is) skimped, since the bandwidth isn't used most of the time anyway.