Tuesday, December 20th 2011

AMD's Own HD 7970 Performance Expectations?

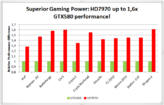

Ahead of every major GPU launch, both NVIDIA and AMD hand reviewers a document known as the Reviewer's Guide. It contains guidelines (suggestions, not instructions) meant to ensure the new GPUs are tested fairly. These documents often also include the vendor's own performance expectations for the GPUs being launched, comparing them either to previous-generation GPUs from the same brand or to the competitor's. Apparently such a comparison between the upcoming Radeon HD 7970 and NVIDIA's GeForce GTX 580, probably part of such a document, has leaked to the internet and was re-posted by 3DCenter.org. The first picture below is a blurry screenshot of a graph comparing the two GPUs across a variety of tests at a resolution of 2560 x 1600. A Tweakers.net community member recreated that graph in Excel using the same data (second picture below).

A couple of things here are worth noting. Performance numbers in reviewer's guides are almost always exaggerated, so that if reviewers get results lower than 'normal', they treat them as abnormal and re-test. It's an established practice that both GPU vendors follow. Also, AMD Radeon GPUs have traditionally performed well at 2560 x 1600; even the gap between the Radeon HD 6970 and the GeForce GTX 580 narrows a bit at that resolution.

Source:

3DCenter.org

101 Comments on AMD's Own HD 7970 Performance Expectations?

I think that was the only "bad" ATi/AMD GPU. nVidia has had more disappointing GPUs than AMD (*cough* 590/570 *cough*). AMD was the only one that provided more than sufficient power phases, with Volterra VRMs.

Considering 2 x 40 is only 80 he he:slap:

Yeah - ya know what? I've been reading articles on AMD/ATI's "EXPECTATIONS" for the last 5 years now and seen nothing but soggy half-arsed failure after soggy half-arsed failure that ONLY EVER achieves reasonable frame rates by REMOVING GRAPHIC PROCESSING from your games - reducing textures, texture processing and filtering against your will and without your permission, at driver level.

Forcing FSAA to be disabled with no way to enable it - and other cheap underhanded Bull-SCHYYTE.

I have to rename all my game exe's so I can at least use AA, BECAUSE THEIR ASS-HAT SOLUTION TO "IMPROVING PERFORMANCE" IS TO JUST FORCE DISABLE (IN THE DRIVER) ANY QUALITY IMPROVING SETTINGS, and I'm sick of it - this company has failed - it can't compete and it must stop LYING to its customers, because no matter WHAT result it claims to get, it only gets it BECAUSE it skewed the results, the environment, AND the benchmark itself just to get them, and it will never live up to that in real life without DISABLING half your options before you play any game.

At the very least it's a reading comprehension fail.

Either way you should just admit the fault perhaps laugh it off and move on.

:toast:

... Erm... what?

I've never had this problem EVER

You should probably change all the sliders in catalyst to quality instead of performance as this disables any optimisations.

Skyrim is the best recent example.

After a "Performance" Upgrade from AMD - FSAA was simply REMOVED at driver level - no matter what you set in the game or your Catalyst driver there is NO AA anymore - and the whole gfx goes to schyte with creepy crawly aliased edges all over the screen - but if you simply Alt-F4, find tesv.exe, rename it to quake.exe, and run the game again? BAM, all processing now works perfectly again.

It is a very well known fact that AMD/ATI have been cheating in their driver using EXE name detection for YEARS, deleting textures and grass in games like Far Cry to reduce load on their struggling hardware, and even flat out cheating in FurMark by manipulating settings to affect the benchmark, as covered in an article published on this TPU forum a few years ago.

(Edit: the stock cooler design was so inferior that if you actually maxed the card EVEN AT STOCK CLOCKS it could physically destroy itself)

YES, the idea of "Per-Game Optimizations" started as a good idea - to boost compatibility with various games, and both nVidia and AMD/ATI use it - the fact was that it was there for COMPATIBILITY - not to cover up how lame ass rubbish your useless gfx cards are by force disabling people's PERFECTLY COMPATIBLE quality settings just to get a better frame rate.

That is just plain fraud - and in my limited personal opinion, that is not representative of TechPowerUp or its administration, and based on my personal experience with AMD/ATI products, I believe AMD/ATI are frauds - and anyone new to the game should take their "Claims" and "Expectations" with a large tablespoon of salt.

www.hardocp.com/article/2011/12/19/amd_catalyst_121_preview_profiles_performance

New controls to choose how those application profiles are configured...

So instead of "lying" by hardcoding temporary fixes in the driver that reduce IQ but fix other issues... now users can tweak to their heart's content...

and... it's Nvidia who coded a profile for FurMark to keep their cards from dying while running it...

sigh... :shadedshu

More specifically, "Catalyst A.I.". The description may say it's just for textures, but setting it to High Quality instead of Performance/Quality seems to disable all optimisations.

And as far as I know has always done this.

"Catalyst A.I: Catalyst A.I. allows users to determine the level of 'optimizations' the drivers enable in graphics applications. These optimizations are graphics 'short cuts' which the Catalyst A.I. calculates to attempt to improve the performance of 3D games without any noticeable reduction in image quality. In the past there has been a great deal of controversy about 'hidden optimizations', where both Nvidia and ATI were accused of cutting corners, reducing image quality in subtle ways by reducing image precision for example, simply to get higher scores in synthetic benchmarks like 3DMark. "

:D

Thanks for playing though.

^^This sums up my opinion.

Just to point out the obvious, according to Moore's Law, AMD should be pumping out much more than 30% improvement.

Now let's assume it's actually lower by 15 percentage points (132%)... that puts it behind a GTX 590 (140%) but still some way ahead of the HD 5970 (124%).