Thursday, December 13th 2012

HD 7950 May Give Higher Framerates, but GTX 660 Ti Still Smoother: Report

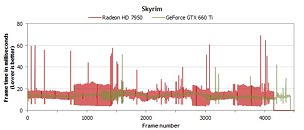

The TechReport, which includes latency-based testing in its graphics card reviews, published a retrospective review that takes recent driver improvements into account. It concluded that the Radeon HD 7950, despite yielding higher frame rates than the GeForce GTX 660 Ti, has higher frame latencies (the time it takes to render each frame), resulting in micro-stutter. In response to the comment drama that ensued, its reviewer made side-by-side high-speed recordings, at 120 FPS and 240 FPS, of a scene from "TESV: Skyrim" as rendered by the two graphics cards, and slowed them down. In slow motion, micro-stuttering is more apparent on the Radeon HD 7950 than on the GeForce GTX 660 Ti. Find the slow-motion captures after the break.

Source: The TechReport
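Higher average frame rates and worse frame latency can coexist. A minimal Python sketch, using made-up frame times for two hypothetical cards (not TechReport's data), computes average FPS alongside a 99th-percentile frame time, one common latency-style metric. TechReport's exact methodology is not described in this post, so the choice of metric here is an assumption.

```python
import math

def average_fps(frame_times_ms):
    """Frames rendered divided by total elapsed time, in frames per second."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)

def percentile_frame_time(frame_times_ms, p):
    """Nearest-rank percentile: at least p percent of frames finish at or under this time."""
    ordered = sorted(frame_times_ms)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]

# Hypothetical data: card A is usually fast but spikes on 5 of 100 frames;
# card B is slightly slower but perfectly even.
card_a = [12.0] * 95 + [60.0] * 5
card_b = [15.0] * 100

for name, times in (("A", card_a), ("B", card_b)):
    print(f"card {name}: {average_fps(times):.1f} fps average, "
          f"99th percentile frame time {percentile_frame_time(times, 99):.0f} ms")
```

Card A wins on average FPS (69.4 vs. 66.7), yet 1 in 20 of its frames takes 60 ms, which is the kind of hitch an FPS average hides.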

122 Comments on HD 7950 May Give Higher Framerates, but GTX 660 Ti Still Smoother: Report

So I tried two 5850s. Nice performance boost, but the stuttering was back in full effect.

So I decided to try nVidia. My 580 gets roughly the same frame rates as the 5850 CrossFire combo in the things I tested, but is noticeably smoother. Stuttering is rare on my 580; it still happens on some occasions, but much less frequently.

Of course, YMMV. I'm sure there's more to it than just AMD and their drivers. But on my setup, I get a better experience with nVidia.

And let's face it: if it's THAT bad, no one would be buying AMD cards, period, but we both know that isn't true, right?

We are talking milliseconds. Can anyone out there honestly tell me they can see milliseconds? lol

Give me the bus width and extra video RAM any day; it's much more future-proof.

They are a great card for the money, plain and simple.

The few games that do have some sort of blurring option generally run much smoother at much lower frame rates. Just look at Crysis 1 as an example. With blurring, it rendered smoothly on most setups all the way down into the 30s, whereas other games require a much higher frame rate to achieve the same level of smoothness.

Besides, you do not have to be able to see each individual frame to recognize when something isn't looking smooth. Most people I've shown these issues to first-hand can't put their finger on what's wrong, but they perceive microstuttering as something that's just a little off and doesn't feel quite right.

EDIT: Found what I was looking for to prove my point. Even at 60fps, some settings show a noticeable difference. It's even more pronounced if you view on a high quality CRT.

frames-per-second.appspot.com/

techreport.com/review/23150/amd-radeon-hd-7970-ghz-edition/7

Might as well go back to the XFX 7950 Black at 900 MHz with no turbo boost.

For an AIB-overclocked vs. AIB-overclocked product comparison, TechReport should have used the Sapphire 100352VXSR, i.e. a 7950 at 950 MHz with no turbo boost.

webvision.med.utah.edu/book/part-viii-gabac-receptors/temporal-resolution/

And xbitlabs also had a look at how display technology affects perceived response times:

www.xbitlabs.com/articles/monitors/display/lcd-parameters_3.html

Anyway, they say something like: the eye (thanks to the brain) can actually perceive changes as short as 5 ms. That's 200 frames per second.

Cinema is smooth at 24fps because those frames are delivered consistently.

i.e., if you plot time vs frames, then

at 0ms you get frame 1,

at 41.7ms you get frame 2,

at 83.3ms you get frame 3 and so on.

The frames always arrive right on time, and your brain combines them into an illusion of fluid motion. Of course, it kinda helps that every frame in a movie is already done and rendered, so you don't have to worry about any render delays.

On a computer, the case might be like:

at 0 ms you get frame 1

at 16.7ms you get frame 2

at 40ms you get frame 3 (now this frame should have arrived at 33.3ms)

at 50ms you get frame 4

Frame 3 was delayed by about 6.7ms. At a consistent 60fps it should have arrived at 33.3ms, but processing delays meant it rolled off the GPU late. Your in-game fps counter or benchmark tool won't notice it at all, because it still arrived before 50ms (when frame 4 was due), but your eye, sensitive to delays as short as 5ms, notices this as a slight stutter.
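The arithmetic in this post can be checked with a short Python sketch. It compares the example arrival times against an ideal 60fps schedule and shows what a simple FPS counter would report; the 5ms threshold is the poster's perception figure, used here as an assumption rather than an established limit. The function names are illustrative only.

```python
def ideal_schedule(fps, n):
    """Timestamps (ms) at which n frames would arrive with perfectly even pacing."""
    return [i * 1000.0 / fps for i in range(n)]

def pacing_delays(arrivals_ms, fps):
    """How late (positive) or early (negative) each frame is versus the ideal schedule."""
    return [a - ideal for a, ideal in zip(arrivals_ms, ideal_schedule(fps, len(arrivals_ms)))]

def late_frames(arrivals_ms, fps, threshold_ms):
    """1-based numbers of frames that arrive more than threshold_ms late."""
    return [i + 1 for i, d in enumerate(pacing_delays(arrivals_ms, fps)) if d > threshold_ms]

def counter_fps(arrivals_ms):
    """What a simple FPS counter reports: frame intervals divided by elapsed time."""
    return (len(arrivals_ms) - 1) * 1000.0 / (arrivals_ms[-1] - arrivals_ms[0])

# Arrival times (ms) from the example above.
arrivals = [0.0, 16.7, 40.0, 50.0]
print(counter_fps(arrivals))                      # 60.0
print(late_frames(arrivals, 60, 5.0))             # [3]
print(round(pacing_delays(arrivals, 60)[2], 1))   # 6.7
```

The counter still reads 60fps while frame 3 lands about 6.7ms late. The same `ideal_schedule(24, 3)` call reproduces the cinema timestamps of 0, 41.7 and 83.3ms.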

I think people who bought Nvidia have to say negative things about AMD because... well, let's face it, they can't do anything else, they have Nvidia!! And since I'm not a fan of either, I can say the same about AMD fans! I just go by price/performance, and right now that is AMD!!!

I would even go with an AMD CPU setup just because they are cheaper, but that doesn't give PCIe Gen3 support, so I gave up on it!!

:nutkick:

If you have a great experience with your card, by all means, keep using it. I'm not here to tell you otherwise. After all, what works best for one doesn't always work best for another. I'm not here to tell you AMD is bad for you. If it works great in your setup, there's no reason for you to worry about it at all.

The cards just don't seem to work their best in my particular setup. I can't speak for everyone though.

On the topic of this particular article and related reviews, however, I do like the latency based approach to testing. It seems to fall into line with how my system behaves with these cards.

With a newer beta driver:

techreport.com/review/23527/review-nvidia-geforce-gtx-660-graphics-card/5

techreport.com/review/23527/review-nvidia-geforce-gtx-660-graphics-card/8

Anyway, I see this as a necessary evil. At least it will shake up AMD's driver team.

950 MHz for ALUs/TMUs/ROPs (1792/112/32)

= 3404.8 GFLOPS / 106.4 GTexels/s / 30.4 GPixels/s

5 GHz 384-bit GDDR5

= 240 GB/s

www.zotac.com/index.php?page=shop.product_details&flypage=flypage_images-SRW.tpl-VGA&product_id=496&category_id=186&option=com_virtuemart&Itemid=100313&lang=en

1111 MHz for ALUs/TMUs/ROPs (1344/112/24)

= 2986.368 GFLOPS / 124.432 GTexels/s / 26.664 GPixels/s

6.608 GHz 192-bit GDDR5

= 158.592 GB/s
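The figures above are clock speed multiplied by unit count. A Python sketch reproducing them, using the clocks exactly as quoted in the post; the 2 FLOPs per ALU per clock factor is an assumption (one fused multiply-add), chosen because it matches the quoted GFLOPS. These are theoretical peaks, not measured performance.

```python
def theoretical_throughput(core_mhz, alus, tmus, rops, mem_ghz, bus_bits):
    """Peak rates from clock x unit count.

    Assumes 2 FLOPs per ALU per clock (one fused multiply-add).
    mem_ghz is the effective GDDR5 data rate quoted in the post.
    """
    return {
        "GFLOPS": core_mhz * alus * 2 / 1000,
        "GTexels/s": core_mhz * tmus / 1000,
        "GPixels/s": core_mhz * rops / 1000,
        "GB/s": mem_ghz * bus_bits / 8,  # data rate x bus width in bytes
    }

# Clocks and unit counts as quoted in the post.
hd7950 = theoretical_throughput(950, 1792, 112, 32, 5.0, 384)
gtx660ti = theoretical_throughput(1111, 1344, 112, 24, 6.608, 192)

for metric in hd7950:
    print(f"{metric:>10}: HD 7950 {hd7950[metric]:9.3f} | GTX 660 Ti {gtx660ti[metric]:9.3f}")
```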

---

My conclusion: it would appear that the 660 Ti has faster timings (renders the scene faster) and does more efficient texel work (can map textures faster).

--> Higher clocks = faster rendering. <--

Games don't use the ALUs, the ROPs, and the RAM efficiently on the PC, so more Hz means more power even if you have significantly fewer units.

Games (+HPC with CUDA): Nvidia <-- unless AMD is cheaper for the same performance.

High Performance Computing (not with CUDA): AMD

Just a little tweet from Anand Shimpi:

twitter.com/anandshimpi/status/279440323208417282

I'm pretty confident btarunr wouldn't have linked the article here either if he felt it was biased.

Yes, older drivers (12.7 beta), but the entire point is: no bias and no AMD crap-out. Also, identifying each latency blip as a real issue rather than a glitch requires repeatedly rerunning the same scene and seeing how the latencies play out.

And yes, to repeat, the graph above uses older drivers, but the point is still valid: there are no inherent issues with the AMD cards. Nvidia's Vsync may well be doing its intended job here to minimise latency (effectively reducing it by throwing resources, i.e. speed, at the more difficult scenes).
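The rerun-and-compare idea can be sketched in Python: a spike that recurs at the same point in most passes over a scene is more likely real than a one-off glitch. The one-second time buckets, the 50ms threshold, the majority rule, and the frame-time logs are all hypothetical, and the sketch assumes every run starts at the same point in the scene.

```python
from collections import Counter

def spike_buckets(frame_times_ms, threshold_ms, bucket_s):
    """Time buckets (seconds into the run) containing a frame slower than threshold_ms."""
    buckets, elapsed_ms = set(), 0.0
    for ft in frame_times_ms:
        elapsed_ms += ft
        if ft > threshold_ms:
            buckets.add(int(elapsed_ms // (bucket_s * 1000)))
    return buckets

def recurring_spikes(runs, threshold_ms=50.0, bucket_s=1.0, min_runs=None):
    """Buckets where a spike shows up in at least min_runs runs (default: a majority)."""
    if min_runs is None:
        min_runs = len(runs) // 2 + 1
    counts = Counter()
    for run in runs:
        counts.update(spike_buckets(run, threshold_ms, bucket_s))
    return sorted(b for b, c in counts.items() if c >= min_runs)

# Hypothetical frame-time logs (ms) from three passes over the same scene.
run1 = [20.0] * 60 + [70.0] + [20.0] * 10
run2 = [20.0] * 60 + [70.0] + [20.0] * 10
run3 = [20.0] * 60 + [70.0] + [20.0] * 40 + [90.0]  # extra one-off spike
print(recurring_spikes([run1, run2, run3]))  # [1]
```

The spike around the 1-second mark appears in all three runs and is kept; the extra spike in run 3 shows up only once and is treated as a glitch.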

I'm sad now, I have a 7950 Windforce.

In Skyrim with 12.8 it sometimes had slight stuttering. I updated to 12.10 and the stuttering increased in outdoor areas (not in the cities, where it runs smoothly, but weirdly it also appears in some caves).

I bought it in July during a special offer and paid 310 euros; it was a deal back then.

Now I think I should have gone with a GTX 670, but even now the damn thing costs around 360 euros (the cheapest with standard cooling), with the Asus DCU or Gigabyte Windforce being 380-390 euros.

:cry:

Hope AMD improves their drivers fast. :ohwell:

It sounds like boost could skew average fps: super-high fps in easy-to-render scenes that allow thermal headroom, but not so much in the intensive ones that allow no thermal headroom, which is where you need the power.

If anyone knows of any good reviews that compare minimum fps between boost and non-boost, it would be greatly appreciated.

www.bit-tech.net/hardware/2012/08/16/amd-radeon-hd-7950-3gb-with-boost/1

www.pcper.com/reviews/Graphics-Cards/AMD-Radeon-HD-7950-3GB-PowerTune-Boost-Review

www.hardwarecanucks.com/forum/hardware-canucks-reviews/56220-powercolor-hd-7950-3gb-boost-state-review.html

www.pcper.com/files/imagecache/article_max_width/review/2012-08-13/bf3-1680-bar.jpg

Here the minimum fps is the same.

www.pcper.com/files/imagecache/article_max_width/review/2012-08-13/bac-1920-bar.jpg

And here the boost version has a lower minimum for some reason.

So yeah, it appears the whole boost thing on graphics cards isn't as reliable; it only raises average fps through higher max fps, which is useless if you ask me, because anything higher than 60fps isn't noticeable, while going below 60 in some competitive games might be a big problem.
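The point about boost inflating averages can be illustrated with a Python sketch over made-up numbers (not taken from the linked reviews). It assumes the benchmark is split into equal-length segments, so the mean of per-segment fps equals total frames divided by total time.

```python
def summarize(segment_fps):
    """Average and minimum fps over equal-length segments of a benchmark run."""
    return sum(segment_fps) / len(segment_fps), min(segment_fps)

# Hypothetical per-segment fps: boost helps the two easy segments,
# but the demanding segment leaves no headroom, so it gains nothing.
no_boost = [90.0, 85.0, 40.0]
boost = [100.0, 95.0, 40.0]

for label, fps in (("no boost", no_boost), ("boost", boost)):
    avg, low = summarize(fps)
    print(f"{label:>8}: average {avg:.1f} fps, minimum {low:.0f} fps")
```

The average rises from 71.7 to 78.3 fps while the minimum stays at 40 fps, which is the pattern the poster describes.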