Friday, February 1st 2013

Intel "Haswell" Quad-Core CPU Benchmarked, Compared Clock-for-Clock with "Ivy Bridge"

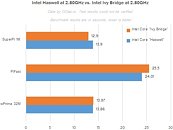

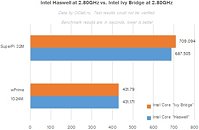

Russian tech publication OCLab.ru, which claims access to an engineering sample of Intel's next-generation Core "Haswell" processor (and an LGA1150 8-series motherboard!), wasted no time in running a quick clock-for-clock performance comparison against the current Core "Ivy Bridge" processor. For the comparison, it set both chips to run at a fixed 2.80 GHz clock speed (by disabling Turbo Boost, C1E, and EIST), which suggests that the engineering sample in OCLab's possession doesn't go beyond that frequency.

The two chips were put through SuperPi 1M, PiFast, and wPrime 32M. The Core "Haswell" chip is only marginally faster than Ivy Bridge, and in fact slower in one test. In its next battery of tests, the reviewer stepped up iterations (load), putting the chips through single-threaded SuperPi 32M and multi-threaded wPrime 1024M. While wPrime performance is nearly identical between the two chips, Haswell crunched SuperPi 32M about 3 percent quicker than Ivy Bridge. It's still too early to call the percentage difference in CPU performance between the two architectures. Intel's Core "Haswell" processors launch in the first week of June.
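For readers who want to check a figure like "about 3 percent quicker" against raw completion times, here is a minimal Python sketch. The function name `percent_less_time` and the example timings are hypothetical placeholders, not OCLab's measurements; it assumes both runs were made at the same fixed clock, so the difference reflects the architecture rather than frequency.

```python
def percent_less_time(baseline_s: float, candidate_s: float) -> float:
    """Return how much less time (in percent) the candidate run took
    compared with the baseline run. Positive means the candidate is quicker.

    Both arguments are completion times in seconds for the same benchmark.
    """
    if baseline_s <= 0:
        raise ValueError("baseline time must be positive")
    return 100.0 * (baseline_s - candidate_s) / baseline_s


# Hypothetical timings for illustration only (not taken from the article):
# a baseline run of 100.0 s and a candidate run of 97.0 s.
print(f"{percent_less_time(100.0, 97.0):.1f}% less time")
```

A negative result would mean the candidate chip was slower in that test, as the article reports for one of the short benchmarks.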

Source:

OCLab.ru


118 Comments on Intel "Haswell" Quad-Core CPU Benchmarked, Compared Clock-for-Clock with "Ivy Bridge"

Simple answer, to make money.

I agree most users don't need even the power of today's CPUs, so why would anyone buy something ever so slightly more powerful than what they have now? They won't, but they probably would buy it if the performance doubled. Yes, they still wouldn't need this extra power, but they would still like that it is 2x the performance of their current product and put their hand in their pocket to buy it.

Maybe some of them will find a use for the extra power, maybe they won't but Intel will be happy and so will I.

And with cloud computing and all that, most personal computing devices will probably be more and more like the terminals of old. It's both good and bad imo.

It's a direct comparison of baseline architecture, meaning that unless they prove to clock to 6 GHz, a high-end Ivy Bridge owner has little to no reason to upgrade, considering raw CPU power alone. From the looks of it here, anyway.

Also, the amount of leakage a chip generates varies from CPU to CPU, so two CPUs of the same model could produce different amounts of heat at the same voltage, but that is a different topic.

All in all, your 920 eats more power than an IVB chip. I honestly wouldn't be surprised if it consumed more power than mine at a high voltage as well.