Tuesday, February 19th 2013

NVIDIA GeForce GTX Titan Final Specifications, Internal Benchmarks Revealed

Specifications of NVIDIA's upcoming high-end graphics card, the GeForce GTX Titan, as reported in the press over the last couple of weeks, are bang on target, according to a spec sheet leaked by 3DCenter.org that is allegedly part of the card's press deck. According to the spec sheet, the GTX Titan indeed features 2,688 of the 2,880 CUDA cores present on the GK110 silicon and 6 GB of GDDR5 memory across a 384-bit wide memory interface, and draws power from a combination of 6-pin and 8-pin PCIe power connectors.

The GeForce GTX Titan core is clocked at 837 MHz, with a GPU Boost frequency of 876 MHz, and 6.00 GHz memory, churning out 288 GB/s of memory bandwidth. The chip features a single-precision floating-point performance figure of 4.5 TFLOP/s, and 1.3 TFLOP/s double-precision. Despite its hefty specs that include a 7.1 billion-transistor ASIC and 24 GDDR5 memory chips, NVIDIA rates the card's TDP at just 250W. More slides and benchmark figures follow.

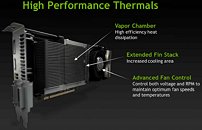
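The bandwidth and single-precision figures quoted above can be cross-checked from the clocks and unit counts. The sketch below is only a sanity check, not NVIDIA's own method: the function names are made up for illustration, and it assumes that each CUDA core retires one fused multiply-add (counted as 2 FLOPs) per clock at the 837 MHz base clock. The double-precision figure is left out because it depends on details the leaked sheet does not spell out.

```python
def memory_bandwidth_gbs(effective_rate_ghz: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s.

    effective_rate_ghz is the effective per-pin data rate (6.00 for the Titan);
    the bus width is divided by 8 to convert bits to bytes.
    """
    return effective_rate_ghz * bus_width_bits / 8


def peak_sp_tflops(cuda_cores: int, core_clock_mhz: float,
                   flops_per_core_per_clock: int = 2) -> float:
    """Peak single-precision throughput in TFLOP/s.

    Assumption (not stated in the leaked sheet): one fused multiply-add
    per core per clock, counted as 2 floating-point operations.
    """
    return cuda_cores * flops_per_core_per_clock * core_clock_mhz / 1e6


# Figures taken from the leaked spec sheet as reported in the article.
bandwidth = memory_bandwidth_gbs(6.00, 384)
sp_tflops = peak_sp_tflops(2688, 837)

print(f"Memory bandwidth: {bandwidth:.0f} GB/s")
print(f"Single precision: {sp_tflops:.1f} TFLOP/s")
```

Under those assumptions, both results line up with the 288 GB/s and 4.5 TFLOP/s figures on the slide.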

The next slide leaked by the source reveals key features of the reference design cooling solution, which uses a large lateral blower that features RPM and voltage-based speed control on the software side; a vapor-chamber plate that draws heat from the GPU, memory, and VRM; and an extended aluminum fin stack that increases the surface area for dissipation.

Next up, we have performance numbers by NVIDIA. In the first slide, we see the GTX Titan pitted against the GTX 680 in Crysis 3. The GTX Titan is shown to deliver about 29 percent higher frame-rates, while being a tiny bit quieter than the GTX 680.

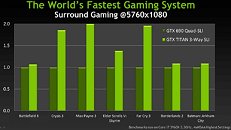

In the second slide, we see three GeForce GTX Titans (3-way SLI) pitted against a pair of GeForce GTX 690 dual-GPU cards (quad-SLI). In every test, the Titan trio is shown to be faster than GTX 690 quad-SLI: about 75 percent faster in Crysis 3, 100 percent faster in Max Payne 3, 40 percent faster in TESV: Skyrim, and 95 percent faster in Far Cry 3. This comparison matters for NVIDIA because, if Titan does end up being a $1000 product, NVIDIA will have to sell three of them while offering something significantly better than GTX 690 quad-SLI.

Source:

3DCenter.org


132 Comments on NVIDIA GeForce GTX Titan Final Specifications, Internal Benchmarks Revealed

Honestly I am more intrigued to see how this does since GK100 never really showed up. Should make for an interesting card regardless, and damn does it look good (improvement on even the 690's design). Essentially, unless it drops below $650 it's not exactly cost effective, but does offer nice bragging rights I suppose. Rumor says NDA drops later today and reviews go out on Thursday.

TL;DR - crack is cheaper.

www.techpowerup.com/reviews/ASUS/ARES_II/27.html

Now tell me AMD Cards are cheap.. :p

So I am just hoping for a little price drop on 670 and/or 680 && get two of those some time soon.

This card reminds me of several people on this website posting about how during the last GPU generations the performance of the new top card is better while the price has also increased dramatically...

That's clever............nice one Nvidia

wonder how this 3x Titan SLI would do against 4x 7970 Crossfire, which is both cheaper and faster than 2x 690 quad-sli

Jen Hsun must be doing this just to see fights break out in a line full of rich people.

Until then it's all speculation, it looks great!! :D

But ask yourself:

- is it the fastest card on the planet? The answer is yes.

- does it scale brutally well as the resolution increases, especially in SLI configuration? That's probably a yes again

- as a high-end card, is it more power efficient than the competition? Yep, looks like it is.

- etc,etc

It's like asking: can you buy a car which only costs you a few hundred thousand $ and which will perform near or just as well as cars costing a million $ would? Yep, but they still sell cars worth million(s) because there is a market for them.

There is a demand for the best, and some people just don't care about the money. New innovations and top performance products were never cheap. If you can forget the price and look at what the card can do, this card currently just owns everything out there, period.

I'd even go so far as to argue that the engineering on the 690 is more impressive than the big ass old school Nvidia approach of having huge die sizes (a la Titan).

Given the SLI capabilities of the 690 (works in pretty much every game of note), if the Titan card is not as good but costs more then the Titan card is a bum deal. I know folk always argue that dual cards are not as good as single cards but I've never seen that proven with the 690. On my 7970's, yes, Crossfire not so proficient (still good though) but 690's have proven their position.

Titan ought to be cheaper than the 690 - then it works. It should cost way more than a 680 - it deserves to but it needs to hit the right spot.

Here's hoping.