Monday, September 23rd 2013

Radeon R9 290X Pictured, Tested, Beats Titan

Here are the first pictures of AMD's next-generation flagship graphics card, the Radeon R9 290X. If the naming caught you off-guard, our older article on AMD's new nomenclature could help. Pictured below is the AMD reference-design board of the R9 290X. It's big, and doesn't have too much going on with its design. At least it doesn't look Fisher Price like its predecessor. This reference design card is all that you'll be able to buy initially, and non-reference design cards could launch much later.

With its cooler taken off, the PCB is signature AMD: you find digital-PWM voltage regulation, Volterra and CPL (Cooper Bussmann) chippery, and, well, the more obvious components, the GPU and memory. The GPU, which many sources say is built on the existing 28 nm silicon fab process, looks significantly bigger than "Tahiti." The chip is surrounded by not twelve, but sixteen memory chips, which could indicate a 512-bit wide memory interface. At 6.00 GHz, we're talking about 384 GB/s of memory bandwidth. Other rumored specifications include 2,816 stream processors, four independent tessellation units, 176 TMUs, and anywhere between 32 and 64 ROPs. There's talk of DirectX 11.2 support. It gets better: the source also put out benchmark figures.
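The bandwidth figure follows from simple arithmetic, sketched here in Python. The function name and the 32-bit-per-chip interface width are assumptions made for illustration (32 bits is the usual GDDR5 chip width, but the source does not state it), and the 512-bit bus itself is still a rumor.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bus width in bytes times per-pin data rate in Gbps."""
    return bus_width_bits / 8 * data_rate_gbps

# Assumption: each memory chip exposes a 32-bit interface.
CHIP_INTERFACE_BITS = 32

rumored_bus = 16 * CHIP_INTERFACE_BITS   # sixteen chips
twelve_chip_bus = 12 * CHIP_INTERFACE_BITS  # twelve chips, for comparison

print(rumored_bus)                                 # 512
print(memory_bandwidth_gbs(rumored_bus, 6.0))      # 384.0
print(memory_bandwidth_gbs(twelve_chip_bus, 6.0))  # 288.0
```

At the same 6.00 GHz effective memory speed, going from twelve chips to sixteen would raise peak bandwidth by a third.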

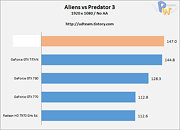

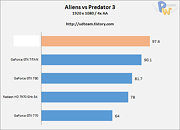

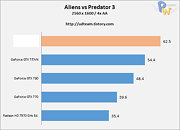

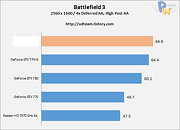

The R9 290X is significantly faster than NVIDIA's GeForce TITAN graphics card in the two games it was tested on, Aliens vs. Predator and Battlefield 3. It all boils down to pricing. AMD could cash in on its performance premium by overpricing the card, much like it did with the HD 7990 "Malta," or it could torch NVIDIA's high-end lineup by pricing the card competitively.

Source:

DG's Nerdy Story

142 Comments on Radeon R9 290X Pictured, Tested, Beats Titan

Oh hang on, you were just trollin', weren't you? Helpful comment, dude :shadedshu

So the number of transistors within the die (chip) has more effect on what each chip costs than the number of dies they harvest from each wafer? I always thought whatever they (AMD/TSMC) feel can be created within the die is free (within limits). Unless such an increase also increases the amount of discarded area between those dies, but I always figured that waste is basically accounted for by the 18% growth from each harvested candidate.

From what you're saying, a GK110, which has fewer transistors, is less costly to produce, even though each physical harvested die is much larger?

As you increase transistor density, the chance of a failing transistor increases, as imperfections cause sections to fail completely or not perform up to speed.

So density/complexity and die size x node size/maturity = price per chip to AMD.
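A rough way to put numbers on that relationship is the minimal sketch below, which uses the common Poisson yield model and the standard dies-per-wafer approximation. Every input (wafer cost, defect density, die areas) is a hypothetical placeholder, not a real AMD, NVIDIA, or TSMC figure. Defect density stands in for node maturity, and transistor density is not modeled separately.

```python
import math

def dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """Approximate candidate dies per wafer, with an edge-loss correction term."""
    radius = wafer_diameter_mm / 2
    gross = math.pi * radius ** 2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return int(gross - edge_loss)

def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies with zero defects under the Poisson yield model."""
    return math.exp(-(die_area_mm2 / 100.0) * defects_per_cm2)

def cost_per_good_die(wafer_cost: float, wafer_diameter_mm: float,
                      die_area_mm2: float, defects_per_cm2: float) -> float:
    """Wafer cost spread over the expected number of defect-free dies."""
    good = dies_per_wafer(wafer_diameter_mm, die_area_mm2) * poisson_yield(
        die_area_mm2, defects_per_cm2)
    return wafer_cost / good

# Hypothetical inputs, chosen only to show the trend.
WAFER_COST = 5000.0      # arbitrary currency units
WAFER_DIAMETER = 300.0   # mm
DEFECT_DENSITY = 0.2     # defects per cm^2

smaller = cost_per_good_die(WAFER_COST, WAFER_DIAMETER, 350.0, DEFECT_DENSITY)
larger = cost_per_good_die(WAFER_COST, WAFER_DIAMETER, 550.0, DEFECT_DENSITY)
print(f"smaller die: {smaller:.2f}  larger die: {larger:.2f}")
```

Under this model a larger die costs more twice over: fewer candidates fit on the wafer, and each one is more likely to catch a defect. Harvesting partly defective dies as cut-down parts, as discussed in this thread, would recover some of that loss and is not captured here.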

I would agree: if they tried this revision of the architecture on a die shrink (20 nm), it would be too much. They get it worked out here, then basically spin it down to 20 nm with some other optimizations through "Pirate Islands," and they minimize risk. Not like what AMD did debuting a whole new GCN architecture on a die shrink. I think the price increase for the harvested chips will be consonant, if not improved. And that's why I maintain the rationale that AMD will harvest 3 true derivatives, not just an XT/LE, but more like what Nvidia did with GK104.

I am very eager to see how 2014 pans out all in.

I'm still on for one of these, but it will have to stretch the 7970 a fair bit, as at 1080p I can get away with a GHz 7970, and value before epeen counts to me.

The 7990 is selling for as low as $599-$649 now. So taking that statement, I'd be surprised if it was priced higher than their dual-GPU solution.

Less than 22hrs to go

When will I get GTA5 on PC with one of these?

Here is the webcast livestream link. It should have a timer countdown to your time-zone.

AMD Webcasts Product Showcase at GPU '14

In case you miss it, it will be posted on AMD's YouTube channel afterwards.

The new link to the livestream is

bit.ly/gpu2014

or

www.livestream.com/amdlivestream

let me know when yall know :D

Where are the benchmarks of the Titan beating the R9 290X? The Titan beats it in quite a few benchmarks. If this is all ATI has... they are in trouble. :twitch: