Wednesday, February 12th 2014

GM107 Features 128 CUDA Cores Per Streaming Multiprocessor

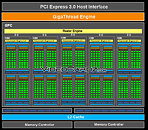

NVIDIA's upcoming GM107 GPU, the first to be based on its next-generation "Maxwell" GPU architecture, reportedly arranges its CUDA cores and streaming multiprocessors differently from "Kepler," although the component hierarchy is similar. The chip reportedly features five streaming multiprocessors, highly integrated computation subunits of the GPU. NVIDIA is referring to these parts as "streaming multiprocessor (Maxwell)," or SMMs.

Further, each streaming multiprocessor features 128 CUDA cores, and not the 192 CUDA cores found in SMX units of "Kepler" GPUs. If true, GM107 features 640 CUDA cores, all of which will be enabled on the GeForce GTX 750 Ti. If NVIDIA is carving out the GTX 750 by disabling one of those streaming multiprocessors, its CUDA core count works out to be 512. NVIDIA will apparently build two GPUs on the existing 28 nm process, the GM107, and the smaller GM108; and three higher performing chips on the next-generation 20 nm process, the GM206, the GM204, and the GM200. The three, as you might have figured out, succeed the GK106, GK104, and GK110, respectively.
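The core counts above are simple multiplication, sketched here in Python. The figures are the rumored numbers from the report rather than confirmed specifications, and the constant and function names are made up for illustration.

```python
# Rumored figures from the report, not confirmed specifications.
CORES_PER_SMM = 128   # CUDA cores per Maxwell streaming multiprocessor (rumored)
CORES_PER_SMX = 192   # CUDA cores per Kepler streaming multiprocessor
GM107_SMM_COUNT = 5   # SMMs on a fully enabled GM107 (rumored)


def cuda_cores(sm_count: int, cores_per_sm: int) -> int:
    """Total CUDA cores for a chip with `sm_count` enabled multiprocessors."""
    return sm_count * cores_per_sm


# GTX 750 Ti: all five SMMs enabled.
gtx_750_ti = cuda_cores(GM107_SMM_COUNT, CORES_PER_SMM)

# GTX 750: assumes exactly one SMM is disabled, as the article speculates.
gtx_750 = cuda_cores(GM107_SMM_COUNT - 1, CORES_PER_SMM)

print(gtx_750_ti, gtx_750)
```

Under these assumptions the two cards come out at 640 and 512 CUDA cores, matching the figures quoted above.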

Source:

VideoCardz

42 Comments on GM107 Features 128 CUDA Cores Per Streaming Multiprocessor

Secondly, pre-GCN AMD cores were much simpler than CUDA cores.

He thinks AMD uses CUDA cores too. :shadedshu: :banghead:

NVIDIA changed how the SMM is internally subdivided: it is now split into 4 blocks, each with its own L1 cache (Kepler has a simpler L1 cache organization per SMX), so a larger L2 cache is needed to accommodate the changes in the cache hierarchy.

AMD APU platforms along with Hybrid Cross-Fire could become noteworthy (it seems as though it might start to become relevant, although we've heard that for many years), and we see much of the entry/low-end computer and laptop business warming to AMD's APUs. As entry systems, APUs offer class-leading graphics alongside a low-end CPU within an efficient package. That's plenty good to get started with some gaming, all while still getting fairly good efficiency/battery life as a standalone solution. If laptops from boutique builders can be optioned with Hybrid Cross-Fire, buyers see the added benefit of dual GPUs when gaming and the efficiency of just the APU graphics when not under 3D load, so they get the best of both. Desktops are very similar: buy an APU desktop, later add a 75W AMD card, and you have the benefit of dual GPUs without even worrying about the PSU. Lastly, perhaps some boost from Mantle (yet to be seen).

For an Intel system with discrete Nvidia graphics, does Intel play ball by offering CPU SKUs with disabled graphics that are still decent/cheap enough for builders to add Nvidia graphics and still tout class-leading efficiency? Can the iGPU be turned off or disabled completely in the BIOS? If not, might the iGPU section still be taking away some efficiency? Do we see a time (if ever) when Intel permits the Nvidia chip to power down in non-stressful operations and hand off to the Intel iGPU? (Is that something Intel sees as in its best interest long term?) Even then, you aren't getting anything like the Hybrid Cross-Fire advantage (ever?).

Nvidia on an Intel CPU has to be as good on perf/W and price as an APU Hybrid Cross-Fire arrangement to stay viable. I could see many OEMs and boutique builders seeing the APU as a better seller for an entry laptop. Those can be sold with an APU offering solid graphics, while offering an MXM slot for a premium. Down the road perhaps they can get the sale on the discrete card, and perhaps even on a new "higher" battery. Desktops can tout the "Hybrid Cross-Fire option" as an upgrade, while still keeping the efficiency of powering down under less stressful (2D) workloads.

Maxwell, being much more efficient, could change entry-level graphics in mobile devices significantly, because the low-end desktop and laptop ranges are still using Fermi silicon. Tegra K1 is already the lowest level of implementation for Nvidia graphics, so it remains to be seen what they do with products that have two to three shader modules enabled, who they sell them to and how they perform. At best, I think, the jump will be similar to the move from Whistler to Mars for AMD.

Nope. It's Optimus or nothing in Intel's case. Nvidia is really the third wheel in the mobile market right now.

In the case of the South African market, over 90% of the laptops sold here have Intel APUs. Nvidia is found only in the Samsung and ASUS ranges in the middle market, AMD is restricted to a few netbooks and the MSI GX series, and in the high-end laptop market Nvidia has the monopoly on discrete graphics in single and multi-GPU configurations.

But that runs counter to the plan from Intel and AMD to move towards greater levels of integration. The less they have people fiddling with the insides, the better. Also, integrated systems give them even more money in the long run when it comes to spares and insurance claims.

Nvidia Optimus, or "switchable graphics", has been, as one would expect, harder to implement than AMD's equivalent seamless hand-off, and they own both of the parts involved.

Why sure, Intel/AMD and even most OEMs look toward that, but the boutique builders are like: whatever sells is OK by them. And well it should be. They are there, and someone needs to be there to "step to the edge" and deliver the latest options in the small arena, especially if it means a buck.

videocardz.com/49632/nvidia-geforce-gtx-750-ti-gtx-750-final-specifications

Sweet.