Friday, July 24th 2015

Skylake iGPU Gets Performance Leap, Incremental Upgrade for CPU Performance

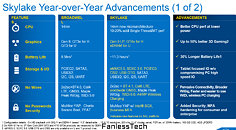

With its 6th generation Core "Skylake" processors, Intel is throwing everything it has into increasing the performance of its integrated graphics. This is necessitated not by some newfound urge to compete with entry-level discrete GPUs from NVIDIA or AMD, but by a rather sudden increase in display resolutions, after nearly a decade of stagnation. Notebook and tablet designers want to cram in higher-resolution displays, such as WQHD (2560 x 1440), 4K (3840 x 2160), and beyond, and are finding it impossible to drive them without discrete graphics. This is likely the market Intel is after. A side effect of this effort is that the iGPU will finally be capable of playing some games at 720p or 900p resolutions, with moderate eye-candy. Games such as League of Legends should be fairly playable, even at 1080p. Intel claims that its 9th generation integrated graphics will offer a performance increase of over 50% over the previous generation.
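To put the resolution jump in perspective, here is a minimal Python sketch that compares pixel counts per frame. The WQHD and 4K dimensions come from the paragraph above; the 720p, 900p, and 1080p dimensions are the commonly used 16:9 values and are an assumption here, as is the idea that pixel count is a rough proxy for GPU load (real workloads also depend on shaders, memory bandwidth, and more).

```python
# Width x height in pixels. WQHD and 4K are quoted in the article;
# 720p, 900p and 1080p use the common 16:9 dimensions (an assumption).
RESOLUTIONS = {
    "720p": (1280, 720),
    "900p": (1600, 900),
    "1080p": (1920, 1080),
    "WQHD": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_count(name):
    """Return the number of pixels in one frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


# Compare every resolution against 1080p as a baseline.
baseline = pixel_count("1080p")
for name in RESOLUTIONS:
    pixels = pixel_count(name)
    print(f"{name:>5}: {pixels:>9,} pixels, {pixels / baseline:.2f}x 1080p")
```

A 4K panel has exactly four times the pixels of a 1080p panel, and WQHD roughly 1.78 times, which suggests why an iGPU that was adequate at 1080p can struggle to drive these displays.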

Moving on to the CPU, the performance increase is a predictable 10-20% in single- and multi-threaded performance over "Broadwell." This is roughly similar to how "Haswell" bettered "Ivy Bridge," and how "Sandy Bridge" bettered "Lynnfield." On some of its "Skylake-U" ultraportable variants, Intel will provide platform support for much of the modern I/O used by today's tablets and notebooks, which previously required third-party controllers and which competing semi-custom SoCs natively offer, such as eMMC 5.0, SDIO 3.0, SATA 6 Gb/s, PCIe gen 3.0, and USB 3.0. Communications are also improved, with 2x 802.11 ac, Bluetooth 4.1, and WiDi 6.0.

Source:

FanlessTech

100 Comments on Skylake iGPU Gets Performance Leap, Incremental Upgrade for CPU Performance

As far as sharing resources, that is really a driver issue, and one that Microsoft says will be addressed with DX12.

And you gotta keep in mind that Intel High End Desktop platforms are made just for that, and a large part of the "gamer market" doesn't even game on desktops, let alone gaming desktops or custom built desktops. However, it IS viable to have a "gamer" line of CPUs with the iGPU disabled, without having to spend as many resources as a new CPU die would need. Intel has proved this for 3 generations so far with E3-12x0 SKUs that have their iGPUs disabled. Although Intel clearly doesn't think that gaming is very important, because as of E3 v4 the trend has been broken, and the E3s were never marketed for gaming anyway. If Intel offers a similarly handicapped unlocked SKU in the future, it could be interesting, though this would have a separate, potentially detrimental impact on its existing i5-xxxxK and i7-xxxxK SKUs.

I want M.2/SATA Express capability. I want to actually be able to RAID my MX100s (remember many of us Sandy users can't use RAID 0 + TRIM, at least without a hell of a lot of trouble). Hell, I want PCIe 3.0.

I also really would like added tangible performance for video decoding stuffs, etc (50%?).

Many people seem to take the approach that upgrading from a 4c to a 4c will long be a fool's errand, and the only way to go is up (i.e. to a 5820k)....which is fair....but I, for one, think Skylake is going to get there just as well. It may be through IPC rather than cores, and overclocked vs overclocked (I think at stock the 5820k will justify its ~10% higher price), but that's okay.

While I'm not quite on board with the NUC future, I am on board with mini-itx/microatx present. Just like with Sandy Bridge, I could see building a fairly small performance box out of Skylake; perhaps one that can more-or-less rival a similar-price build on x99. Whenever I think of simply building the latter now, I feel compelled to check the BTU rating of my air conditioner...I just don't think it would work (for me).

IOW, it may be a side-step for us enthusiasts, sure....but it's a side-step that, when coupled with all the improvements over the past few years, finally pushes it over the edge, imho.

Do I fault anyone that goes 5820k+? No.

How about waiting for Zen (which may compete or be slightly better over-all while perhaps a better price for an 8-core part)? What about Skylake-E, which actually will probably be the big step forward?

All of those are respectable opinions.

To me though, I need a new platform, preferably the best combo of longevity/newest features, with a worth-while upgrade on the cpu which preferably won't use a ridiculous amount of power overclocked.

I think that's Skylake.

Very few of us are extreme overclockers and the presence of an iGPU is not the end of the world. If Intel sat back and kept HD 2500 performance for 5 generations, whiners would complain about how they're just making money off helpless consumers and not doing anything for them. If Intel is improving iGPUs as it is now, the same whiners complain about how Intel GPUs suck and how the Future is Fusion.

as for testing things when your main gpu goes kia/mia ... well i always have a spare GT730/HD5450, but for a mITX or even Micro ATX build it's indeed useful (HTPC again ... or lanbox at most)

CMT is a sound strategy, but not when DX11 and Windows are poor at multithreading.

store.steampowered.com/hwsurvey

Actually, Xeons are pretty cheap. You can get the equivalent of an i7 for the price of an i5 with Xeons. That is if you don't mind not being able to overclock.

So, theoretically, if Skylake is, let's say, 50% more efficient per MHz and is factory clocked at 4.2 GHz, it should be fine even at stock speeds for a very long time.
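The hypothetical in the comment above can be worked through with a rough rule of thumb: per-thread throughput scales with work per clock times clock speed. The Python sketch below is only an illustration under that assumption. The 50% per-clock gain and the 4.2 GHz clock are the commenter's hypothetical numbers, not Intel figures, and the baseline clocks (2.66 GHz for a stock i7 920, 4.0 GHz for an overclocked one) are assumed here from figures mentioned elsewhere in the thread.

```python
def relative_throughput(ipc_gain, new_clock_ghz, old_clock_ghz):
    """Per-thread throughput of the new chip relative to the old one.

    Assumes throughput = work per clock x clock speed, ignoring memory,
    core count and turbo behaviour. ipc_gain is a fraction (0.50 = 50%).
    """
    return (1.0 + ipc_gain) * (new_clock_ghz / old_clock_ghz)


# Commenter's hypothetical: 50% more work per clock at a 4.2 GHz stock clock.
# Baselines are assumptions: an i7 920 at stock and the same chip overclocked.
vs_stock = relative_throughput(0.50, 4.2, 2.66)
vs_overclocked = relative_throughput(0.50, 4.2, 4.0)

print(f"vs 2.66 GHz baseline: {vs_stock:.2f}x")
print(f"vs 4.00 GHz baseline: {vs_overclocked:.2f}x")
```

Under these assumptions the hypothetical chip would be roughly 2.4 times as fast per thread as a stock 2.66 GHz part, and roughly 1.6 times as fast as the same part overclocked to 4.0 GHz.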

I wonder if Skylake-E will feature more cores and what they will use eDRAM for: the CPU part, or only as an aid for the iGPU. I'm kinda aiming at that for 2016. If it's able to provide me with all the compute power for 6 more years like the i7 920 did, that would be amazing.

If I gave you one system with an overclocked i7 920 and one with Skylake, you wouldn't be able to tell the difference. I don't give a shit about synthetic benchmarks and the few examples where you actually notice it. I'm often encoding videos and compressing data with 7zip, and this thing is hammering workloads like a champ with 8 threads. And with games, maybe you gain 2fps using the latest CPU. Totally worth replacing the entire platform and spending 1k € on it... by your logic.

In my case, I've owned a less powerful CPU, an FX 8320, since 2012, and I still can't think of upgrading. The thing is a beast.

face it, the actual successor to 1366 is 1150/1151, not 2011/2011-v3 (unless you have a 990X). you have the right to be nostalgic, but being realistic is more important.

just in case ... even at 20% more IPC (unless that's a Haswell-E-only improvement), a 4790K has a base clock of 4.0. if my 920 effectively went from 2.66 to 4.0, a 4790K does that at stock, can clock higher, and needs less power ... technically my 4690K is faster than my 920 at their respective OCs and, too, needs less power (NO HT INVOLVED, THANKS. keep it realistic in my domain ... i don't edit video or use heavily threaded software, just gaming and day-to-day browsing, some benchmarks sometimes) ... final words: better and worth the change (plus, as i said in another thread, reselling my 1366 setup got me 30% of the 1150 setup investment back, but i didn't take a 2011-v3 :laugh: )

well, for the IGP ... i am happy i have an IGP in my cpu in case my discrete card craps out (as for my GPU doing that ... i never needed it more than once .... for reflashing a 6950)

Mind you, I was going from a 990x i7, not quite the same as the 920... Same damn core though minus AES instructions.