Monday, August 24th 2015

AMD Radeon R9 Nano Nears Launch, 50% Higher Performance per Watt over Fury X

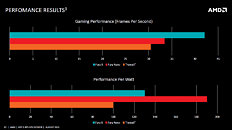

AMD's ultra-compact graphics card based on its "Fiji" silicon, the Radeon R9 Nano (or R9 Fury-Nano), is nearing its late-August/early-September launch. At its most recent Hot Chips presentation, AMD released more figures for the card. To begin with, it lives up to the promise of being faster than the R9 290X at nearly half the power draw: the R9 Nano offers 90% higher performance/Watt than the R9 290X. More importantly, it offers about 50% higher performance/Watt than the company's current flagship single-GPU product, the Radeon R9 Fury X. With these figures, the R9 Nano will be targeted at compact gaming-PC builds capable of 1440p gaming.
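The performance-per-watt figures are ratios of ratios, so it helps to see how they relate to power draw. Below is a minimal Python sketch, assuming for simplicity that the R9 Nano delivers exactly the R9 290X's performance (a hypothetical simplification; AMD only says it is faster). The function names are illustrative and do not come from AMD's material.

```python
def perf_per_watt_gain(perf_ratio: float, power_ratio: float) -> float:
    """Fractional perf/W gain of card A over card B.

    perf_ratio  = performance_A / performance_B
    power_ratio = power_A / power_B
    """
    return perf_ratio / power_ratio - 1.0


def implied_power_ratio(perf_ratio: float, gain: float) -> float:
    """Power ratio (A/B) implied by a performance ratio and a fractional perf/W gain."""
    return perf_ratio / (1.0 + gain)


# Hypothetical: equal performance and the quoted 90% perf/W gain over the R9 290X
print(round(implied_power_ratio(1.0, 0.90), 3))  # 0.526
# Equal performance at exactly half the power would be a 100% gain
print(perf_per_watt_gain(1.0, 0.5))  # 1.0
```

At equal performance, a 90% gain corresponds to roughly 53% of the R9 290X's power, which fits "nearly half its power draw"; any performance advantage over the R9 290X would push the implied power ratio slightly higher.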

Source: Golem.de

106 Comments on AMD Radeon R9 Nano Nears Launch, 50% Higher Performance per Watt over Fury X

Nvidia also holds the presidency of the Khronos Group, which adopted Mantle into Vulkan.

Nvidia was the first to team up with Microsoft for a DX12 demo, well over a year ago.

Nvidia is well placed to know where things are going well in advance.

Don't forget how bad the DX12 test makes AMD look in DX11.

Apples-to-Apples

*I know it's just one game with HairWorks.

With Ashes of the Singularity, the story wasn't so much that AMD was ahead as that Nvidia fell behind.

That is one thing you have to wonder about, but Nvidia having worked on DX12 for over a year doesn't mean that much when it comes to a particular game.

We won't know whether AMD is dumbing things down; you like to point to tessellation, and others point to Nvidia's DirectCompute. Unless both suddenly become transparent about their drivers, there won't be an answer.

So what do you intend? To pay more rather than set HairWorks to a lower setting (4x) to get playable frame rates? The Witcher 3's canned benchmark is impractical for determining whether a $200 card can provide an immersive graphics experience. Nvidia wants you to think that you must step up to a $300 card to make the experience enjoyable, and that's just disingenuous. There are plenty of "tweaks" for foliage, grass, and shadows that are maxed out in the benchmark and that, in my opinion, don't greatly enhance the immersive experience.

Have a look at the differences here in hair, foliage, grass, and shadows. Some, like shadows at 8x (the default), look too razor-sharp, not like the naturally softened shadows of the real world (go outside and look). For HairWorks, their comparison has you looking at the guy's head from closer than I believe the game ever gets, and even that close, the hair at 8x still looks horrible to me. Between 8x and 4x, perhaps the most improved area is the sideburns. So are you saying you're okay paying $100 more for "in your face" sideburns, and that doesn't burn you?

www.geforce.com/whats-new/guides/the-witcher-3-wild-hunt-graphics-performance-and-tweaking-guide#nvidia-hairworks-config-file-tweak