Monday, January 4th 2016

NVIDIA Announces Drive PX 2 Mobile Supercomputer

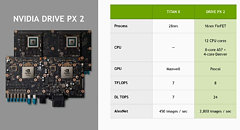

NVIDIA announced the Drive PX 2, the first in-car AI deep-learning device. This lunchbox-sized "mobile supercomputer" embeds up to twelve CPU cores and a "Pascal" GPU built on the 16 nm FinFET process, delivering 8 TFLOP/s of raw compute power and 24 TOps for deep-learning applications. The chips are liquid-cooled and draw 250 W in all. The device will be offered to car manufacturers for designing and co-developing self-driving cars. The press release follows.

Accelerating the race to autonomous cars, NVIDIA (NASDAQ: NVDA) today launched NVIDIA DRIVE PX 2 -- the world's most powerful engine for in-vehicle artificial intelligence.

NVIDIA DRIVE PX 2 allows the automotive industry to use artificial intelligence to tackle the complexities inherent in autonomous driving. It utilizes deep learning on NVIDIA's most advanced GPUs for 360-degree situational awareness around the car, to determine precisely where the car is and to compute a safe, comfortable trajectory.

"Drivers deal with an infinitely complex world," said Jen-Hsun Huang, co-founder and CEO, NVIDIA. "Modern artificial intelligence and GPU breakthroughs enable us to finally tackle the daunting challenges of self-driving cars.

"NVIDIA's GPU is central to advances in deep learning and supercomputing. We are leveraging these to create the brain of future autonomous vehicles that will be continuously alert, and eventually achieve superhuman levels of situational awareness. Autonomous cars will bring increased safety, new convenient mobility services and even beautiful urban designs -- providing a powerful force for a better future."

24 Trillion Deep Learning Operations per Second

Created to address the needs of NVIDIA's automotive partners for an open development platform, DRIVE PX 2 provides unprecedented amounts of processing power for deep learning, equivalent to that of 150 MacBook Pros.

Its two next-generation Tegra processors plus two next-generation discrete GPUs, based on the Pascal architecture, deliver up to 24 trillion deep learning operations per second, which are specialized instructions that accelerate the math used in deep learning network inference. That's over 10 times more computational horsepower than the previous-generation product.

DRIVE PX 2's deep learning capabilities enable it to quickly learn how to address the challenges of everyday driving, such as unexpected road debris, erratic drivers and construction zones. Deep learning also addresses numerous problem areas where traditional computer vision techniques are insufficient -- such as poor weather conditions like rain, snow and fog, and difficult lighting conditions like sunrise, sunset and extreme darkness.

For general purpose floating point operations, DRIVE PX 2's multi-precision GPU architecture is capable of up to 8 trillion operations per second. That's over four times more than the previous-generation product. This enables partners to address the full breadth of autonomous driving algorithms, including sensor fusion, localization and path planning. It also provides high-precision compute when needed for layers of deep learning networks.

Deep Learning in Self-Driving Cars

Self-driving cars use a broad spectrum of sensors to understand their surroundings. DRIVE PX 2 can process the inputs of 12 video cameras, plus lidar, radar and ultrasonic sensors. It fuses them to accurately detect objects, identify them, determine where the car is relative to the world around it, and then calculate its optimal path for safe travel.

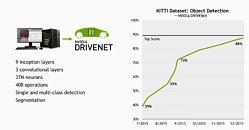

This complex work is facilitated by NVIDIA DriveWorks, a suite of software tools, libraries and modules that accelerates development and testing of autonomous vehicles. DriveWorks enables sensor calibration, acquisition of surround data, synchronization, recording and then processing streams of sensor data through a complex pipeline of algorithms running on all of the DRIVE PX 2's specialized and general-purpose processors. Software modules are included for every aspect of the autonomous driving pipeline, from object detection, classification and segmentation to map localization and path planning.

End-to-End Solution for Deep Learning

NVIDIA delivers an end-to-end solution -- consisting of NVIDIA DIGITS and DRIVE PX 2 -- for both training a deep neural network, as well as deploying the output of that network in a car.

DIGITS is a tool for developing, training and visualizing deep neural networks that can run on any NVIDIA GPU-based system -- from PCs and supercomputers to Amazon Web Services and the recently announced Facebook Big Sur Open Rack-compatible hardware. The trained neural net model runs on NVIDIA DRIVE PX 2 within the car.

Strong Market Adoption

Since NVIDIA delivered the first-generation DRIVE PX last summer, more than 50 automakers, tier 1 suppliers, developers and research institutions have adopted NVIDIA's AI platform for autonomous driving development. They are praising its performance, capabilities and ease of development.

"Using NVIDIA's DIGITS deep learning platform, in less than four hours we achieved over 96 percent accuracy using Ruhr University Bochum's traffic sign database. While others invested years of development to achieve similar levels of perception with classical computer vision algorithms, we have been able to do it at the speed of light."

-- Matthias Rudolph, director of Architecture Driver Assistance Systems at Audi

"BMW is exploring the use of deep learning for a wide range of automotive use cases, from autonomous driving to quality inspection in manufacturing. The ability to rapidly train deep neural networks on vast amounts of data is critical. Using an NVIDIA GPU cluster equipped with NVIDIA DIGITS, we are achieving excellent results."

-- Uwe Higgen, head of BMW Group Technology Office USA

"Due to deep learning, we brought the vehicle's environment perception a significant step closer to human performance and exceed the performance of classic computer vision."

-- Ralf G. Herrtwich, director of Vehicle Automation at Daimler

"Deep learning on NVIDIA DIGITS has allowed for a 30X enhancement in training pedestrian detection algorithms, which are being further tested and developed as we move them onto NVIDIA DRIVE PX."

-- Dragos Maciuca, technical director of Ford Research and Innovation Center

The DRIVE PX 2 development engine will be generally available in the fourth quarter of 2016. Availability to early access development partners will be in the second quarter.

31 Comments on NVIDIA Announces Drive PX 2 Mobile Supercomputer

Talk about PR department on steroids.

Edit: Are any of you WCG or F@H members thinking what I'm thinking?

For the Ford, the DNN calculates how you can juggle nose picking, picking your fantasy football team, and getting to the nearest McDonalds.

Quick screengrab of the Pascal GPUs on the reverse side of the PX2 unit

and the obligatory numbers slide...

Volvo announced as the launch partner for the system.

The Drive PX2 will use the trained weight data to do online/direct image detection and classification for inference purposes (identifying road signs, avoiding obstacles, avoiding pedestrians, avoiding other vehicles, etc.). Bear in mind that there will be hundreds or thousands of images to be processed each second, so don't be surprised about the high GPU capability and the "2,800 images/sec." claim. Yes, hardware-wise it might go to waste, but in autonomous driving it's always better to be safe than sorry.

Oh, and it can also be used to collect extra and new images and sensor data for further training purposes.

That's more like it :)

The key is cumulative but localized training.

Cumulative means that the training process will get as much data as it wants, so the model can minimize overfitting, and localized means that all the data you get will be relevant and not redundant.

Resistance is Futile.

Maybe if they can train the system to park in the garage for me it might have some usefulness. Leave the car in the driveway, walk away, the car opens the garage door, waits for it to rise, enters the garage, commands the garage door to lower and powers down.

Oh, and also, this isn't AI, it's machine learning. Although they might seem similar to most, they are very different.

I'll leave.....