Monday, June 17th 2019

Intel "Ice Lake" IPC Best-Case a Massive 40% Uplift Over "Skylake," 18% on Average

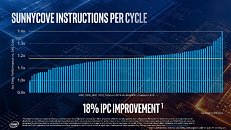

In late May, Intel made its first major disclosure of the per-core CPU performance gains achieved with its "Ice Lake" processor that packs "Sunny Cove" CPU cores. Across a spectrum of benchmarks, Intel claims a best-case IPC (instructions per clock) uplift of a massive 40 percent over "Skylake," and a mean uplift of 18 percent. The worst-case scenario sees its performance negligibly below that of "Skylake." Intel's IPC figures are derived entirely from synthetic benchmarks, which include SPEC 2016, SPEC 2017, SYSmark 2014 SE, WebXPRT, and Cinebench R15. The comparison to "Skylake" is relevant because Intel has been using essentially the same CPU core in the three succeeding generations, which include "Kaby Lake" and "Coffee Lake."

A Chinese tech-forum member with access to an "Ice Lake" 6-core/12-thread sample put the chip through the CPU-Z internal benchmark (test module version 17.01). At a clock-speed of 3.60 GHz, the "Ice Lake" chip allegedly achieved a single-core score of 635 points. To put this number into perspective, a Ryzen 7 3800X "Matisse" supposedly needs to run at 4.70 GHz to match this score, and a Core i7-7700K "Kaby Lake" needs to run at 5.20 GHz. Desktop "Ice Lake" processors are unlikely to launch in 2019. The first "Ice Lake" processors are 4-core/8-thread chips designed for ultraportable notebook platforms, which come out in Q4-2019, and desktop "Ice Lake" parts are expected only in 2020.
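To make the clock-for-clock comparison explicit, the quoted figures can be turned into implied per-clock ratios. The Python sketch below is only a rough illustration: it assumes the CPU-Z single-thread score scales linearly with clock speed (a simplification), and the function name `implied_per_clock_ratio` is made up for this example.

```python
def implied_per_clock_ratio(rival_clock_ghz: float, reference_clock_ghz: float) -> float:
    """Per-clock advantage implied when a rival chip must run at
    rival_clock_ghz to match a score achieved at reference_clock_ghz.

    Assumption: the benchmark score scales linearly with clock speed.
    """
    return rival_clock_ghz / reference_clock_ghz


# Clock of the leaked "Ice Lake" sample, as quoted in the forum post.
ICE_LAKE_CLOCK_GHZ = 3.60

# Clocks the rival chips supposedly need to match the 635-point score.
rival_clocks_ghz = {
    'Ryzen 7 3800X "Matisse"': 4.70,
    'Core i7-7700K "Kaby Lake"': 5.20,
}

for name, clock in rival_clocks_ghz.items():
    lead_pct = (implied_per_clock_ratio(clock, ICE_LAKE_CLOCK_GHZ) - 1) * 100
    print(f"Implied per-clock lead over {name}: {lead_pct:.1f}%")
```

Under that linear-scaling assumption, the leaked score would imply a per-clock lead of roughly 31 percent over "Matisse" and 44 percent over "Kaby Lake," all of it resting on a single unverified result.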

Source:

WCCFTech

153 Comments on Intel "Ice Lake" IPC Best-Case a Massive 40% Uplift Over "Skylake," 18% on Average

Developing an architecture without a new node isn't ideal.

Obviously it'd be nice if each new architecture had a new node to go with it, but it's hardly necessary.

The reasoning goes that Intel has plenty of time to fix the plethora of vulnerabilities and rectify the regressions. At the pace new Intel-only vulnerabilities have been popping up I don't think it's that outlandish to expect new ones in relatively short order either.

One might quip that Intel's best hope is to find devastating vulnerabilities in AMD's CPUs, along the lines of having to completely disable hyperthreading. :rolleyes:

It's a bit mind-boggling that so many seem to so blithely accept such massive defects in Intel's CPUs. The mentality is "just go and buy another one", as if there is unlimited money. Planned obsolescence at its most inglorious?

I am willing to bet AMD can do a monolithic 16 core at around 200mm^2 on 7nm. 8-core chiplets are 75-80mm^2 and 12/14nm IO Die is 120mm^2. There are a lot of extra things in IO Die that are not strictly required.
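For reference, the die-area figures in this post add up as follows. This is a back-of-the-envelope Python sketch using only the numbers quoted above, and it assumes a 16-core part is built from two 8-core chiplets plus one IO die; the variable names are illustrative.

```python
# Die areas quoted in the post, in mm^2.
CHIPLET_AREA_MM2 = (75, 80)   # low and high estimate for one 8-core 7nm chiplet
IO_DIE_AREA_MM2 = 120         # 12/14nm IO die
CHIPLETS_FOR_16_CORES = 2     # assumption: 16 cores = two 8-core chiplets

# Total silicon for the chiplet-based 16-core package.
total_low = CHIPLETS_FOR_16_CORES * CHIPLET_AREA_MM2[0] + IO_DIE_AREA_MM2
total_high = CHIPLETS_FOR_16_CORES * CHIPLET_AREA_MM2[1] + IO_DIE_AREA_MM2

# Area a ~200 mm^2 monolithic die would have to save relative to that total.
MONOLITHIC_GUESS_MM2 = 200
saving_low = total_low - MONOLITHIC_GUESS_MM2
saving_high = total_high - MONOLITHIC_GUESS_MM2

print(f"Chiplet design total: {total_low}-{total_high} mm^2")
print(f"Saving needed for a {MONOLITHIC_GUESS_MM2} mm^2 monolithic die: "
      f"{saving_low}-{saving_high} mm^2")
```

So the 200mm^2 guess amounts to trimming 70-80mm^2 of the current total, which would have to come from shrinking the IO portion to 7nm and dropping the parts that are not strictly required.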

We will probably get a good idea about what AMD can do with 7nm in terms of cores and die size when APUs come out. Intel is still betting on 4-core mobile CPUs (which is probably not a bad idea) and AMD's current response is 12nm Zen+ APUs, but 7nm APUs should replace these within a year's time.

You are right about Sunny Cove though, doubled caches will increase the size notably.

I tend to think at 5nm we'll see a pair of CPU core dies and a pair of I/O dies, with about half the logic split between the two, which will bring their temperatures down a bit. The chipset could be a multi-chip solution as well; if it made sense for the CPU, it probably does for the chipset too.

But Intel was about to double theirs as well, putting AMD back where it was. Although they have some 10nm parts in volume production, the desktop chips that were going to bring AVX-512 support to the mainstream aren't here yet.

So, while Intel and AMD have made comparable IPC improvements, it seems to me that AMD has not done everything it should have done to obtain a solid lead over Intel, and their current lead is simply a result of Intel having some unexpected further delays with its 10nm lineup. So, while I still feel pretty excited over the new Ryzens, I take a somewhat cautious view.

We were receiving 5% IPC increases or even less for 10 years and suddenly, boom, 18%, just when AMD seems to get the lead.

AMD has nothing to do with it.

I've done some research into this and it seems that most programs in use by regular people (programs like Firefox, Google Chrome, 7-ZIP, Photoshop, etc.) are using AVX2 (that's AVX-256) which is what is now supported by Zen 2 or Ryzen 3000. AVX-512 may be the newest kind of AVX instructions but it seems that it's still only used in limited and very custom workloads, not in general use.

And besides, most Intel chips in use today clock down via the AVX offset whenever they start executing 256-bit AVX instructions, generally because those instructions draw more power and produce more heat, so the chip can't hold its regular clock speed. AMD's new Zen 2 architecture (or at least so AMD has said) doesn't require any kind of AVX offset. The way I see it, the new Ryzen 3000 series of chips won't downclock while executing 256-bit AVX instructions as their Intel counterparts do, so we'll see better performance from AMD chips than Intel chips in 256-bit AVX workloads.

But you've got to start somewhere; hardware support usually has to come first.

This is PassMark. The single-thread score of the 8665U at ~4.8 GHz (it's a short test, so it might actually run at 4.8 GHz) is 2400 points. The 1065G7 gets 2625 points at 3.9 GHz. If we scale the 1065G7 to 4.8 GHz, we get about 3200 points. That would translate into 34% higher IPC. Any thoughts? I was also skeptical about the 40% mentioned in this stupid forum picture, but PassMark looks a bit more legit to me.
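The clock normalization in this post can be written out as a short Python sketch. It assumes the single-thread score scales linearly with clock speed and that both chips actually hold the quoted clocks during the test, neither of which is guaranteed; `scale_to_clock` is a hypothetical helper named for this example.

```python
def scale_to_clock(score: float, measured_ghz: float, target_ghz: float) -> float:
    """Project a score measured at measured_ghz onto target_ghz,
    assuming the score scales linearly with clock speed."""
    return score * target_ghz / measured_ghz


# Figures quoted in the post.
SCORE_8665U = 2400       # single-thread points at ~4.8 GHz
SCORE_1065G7 = 2625      # single-thread points at 3.9 GHz
CLOCK_8665U_GHZ = 4.8
CLOCK_1065G7_GHZ = 3.9

# Project the 1065G7 result to the 8665U's clock, then compare.
scaled_1065g7 = scale_to_clock(SCORE_1065G7, CLOCK_1065G7_GHZ, CLOCK_8665U_GHZ)
uplift_pct = (scaled_1065g7 / SCORE_8665U - 1) * 100

print(f"1065G7 projected to {CLOCK_8665U_GHZ} GHz: {scaled_1065g7:.0f} points")
print(f"Implied per-clock uplift: {uplift_pct:.1f}%")
```

Without rounding the projected score down to 3200, the same numbers give about 3231 points and an implied uplift of roughly 34.6%.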

That is a 35% difference, sounds very unrealistic.

While Intel have become more aggressive in boosting over the years, AMD took it to a new level with XFR's extra ~200 MHz for burst speed. This sort of stuff can't be accurately measured. This super aggressive boosting is more about manipulating benchmark scores than offering actual improvements, but (unfortunately) I expect Intel to push it further too.

And then we have the benchmarks that, yeah, show amazing numbers, but how do those numbers translate into real-world performance? It's great if you're the benchmark king, but at the end of the day what really matters is how much work you can get done in a certain time frame. We've even seen it with SSDs: sure, the sustained throughput numbers look great, but do they necessarily translate to faster-loading software? Nope, 4K random reads have stayed more or less the same over the last few years.