Tuesday, May 26th 2009

Single-PCB GeForce GTX 295 Pictured

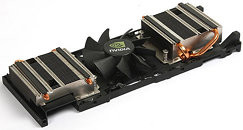

Zol.com.cn has managed to take some pictures of the upcoming single-PCB GeForce GTX 295. Expected to arrive within a month, the single-PCB GTX 295 has the same specs as the dual-PCB model: a 2x 448-bit memory interface, 480 processing cores, 1792MB of GDDR3 memory, and GPU/shader/memory clocks of 576/1242/1998 MHz, respectively.

65 Comments on Single-PCB GeForce GTX 295 Pictured

That must be one of the most inefficient, poorly designed cooling concepts I've ever seen on a premium product. Whoever designed the "stock cooling" should be fired.

This is surely just NVIDIA and its partners padding things out for the next few months until the new products arrive, making it look as though the GT200 chips still have some life in them and are still being innovated on until GT300 arrives.

The DX11 features of the new cards won't be a factor for most people, simply because there won't be any DX11 titles until at least a year from now, if not longer.