NVIDIA GeForce GTX 780 Ti 3 GB Review

Introduction

Here you are: the GeForce GTX 780 Ti, NVIDIA's knee-jerk response to AMD's Radeon R9 290X. It took the $549 R9 290X and the humble $399 R9 290, launched over the past fortnight, to kick NVIDIA in the rear hard enough to force price cuts of 17 to 23 percent on its then-$650 GeForce GTX 780 and $399 GeForce GTX 770. Interestingly, NVIDIA left the GTX TITAN untouched in its $999 ivory tower despite it losing its gaming-performance edge to the R9 290X; NVIDIA probably wants to keep milking its full double-precision floating-point credentials, which other GK110-based GeForce SKUs lack. It is against this backdrop that the GeForce GTX 780 Ti comes to town: a $699 graphics card that almost maxes out the GK110 silicon.

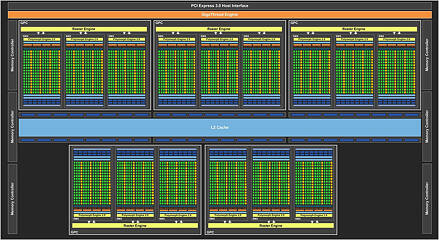

Almost, you ask? Sure, the GTX 780 Ti features all of the 2,880 CUDA cores physically present on the GK110 silicon, the 240 TMUs, and the 48 ROPs; and sure, it's clocked higher than the GTX TITAN, at 875 MHz core, 928 MHz maximum GPU Boost, and 7.00 GHz (GDDR5-effective) memory, which belts out a staggering 336 GB/s of memory bandwidth. But there's a catch: the chip only offers one-third of the GTX TITAN's double-precision floating-point compute performance (493 GFLOP/s vs. 1.48 TFLOP/s). Single-precision performance is left untouched and scales up with the extra cores and clocks (5.04 TFLOP/s vs. 4.70 TFLOP/s). Thankfully for us PC gamers and readers of this review at large, double-precision floating-point performance is completely irrelevant to gaming. Just outside the GPU silicon, the other big difference between the GTX 780 Ti reviewed today and the GTX TITAN is memory size. At 3 GB, the GTX 780 Ti has half the memory of the GTX TITAN, but the memory itself is faster.
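For readers who want to check the headline numbers, here is a minimal Python sketch of the arithmetic behind the 336 GB/s and 5.04 TFLOP/s figures. The bus width, memory rate, core count, and core clock come from this review; the function names are made up for illustration, and the factor of two floating-point operations per CUDA core per clock (one fused multiply-add) is an assumption about how peak throughput is usually counted.

```python
def memory_bandwidth_gbs(bus_width_bits, effective_rate_ghz):
    """Peak memory bandwidth in GB/s: bytes per transfer times transfers per second."""
    return bus_width_bits / 8 * effective_rate_ghz


def peak_sp_tflops(cuda_cores, core_clock_mhz, flops_per_core_per_clock=2):
    """Peak single-precision TFLOP/s, assuming one FMA (2 FLOPs) per core per clock."""
    return cuda_cores * flops_per_core_per_clock * core_clock_mhz * 1e6 / 1e12


# GTX 780 Ti: 384-bit bus, 7.00 GHz effective GDDR5, 2,880 cores at 875 MHz
bandwidth = memory_bandwidth_gbs(384, 7.00)
tflops = peak_sp_tflops(2880, 875)

print(f"Memory bandwidth: {bandwidth:.0f} GB/s")
print(f"Peak single precision: {tflops:.2f} TFLOP/s")
```

These are theoretical peaks at the base clock; sustained throughput in games depends on GPU Boost behavior and the workload.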

The GeForce GTX 780 Ti is designed to be a gamer's card through and through. It has all the muscle any game could possibly need, and it has another thing going its way: better thermals. Although the "GK110" features more transistors than "Hawaii" (7.08 billion vs. 6.20 billion) and, hence, a bigger die (561 mm² vs. 438 mm²), GK110-based products inherently run cooler because NVIDIA's "Kepler" micro-architecture delivers higher performance-per-watt than AMD's "Graphics Core Next," and the larger die spreads that heat over more area, which translates into lower thermal density. This should in turn translate into lower temperatures and, hence, lower noise levels. Energy efficiency and fan noise are really the only tethers NVIDIA's high-end pricing is holding on to.

Given its chops and claimed higher energy efficiency, NVIDIA decided to price the GTX 780 Ti at $699, which is still $150 and a league above the R9 290X. The only way NVIDIA can justify the pricing is by offering significantly higher performance than the GTX 780, the R9 290X itself, and, given its specifications, even the GTX TITAN (at least for gaming and consumer graphics). In our GTX 780 Ti review, we put a reference-design card through its paces against the other options available in the vast >$300 expanse. The card looks practically identical to the GTX TITAN and features the same sexy-looking cooling solution that looks great in a windowed case.

| | GeForce GTX 680 | GeForce GTX 780 | Radeon R9 290 | Radeon R9 290X | Radeon HD 7990 | GeForce GTX Titan | GeForce GTX 780 Ti | GeForce GTX 690 |
|---|---|---|---|---|---|---|---|---|
| Shader Units | 1536 | 2304 | 2560 | 2816 | 2x 2048 | 2688 | 2880 | 2x 1536 |
| ROPs | 32 | 48 | 64 | 64 | 2x 32 | 48 | 48 | 2x 32 |
| Graphics Processor | GK104 | GK110 | Hawaii | Hawaii | 2x Tahiti | GK110 | GK110 | 2x GK104 |
| Transistors | 3500M | 7100M | 6200M | 6200M | 2x 4310M | 7100M | 7100M | 2x 3500M |
| Memory Size | 2048 MB | 3072 MB | 4096 MB | 4096 MB | 2x 3072 MB | 6144 MB | 3072 MB | 2x 2048 MB |
| Memory Bus Width | 256 bit | 384 bit | 512 bit | 512 bit | 2x 384 bit | 384 bit | 384 bit | 2x 256 bit |
| Core Clock | 1006 MHz+ | 863 MHz+ | 947 MHz | 1000 MHz | 1000 MHz | 837 MHz+ | 876 MHz+ | 915 MHz+ |
| Memory Clock | 1502 MHz | 1502 MHz | 1250 MHz | 1250 MHz | 1500 MHz | 1502 MHz | 1750 MHz | 1502 MHz |
| Price | $390 | $500 | $400 | $550 | $770 | $1000 | $700 | $1000 |

NVIDIA G-Sync

Last month, NVIDIA invited the European press to a special event in London, where it demonstrated G-Sync, a new technology that addresses monitor-stuttering issues long deemed unsolvable.

For archaic reasons, such as having evolved from television sets, PC monitors feature fixed refresh rates, the refresh rate being the number of times per second a display redraws what it is showing. There's no technical reason why a modern flat-screen display should have a fixed refresh rate. Since it's the display that dictates the refresh rate, it has always been the GPU's job to ensure display output is fluid, which it did by deploying technologies such as V-Sync (vertical sync). If a GPU sends out fewer frames per second than the display's refresh rate, the output won't appear fluid. If it sends out more frames per second, the output will show artifacts such as tearing, caused by portions of multiple frames being displayed in a single refresh.

NVIDIA's solution to the problem is to kill fixed refresh rates on monitors, instead making them synchronize their refresh rates in real time to the frame rates generated by the GPU. Ever wondered why a movie watched in a theater feels more fluid at even 24 frames per second while a PC game being played at that frame rate doesn't? It's because the monitor mandates that the GPU obey its refresh rate. G-Sync flips that equation and makes the monitor sync its refresh rate to the frame rate of the GPU, so games will feel more fluid at frame rates well below 60. To make this happen, NVIDIA developed hardware that resides inside the display and communicates with the GPU in real time to coordinate G-Sync.
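To make the difference concrete, the following Python sketch compares when frames reach the screen on a fixed 60 Hz display with V-Sync and on a display that refreshes whenever a frame is ready. It is a simplified model under stated assumptions, not a description of how G-Sync is implemented: the GPU is assumed to take a constant 22 ms per frame (about 45 FPS), never to stall waiting for the display, and the variable-refresh panel is assumed to accept a frame the instant it is finished. All function names are made up for illustration.

```python
import math


def display_times_fixed(finish_times_ms, refresh_hz=60.0):
    """With V-Sync, a finished frame waits for the next fixed refresh tick."""
    period = 1000.0 / refresh_hz
    return [math.ceil(t / period) * period for t in finish_times_ms]


def display_times_variable(finish_times_ms):
    """Assumed variable-refresh model: the panel refreshes as soon as a frame is done."""
    return list(finish_times_ms)


def intervals(times):
    """Time between consecutive frames appearing on screen, in ms."""
    return [round(b - a, 2) for a, b in zip(times, times[1:])]


render_ms = 22.0  # assumed constant render time, roughly 45 FPS
finish = [render_ms * i for i in range(1, 7)]

fixed_intervals = intervals(display_times_fixed(finish))
variable_intervals = intervals(display_times_variable(finish))

print("Fixed 60 Hz + V-Sync:", fixed_intervals)
print("Variable refresh:    ", variable_intervals)
```

In this model the fixed-refresh display shows frames at uneven intervals, a mix of one and two refresh periods, even though the GPU delivers them at a perfectly steady pace. That unevenness is the stutter described above; the variable-refresh display simply inherits the GPU's steady 22 ms cadence.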

I witnessed G-Sync with my own eyes at the London event and couldn't believe what I was seeing. Games (playable, so we could tell they weren't recordings) were butter-smooth and extremely fluid. At the demo, NVIDIA showed games running at 40 to 59 FPS in a given scene, and it felt like a constant frame rate throughout. NVIDIA obviously demonstrated cases where G-Sync unleashes its full potential, at frame rates between 35 and 59 FPS. I am still a bit skeptical because it looks too good to be true, so I'm looking forward to testing G-Sync on my own setup and with my own games, mouse and keyboard included, for a complete assessment. A video recording of a display running G-Sync can't convey the effect, so you really need to experience G-Sync in person to buy into the idea. NVIDIA's G-Sync will launch with a $100 price premium on monitors. That's not insignificant, but the premium could go down in the future. Also, from what I've seen, G-Sync promises that lower frame rates will look smoother, which means you no longer need an expensive card to reach 60 FPS; that is money you can put into a G-Sync-enabled monitor instead.

NVIDIA Shadow Play

GeForce Experience Shadow Play is another feature NVIDIA recently debuted. It lets you create video recordings or live streams of your gameplay with minimal performance impact on the game you're playing. The feature is handled by GeForce Experience, which lets you set hotkeys to toggle recording on the fly and choose the output location, format, quality, and so on.

Unlike other apps, which record video in lossless AVI formats by tapping into the DirectX pipeline and clogging the system bus, disk, and memory with high-bit-rate video streams, Shadow Play taps into a proprietary path that lets it copy the display output to the GPU's hardware H.264 encoder. This encoder strains neither the CPU nor the GPU's own unified shaders. Since the video stream being saved to a file comes out already encoded, its bit rate is a small fraction of that of uncompressed AVI.
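To give a rough sense of scale, this Python sketch estimates the bit rate of an uncompressed capture and compares it with an encoded stream. Every input here is an assumption chosen for illustration and not a figure from NVIDIA: a 1920x1080 capture at 60 FPS with 3 bytes per pixel, and a hypothetical H.264 recording bit rate of 50 Mbit/s.

```python
def uncompressed_mbit_per_s(width, height, fps, bytes_per_pixel=3):
    """Raw video bit rate in Mbit/s for an uncompressed capture."""
    return width * height * bytes_per_pixel * fps * 8 / 1e6


# Assumed capture settings (illustrative, not from the review)
raw_mbit = uncompressed_mbit_per_s(1920, 1080, 60)

# Hypothetical encoder bit rate, chosen only for the comparison
assumed_h264_mbit = 50.0

ratio = raw_mbit / assumed_h264_mbit
print(f"Uncompressed: {raw_mbit:.0f} Mbit/s")
print(f"Encoded (assumed): {assumed_h264_mbit:.0f} Mbit/s")
print(f"Raw stream is about {ratio:.0f}x larger")
```

Under these assumptions, the raw stream is dozens of times larger than the encoded one, which is why uncompressed capture puts so much more pressure on the disk and system bus.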

Packaging and Contents

We received the card without packaging or accessories from NVIDIA. Rest assured that the final product will come with the usual documentation, driver CD, and adapters.
