- Joined

- Mar 18, 2008

- Messages

- 5,510 (0.87/day)

- Location

- Australia

| System Name | Night Rider | Mini LAN PC | Workhorse |
|---|---|---|---|
| Processor | AMD R7 5800X3D | Ryzen 1600X | i7 970 |
| Motherboard | MSI AM4 Pro Carbon | GA-AB350H-D3 | Gigabyte EX58-UD5 |
| Cooling | Noctua U9S Twin Fan | Stock Cooler (Copper Core) | Big Shuriken B |
| Memory | 2x16GB DDR4 G.Skill 3600MHz | 4x8GB Corsair 3000 | 6x2GB DDR3 1300 Corsair |
| Video Card(s) | MSI AMD 6750XT | Solo RTX 4060 | MSI RX 580 8GB |
| Storage | 1TB WD Black NVMe / 250GB SSD / 2TB WD Black | 250GB SSD WD / 2TB SSD, 2x1TB, 1x750 | WD 500 SSD |
| Display(s) | Gigabyte 27" 1440P 180Hz / 22" DELL | MSI 27" 1080P 100Hz / 17" DELL | 22" DELL / 19" DELL |
| Case | LIAN LI PC-18 | Mini ATX Case (custom) | Fractal |
| Audio Device(s) | Onboard | Onboard | Onboard |
| Power Supply | Silverstone 850 | Silverstone Mini 450W | Corsair CX-750 |
| Mouse | Coolermaster Pro | Rapoo V900 | Gigabyte 6850X |
| Keyboard | Corsair K90 | Ducky One | Some POS Logitech |
| Software | Windows 10 Pro 64 | Windows 10 Pro 64 | Windows 7 Pro 64 |

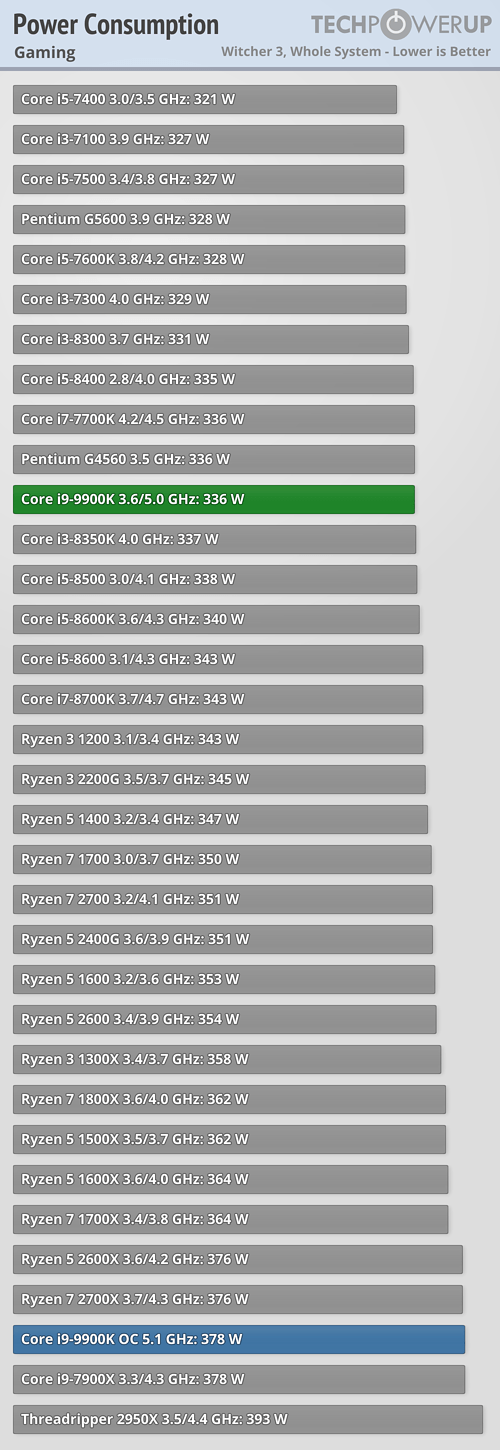

So, we're back to Pentium vs Athlon? Intel's hot, power hungry chips with lots of gigglehurtz are slightly faster than AMD's cheaper, more efficient offerings.

Not exactly. Yes, Intel's chips are hot and power hungry, but back then their CPUs were also slower; today they are hot and power hungry but faster.

Who knows, maybe I will extend it to 10 years if there isn't a 2x performance gain, and for now I can do anything with it. These 3-5% generational performance gains are getting really boring.
