Do you have all the benchmarks from the Nvidia 3000 series and AMD RDNA2? Share them with us, please.

AMD will catch up to the 2080 Super +20% at most. They certainly did not expect such a huge speed bump...

| System Name | Eula |

|---|---|

| Processor | AMD Ryzen 9 7950X |

| Motherboard | MSI MPG B850 Edge Ti WiFi |

| Cooling | Corsair H150i Elite LCD XT White |

| Memory | Trident Z5 Neo RGB DDR5-6000 CL32-38-38-96 1.40V 64GB (2x32GB) AMD EXPO F5-6000J3238G32GX2-TZ5NR |

| Video Card(s) | Gigabyte GeForce RTX 4080 GAMING OC |

| Storage | Crucial P3 Plus, 4 TB NVMe, Samsung 980 Pro 2TB NVMe, Toshiba N300 10TB HDD, WDC Red Pro NAS HDD |

| Display(s) | Acer Predator X32FP 32in 160Hz 4K, Corsair Xeneon 32UHD144 32in 144 hz 4K |

| Case | Antec Constellation C8 RGB White |

| Audio Device(s) | Creative Sound Blaster Z |

| Power Supply | Corsair HX1000 Platinum 1000W |

| Mouse | SteelSeries Prime Pro Gaming Mouse |

| Keyboard | SteelSeries Apex 5 |

| Software | MS Windows 11 Pro |

I'd rather buy an RTX 3080 with 20 GB VRAM, i.e. an RTX 3080 Ti type of product between the RTX 3090 ($1500, 24 GB) and the RTX 3080 ($700, 10 GB). Willing to pay $999 USD.

NVIDIA just announced the GeForce RTX 3090 ($1500), RTX 3080 ($700) and RTX 3070 ($500).

Do you feel like Ampere is for you? If yes, which card are you most interested in? Or sticking with Turing? Or AMD?

The Xbox Series X GPU (52 CUs, 1825 MHz base clock only) is already at RTX 2080 level in the Gears 5 benchmark.

| Processor | Core i9-9900k |

|---|---|

| Motherboard | ASRock Z390 Phantom Gaming 6 |

| Cooling | All air: 2x140mm Fractal exhaust; 3x 140mm Cougar Intake; Enermax ETS-T50 Black CPU cooler |

| Memory | 32GB (2x16) Mushkin Redline DDR-4 3200 |

| Video Card(s) | ASUS RTX 4070 Ti Super OC 16GB |

| Storage | 1x 1TB MX500 (OS); 2x 6TB WD Black; 1x 2TB MX500; 1x 1TB BX500 SSD; 1x 6TB WD Blue storage (eSATA) |

| Display(s) | Infievo 27" 165Hz @ 2560 x 1440 |

| Case | Fractal Design Define R4 Black -windowed |

| Audio Device(s) | Soundblaster Z |

| Power Supply | Seasonic Focus GX-1000 Gold |

| Mouse | Coolermaster Sentinel III (large palm grip!) |

| Keyboard | Logitech G610 Orion mechanical (Cherry Brown switches) |

| Software | Windows 10 Pro 64-bit (Start10 & Fences 3.0 installed) |

Why would anybody think they could recoup their money on a GPU? After a new gen comes out the best you can get for a brief period of time is 50%, falling off quickly thereafter. Sure, lots of people will ask near full price, until finally some poor sucker comes along and pays that price.

The problem is that people who bought 2000-series cards, especially 2080 Tis, paid too much for them, and that's why they price them a little bit too high. Nobody wants to lose $600-700 selling them used. Because if the 3070 brings the same or close to the same performance as a 2080 Ti, that means the 2080 Ti's value is the same too.

So I'm not selling my 2080 Ti, and I advise all of you who bought a 20XX card not to rush to sell it!

You can keep it and wait for the 4000 series, or whatever graphics cards come next from Nvidia.

| Processor | i9 9900KS ( 5 Ghz all the time ) |

|---|---|

| Motherboard | Asus Maximus XI Hero Z390 |

| Cooling | EK Velocity + EK D5 pump + Alphacool full copper silver 360mm radiator |

| Memory | 16GB Corsair Dominator GT ROG Edition 3333 Mhz |

| Video Card(s) | ASUS TUF RTX 3080 Ti 12GB OC |

| Storage | M.2 Samsung NVMe 970 Evo Plus 250 GB + 1TB 970 Evo Plus |

| Display(s) | Asus PG279 IPS 1440p 165Hz G-sync |

| Case | Cooler Master H500 |

| Power Supply | Asus ROG Thor 850W |

| Mouse | Razer Deathadder Chroma |

| Keyboard | Rapoo |

| Software | Win 10 64 Bit |

| System Name | Tiny the White Yeti |

|---|---|

| Processor | 7800X3D |

| Motherboard | MSI MAG Mortar b650m wifi |

| Cooling | CPU: Thermalright Peerless Assassin / Case: Phanteks T30-120 x3 |

| Memory | 32GB Corsair Vengeance 30CL6000 |

| Video Card(s) | ASRock RX7900XT Phantom Gaming |

| Storage | Lexar NM790 4TB + Samsung 850 EVO 1TB + Samsung 980 1TB + Crucial BX100 250GB |

| Display(s) | Gigabyte G34QWC (3440x1440) |

| Case | Lian Li A3 mATX White |

| Audio Device(s) | Harman Kardon AVR137 + 2.1 |

| Power Supply | EVGA Supernova G2 750W |

| Mouse | Steelseries Aerox 5 |

| Keyboard | Lenovo Thinkpad Trackpoint II |

| VR HMD | HD 420 - Green Edition ;) |

| Software | W11 IoT Enterprise LTSC |

| Benchmark Scores | Over 9000 |

Why would anybody think they could recoup their money on a GPU? After a new gen comes out the best you can get for a brief period of time is 50%, falling off quickly thereafter. Sure, lots of people will ask near full price, until finally some poor sucker comes along and pays that price.

And this just keeps on giving, too, because we can STILL enjoy all the little upgrades over time. Higher res, new tech, etc. etc.

Well, it's more about accepting that people just have different needs.

Some people want the best performance for their dollar.

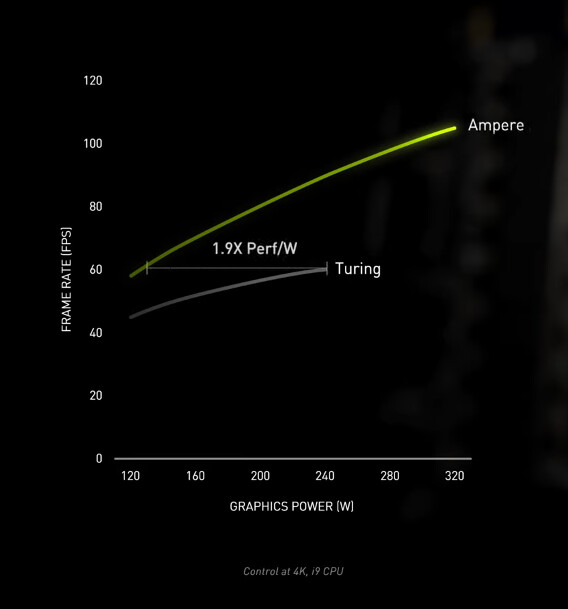

Some just want the best performance per watt (which would always belong to the most expensive GPU).

The majority will look for the right balance between the 2 metrics.

Let's just say you have a laptop with limited cooling potential. Then perf/watt becomes the most limiting factor, and the more efficient GPU will give more FPS. You can look at 5600M vs 2060 laptops and see why efficiency matters.

HUH ?

| System Name | Good enough |

|---|---|

| Processor | AMD Ryzen R9 7900 - Alphacool Eisblock XPX Aurora Edge |

| Motherboard | ASRock B650 Pro RS |

| Cooling | 2x 360mm NexXxoS ST30 X-Flow, 1x 360mm NexXxoS ST30, 1x 240mm NexXxoS ST30 |

| Memory | 32GB - FURY Beast RGB 5600 Mhz |

| Video Card(s) | Sapphire RX 7900 XT - Alphacool Eisblock Aurora |

| Storage | 1x Kingston KC3000 1TB 1x Kingston A2000 1TB, 1x Samsung 850 EVO 250GB , 1x Samsung 860 EVO 500GB |

| Display(s) | LG UltraGear 32GN650-B + 4K Samsung TV |

| Case | Phanteks NV7 |

| Power Supply | GPS-750C |

| System Name | The de-ploughminator Mk-III |

|---|---|

| Processor | 9800X3D |

| Motherboard | Gigabyte X870E Aorus Master |

| Cooling | DeepCool AK620 |

| Memory | 2x32GB G.SKill 6400MT Cas32 |

| Video Card(s) | Asus RTX4090 TUF |

| Storage | 4TB Samsung 990 Pro |

| Display(s) | 48" LG OLED C4 |

| Case | Corsair 5000D Air |

| Audio Device(s) | KEF LSX II LT speakers + KEF KC62 Subwoofer |

| Power Supply | Corsair HX1200 |

| Mouse | Razor Death Adder v3 |

| Keyboard | Razor Huntsman V3 Pro TKL |

| Software | win11 |

Oh please.

Customers buy whatever product looks best at that specific point in time. Trying to attribute that to a few bar charts with minimal percentile gaps is a sign of madness in the eyes of the beholder. That's you in this case.

Nvidia sells because

- It leads in technological advances, mindshare, thought leadership. This is a big thing in any soft- and hardware environment as development is iterative. If you can think faster than the rest, you're smarter and you'll keep leading. Examples: Gsync and other advances in gaming/content delivery, new AA methods, GameWorks emergent tech in co-conspiracy with devs, a major investment in engineers and industry push, CUDA development, etc etc.

- It has a great marketing department

- It has maintained a steady product stack across many generations, this instills consumer faith. The company delivers. Every time. If it delays, there is no competitor that has a better offer.

Got it? Now please, cut this nonsense. Perf/watt is an important metric on an architectural level because it influences what can happen within a specified power budget. It's interesting for us geeks. Not for the other 98% of consumers. In the end all that really matters is what a GPU can do in practice. Perf/watt is great but if you're stuck at 4GB VRAM, well.. yay. If the max TDP is 100W... yay. And if you're just buying the top-end GPU, don't even get me started man, you never cared about perf/watt at all, you only care about absolute perf.

Perf/watt arguments coming from a 2080 Ti owner are like the newborn Tesla driver who's suddenly all about climate, except the reason he bought it was just because he's fond of fast cars. And then speeds off in Ludicrous mode.

Oh please,

Stop with the PCMR shaming, we are all PCMR fanatics.

Well, this might surprise you, but absolute performance only matters when you are benching for a high score. Most of the time 2080 Ti owners will go for a balance between thermals, noise output and performance when gaming. FYI, AIB 2080 Tis can draw 350 W too, but it makes little sense to use that much power for so little performance gain.

Would it surprise you that there are people who would run the 3080 at 250-280 W? From this chart, the gain from 250 W to 320 W is around 15 fps: a 16% perf gain for a 28% power increase. Yes, the more FPS the better, but you are more likely to notice your room warming up after a while than a drop of a few fps.
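To put numbers on that trade-off, here is a minimal Python sketch. It uses only the figures quoted above (a 16% perf gain going from 250 W to 320 W); the function name `relative_efficiency` is made up for illustration and the result is only as good as those chart readings.

```python
def relative_efficiency(perf_gain: float, power_low_w: float, power_high_w: float) -> float:
    """Perf/watt at the higher power limit, relative to the lower one.

    perf_gain is the fractional performance gain (0.16 means +16%).
    A result below 1.0 means the card is less efficient at the higher limit.
    """
    perf_ratio = 1.0 + perf_gain
    power_ratio = power_high_w / power_low_w
    return perf_ratio / power_ratio


# Figures quoted in the post: +16% performance for 250 W -> 320 W.
power_increase = 320 / 250 - 1
ratio = relative_efficiency(0.16, 250, 320)

print(f"power increase: {power_increase:.0%}")
print(f"perf/watt at 320 W relative to 250 W: {ratio:.3f}")
```

With those inputs, perf/watt at 320 W comes out at roughly 0.91 of the 250 W value, which is the efficiency drop the post is pointing at.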

Worrying about the max power a card can draw is just short-sighted or just salty, especially when Ampere offers 25-30% higher efficiency than Turing.

Do you know why Vega is a meme?

A 7% perf/watt improvement vs the Fury X.

Well, people are selling 2080 Tis for 500 USD now, so you might as well grab one. At least the 11 GB framebuffer is more enticing than 8 GB, and the 2080 Ti only uses 40 W more.

RTX and DLSS performance would likely be the same between the two.

| System Name | Not so complete or overkill - There are others!! Just no room to put! :D |

|---|---|

| Processor | Ryzen Threadripper 3970X |

| Motherboard | Asus Zenith 2 Extreme Alpha |

| Cooling | Lots!! Dual GTX 560 rads with D5 pumps for each rad. One rad for each component |

| Memory | Viper Steel 4 x 16GB DDR4 3600MHz not sure on the timings... Probably still at 2667!! :( |

| Video Card(s) | Asus Strix 3090 with front and rear active full cover water blocks |

| Storage | I'm bound to forget something here - 250GB OS, 2 x 1TB NVME, 2 x 1TB SSD, 4TB SSD, 2 x 8TB HD etc... |

| Display(s) | 3 x Dell 27" S2721DGFA @ 7680 x 1440P @ 144Hz or 165Hz - working on it!! |

| Case | The big Thermaltake that looks like a Case Mods |

| Audio Device(s) | Onboard |

| Power Supply | EVGA 1600W T2 |

| Mouse | Corsair thingy |

| Keyboard | Razer something or other.... |

| VR HMD | No headset yet |

| Software | Windows 11 OS... Not a fan!! |

| Benchmark Scores | I've actually never benched it!! Too busy with WCG and FAH and not gaming! :( :( Not OC'd it!! :( |

I love hardware, I'll buy whatever is best for me and not worry too much about anything else

Okay, you repeated yourself once more, but your point is?

Everyone can run their GPU as they see fit, but you're still limited by its max TDP, and you will use that too when you need the performance. Undervolting is always an option, but to have it influence a purchase decision is another world entirely. Your world. People didn't undervolt Vegas because they loved to. They did it because the settings from the factory were usually pretty loose and horrible. They bought a Vega not because it would undervolt so well... they bought it because it had, at some point in time, a favorable perf/dollar. Despite its power usage.

Similarly, not a single soul in the world, well maybe not counting you, bought a 2080 Ti for its great efficiency per watt. They bought it for absolute performance and having the longest epeen for the shortest possible time in GPU history, as it now seems. Can you undervolt it? Yes, and if you don't need the last 5% you probably will. But it's an irrelevant metric here, and also wrt a 3080 or 3090 with a much increased power budget.

Turing is also proving you wrong with the exact same perf/watt figures across the whole stack, by the way. And Ampere won't be much different. The scaling is the same or almost the same regardless of tier/SKU. They all clock about as high.

Well, you are wrong on many points.

First, Nvidia's TDP is set in the BIOS. I could flash a 380 W TDP BIOS to my 2080 Ti if I wanted to, but there is no reason to, because efficiency just falls off a cliff after about 260 W TDP, as mirrored in Nvidia's perf/power chart.

Second, 24 months is actually the longest time a GPU has held onto the performance crown (the 2080 Ti was released in Sept 2018). The previous king would be the 8800 GTX at 19 months (the 1080 Ti at 18 months). I previously owned the Titan X Maxwell and the 1080 Ti too, so I'm just happy that the 3090 will beat the 2080 Ti by a good amount.

Third, it seems like almost 12% of voters in this forum are buying the RTX 3090. Whether it's for the best performance or the highest efficiency, does it matter?

I know you need to justify a 2080ti > 3090 but really, don't. It's fine. All is well. Great cards.

| System Name | Main PC |

|---|---|

| Processor | Ryzen 9 5900X @ 4.7GHz (all core) |

| Motherboard | MSI MAG X570 Tomahawk |

| Cooling | Noctua NH-D15 |

| Memory | G.Skill 64GB 3600MHz CL16 |

| Video Card(s) | Palit RTX 3080 12GB GameRock |

| Storage | 2x PNY XLR8 NVMe 2TB SSD, Samsung 850 EVO 1TB, Samsung 860 EVO 500GB NVMe, 34TB HDD |

| Display(s) | ASUS ROG Swift PG279Q / 27" / IPS / 1440p / 165 Hz G-SYNC |

| Case | Fractal Design R5 w/ 5x Noctua A15 |

| Power Supply | Corsair RM850Wx |

| Mouse | Logitech G Pro Wireless, Logitech G403 Wireless, Rival 650 Wireless, Rival 600, Rival 310 |

| Keyboard | Steelseries Apex Pro, Steelseries Apex 7 TKL, Corsair K70 Rapidfire (MX speed/silver) |

| Software | Windows 10 Pro |

| Benchmark Scores | Time Spy: 18 808 (GPU: 19 819/CPU: 14 591) http://www.3dmark.com/spy/26583284 |

| System Name | Windows 10 64-bit Core i7 6700 |

|---|---|

| Processor | Intel Core i7 6700 |

| Motherboard | Asus Z170M-PLUS |

| Cooling | Corsair AIO |

| Memory | 2 x 8 GB Kingston DDR4 2666 |

| Video Card(s) | Gigabyte NVIDIA GeForce GTX 1060 6GB |

| Storage | Western Digital Caviar Blue 1 TB, Seagate Baracuda 1 TB |

| Display(s) | Dell P2414H |

| Case | Corsair Carbide Air 540 |

| Audio Device(s) | Realtek HD Audio |

| Power Supply | Corsair TX v2 650W |

| Mouse | Steelseries Sensei |

| Keyboard | CM Storm Quickfire Pro, Cherry MX Reds |

| Software | MS Windows 10 Pro 64-bit |

| System Name | Home |

|---|---|

| Processor | Ryzen 3600X |

| Motherboard | MSI Tomahawk 450 MAX |

| Cooling | Noctua NH-U14S |

| Memory | 16GB Crucial Ballistix 3600 MHz DDR4 CAS 16 |

| Video Card(s) | MSI RX 5700XT EVOKE OC |

| Storage | Samsung 970 PRO 512 GB |

| Display(s) | ASUS VA326HR + MSI Optix G24C4 |

| Case | MSI - MAG Forge 100M |

| Power Supply | Aerocool Lux RGB M 650W |

You say that because you assume that the population who voted in this poll is representative of the whole market.

Interesting statistics... about 31% of the user base is willing to wait and see what AMD brings to the table. That means AMD (with a proper new power/performance-optimized arch) can retake its former piece of the market essentially in one generation.

Forget the whole market; the relevant market is those who can buy and run triple-A titles on their PCs. So I'd say people who visit TPU are a good representation of the relevant market for the game industry.

You say that because you assume that the population who voted in this poll is representative of the whole market.

Well, it isn't, there's no way there will be 12% of the market buying the 3090. The sample is pretty far from representative.

| Processor | Intel |

|---|---|

| Motherboard | MSI |

| Cooling | Cooler Master |

| Memory | Corsair |

| Video Card(s) | Nvidia |

| Storage | Western Digital/Kingston |

| Display(s) | Samsung |

| Case | Thermaltake |

| Audio Device(s) | On Board |

| Power Supply | Seasonic |

| Mouse | Glorious |

| Keyboard | UniKey |

| Software | Windows 10 x64 |

Forget the whole market; the relevant market is those who can buy and run triple-A titles on their PCs. So I'd say people who visit TPU are a good representation of the relevant market for the game industry.

12% of those people would like to buy a 3090, not will buy one... but 31% of them are waiting for AMD to show something... big difference.

When you have a duopoly of this kind, things get interesting close to product launches. For example, given what's happening on the CPU front with Intel and AMD, people are expecting something from AMD on the GPU front as well.

| System Name | Home |

|---|---|

| Processor | 5950x |

| Motherboard | Asrock Taichi x370 |

| Cooling | Thermalright True Spirit 140 |

| Memory | Patriot 32gb DDR4 3200mhz |

| Video Card(s) | Gigabyte gaming OC 9070 xt |

| Storage | Too many to count |

| Display(s) | U2518D+u2417h |

| Case | Chieftec |

| Audio Device(s) | onboard |

| Power Supply | seasonic prime 1000W |

| Mouse | Razer Viper |

| Keyboard | Logitech |

| Software | Windows 10 |

This is a tech site; most people coming here are first and foremost hardware geeks, not only gamers. It's a different subculture.

People like you, for whom the price of hardware is irrelevant, are so few that Nvidia and AMD would go bankrupt relying on them; you don't even make up 1%. Nobody spends millions of dollars to make games for the 1%. That's why PC gaming is ridiculously expensive and, in my opinion, not worth it compared to consoles.

| System Name | AlderLake |

|---|---|

| Processor | Intel i7 12700K P-Cores @ 5Ghz |

| Motherboard | Gigabyte Z690 Aorus Master |

| Cooling | Noctua NH-U12A 2 fans + Thermal Grizzly Kryonaut Extreme + 5 case fans |

| Memory | 32GB DDR5 Corsair Dominator Platinum RGB 6000MT/s CL36 |

| Video Card(s) | MSI RTX 2070 Super Gaming X Trio |

| Storage | Samsung 980 Pro 1TB + 970 Evo 500GB + 850 Pro 512GB + 860 Evo 1TB x2 |

| Display(s) | 23.8" Dell S2417DG 165Hz G-Sync 1440p |

| Case | Be quiet! Silent Base 600 - Window |

| Audio Device(s) | Panasonic SA-PMX94 / Realtek onboard + B&O speaker system / Harman Kardon Go + Play / Logitech G533 |

| Power Supply | Seasonic Focus Plus Gold 750W |

| Mouse | Logitech MX Anywhere 2 Laser wireless |

| Keyboard | RAPOO E9270P Black 5GHz wireless |

| Software | Windows 11 |

| Benchmark Scores | Cinebench R23 (Single Core) 1936 @ stock Cinebench R23 (Multi Core) 23006 @ stock |

Funny stuff, that's why I'd like to think of this corner of the interwebz as reasonable... I realize how unreasonable it is to think that, as I couldn't type it with a straight face... but hey, relatively?

Don't forget this legendary poll.