- Joined: Nov 11, 2016
- Messages: 3,068 (1.13/day)

| System Name | The de-ploughminator Mk-II |
|---|---|
| Processor | i7 13700KF |
| Motherboard | MSI Z790 Carbon |
| Cooling | ID-Cooling SE-226-XT + Phanteks T30 |
| Memory | 2x16GB G.Skill DDR5 7200 CL34 |
| Video Card(s) | Asus RTX 4090 TUF |
| Storage | Kingston KC3000 2TB NVMe |
| Display(s) | LG OLED CX 48" |
| Case | Corsair 5000D Air |
| Power Supply | Corsair HX850 |
| Mouse | Razer Viper Ultimate |
| Keyboard | Corsair K75 |
| Software | Windows 11 |

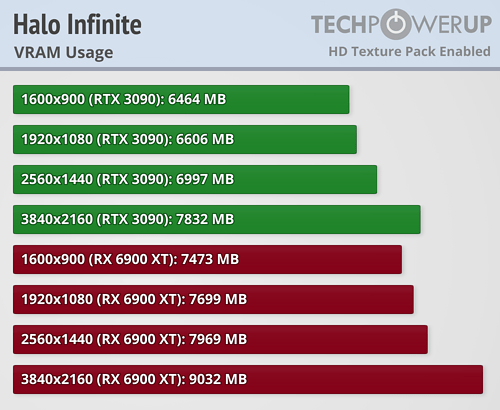

I think this is to show the great difference in texture compression both camps use.

Nvidia skimps on texture quality. Hence why AMD always looks better at native.

Care to take a professional camera and record gameplay to back up your claim? Here is an example of how to do it.

I honestly thought that if a game at, for example, 1920x1080 uses 7GB of VRAM (random values for this example), it would use 7GB of VRAM whether an Nvidia GPU or an AMD GPU is installed. But this belief looks wrong to me now!
