It's possible, but not at those clocks. Here's the thing: these companies advertise up to X% performance gains or Y% efficiency gains, but never, or very rarely, both at the same time. Do you remember Apple's chips doing turbo? And that's why they're so efficient.

AMD Ryzen 9 7950X

- Thread starter W1zzard

- Start date

- Joined

- Jun 14, 2020

- Messages

- 5,057 (2.82/day)

| System Name | Mean machine |

|---|---|

| Processor | AMD 6900HS |

| Memory | 2x16 GB 4800C40 |

| Video Card(s) | AMD Radeon 6700S |

I never said productivity, I said heavy multithreading workloads.

How on earth is Cinebench a 'productivity workload' or even a workload? Come back to the real world and read the review, Wizzard did all the tests for no reason it seems...

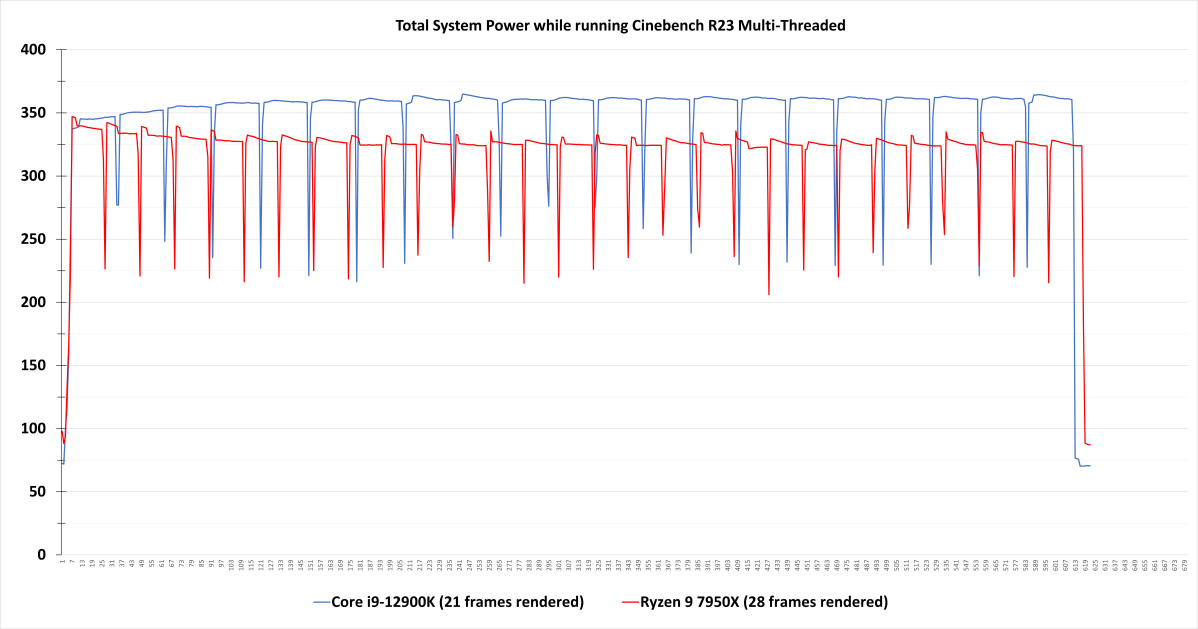

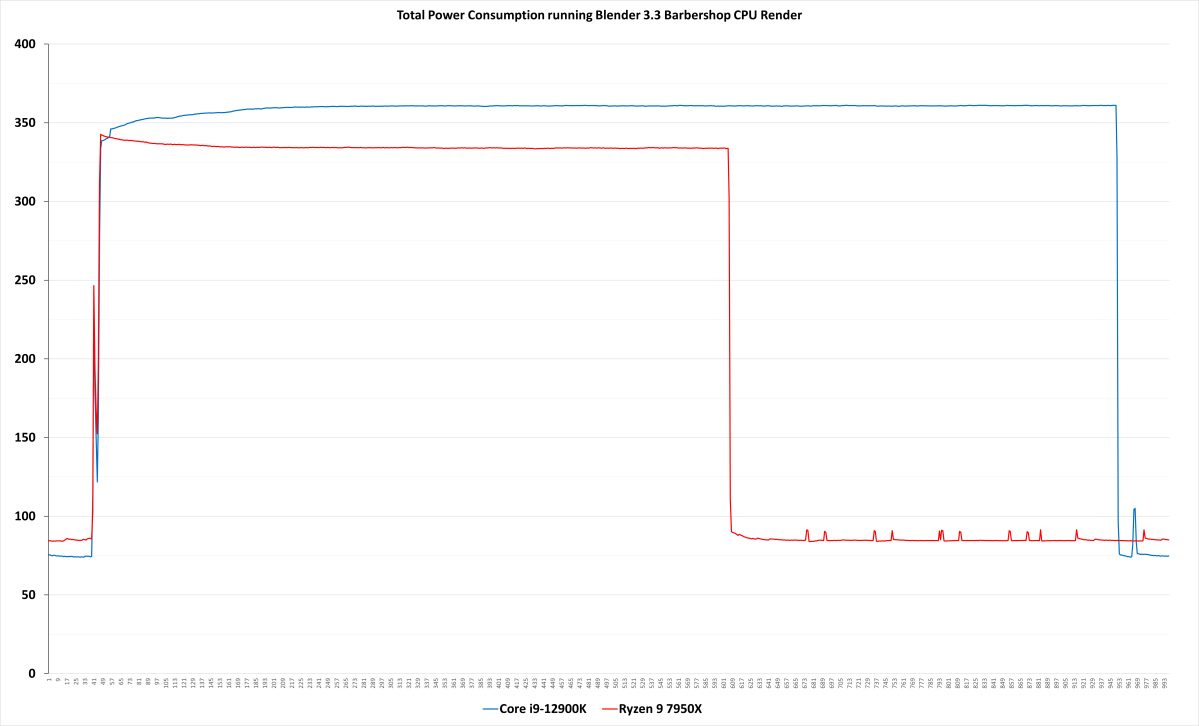

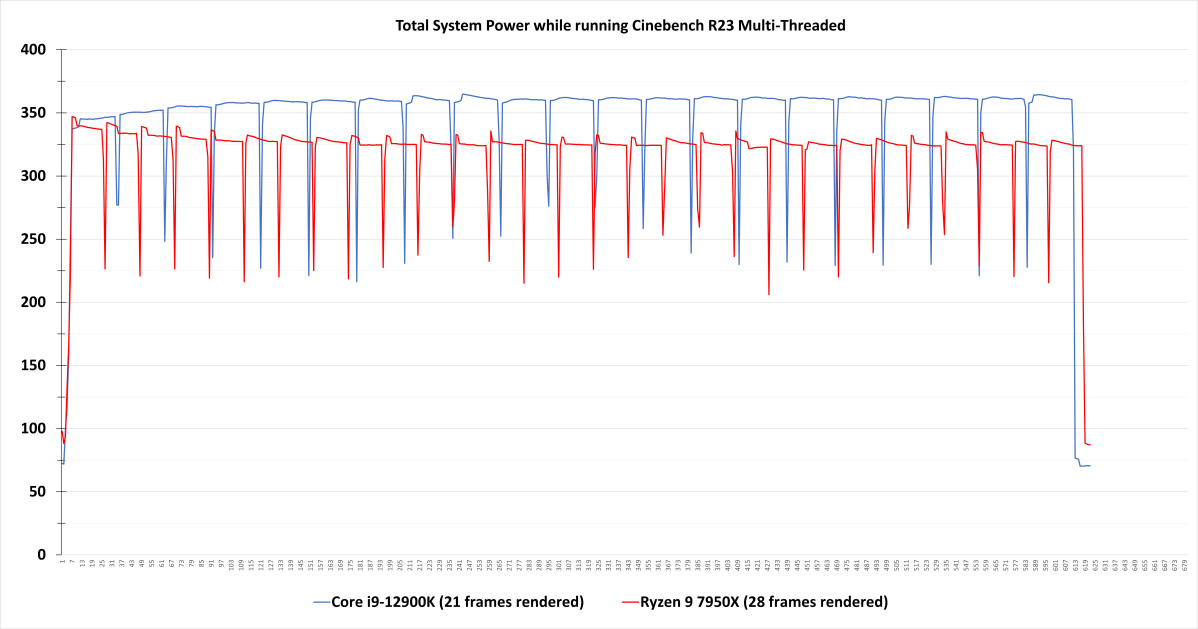

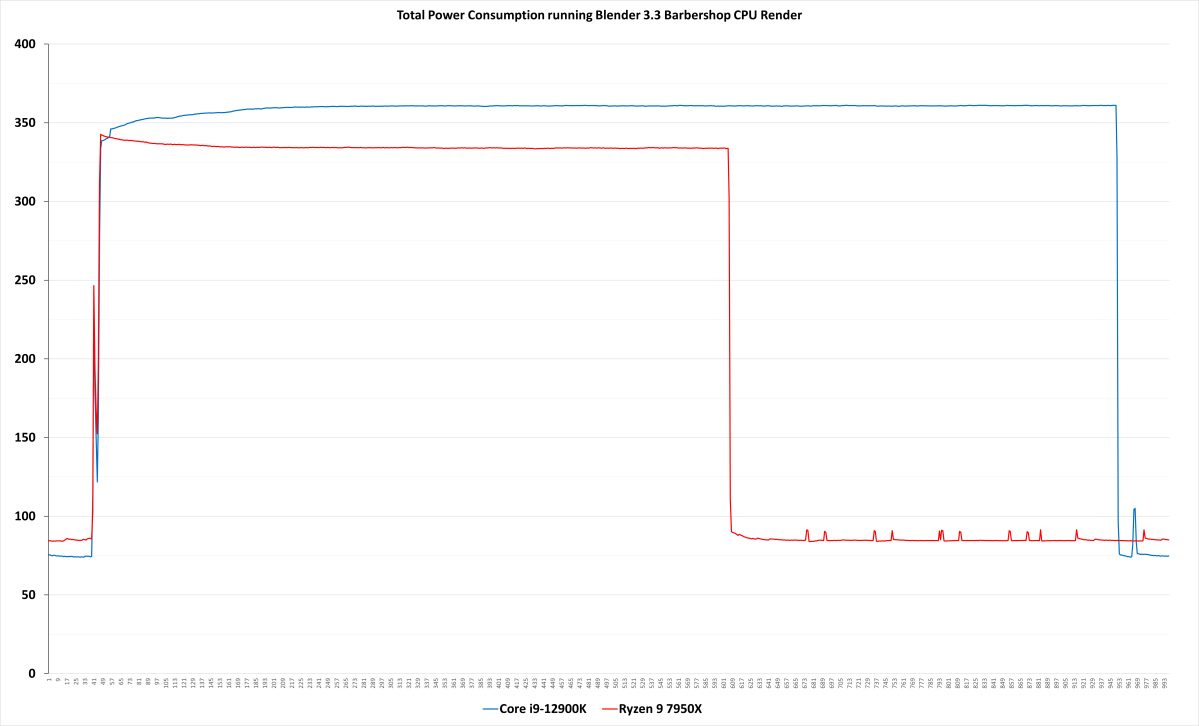

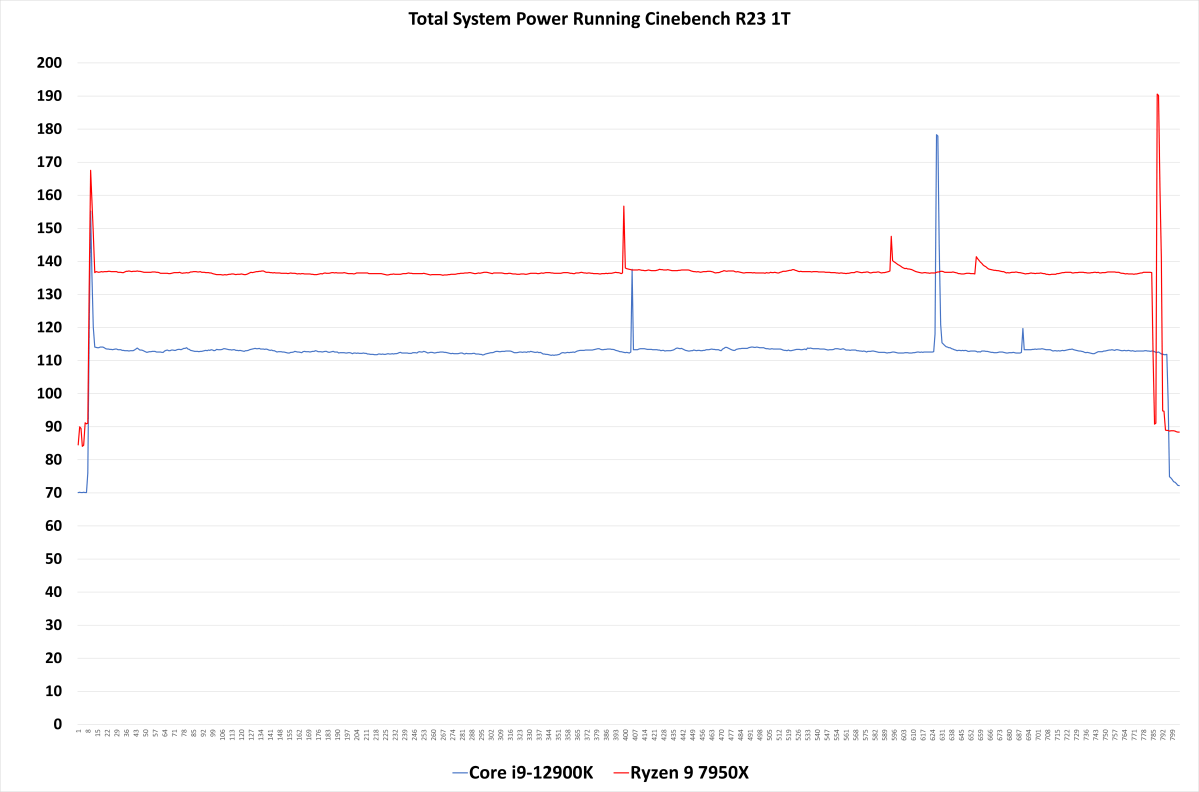

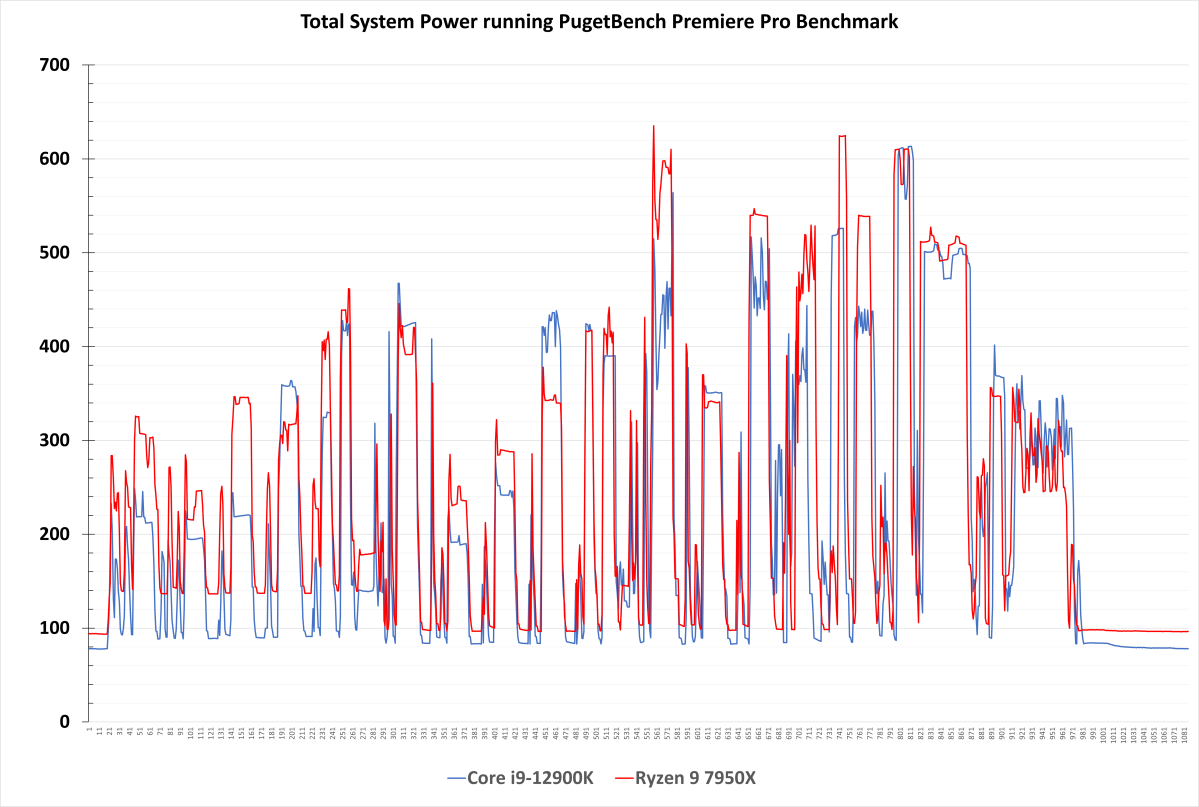

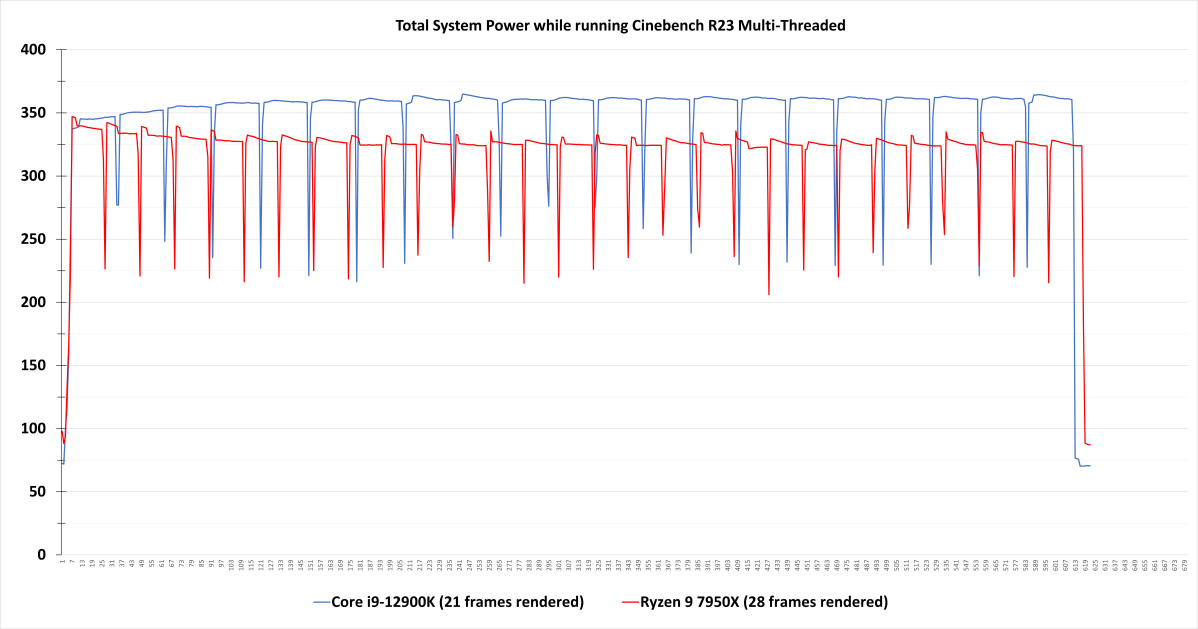

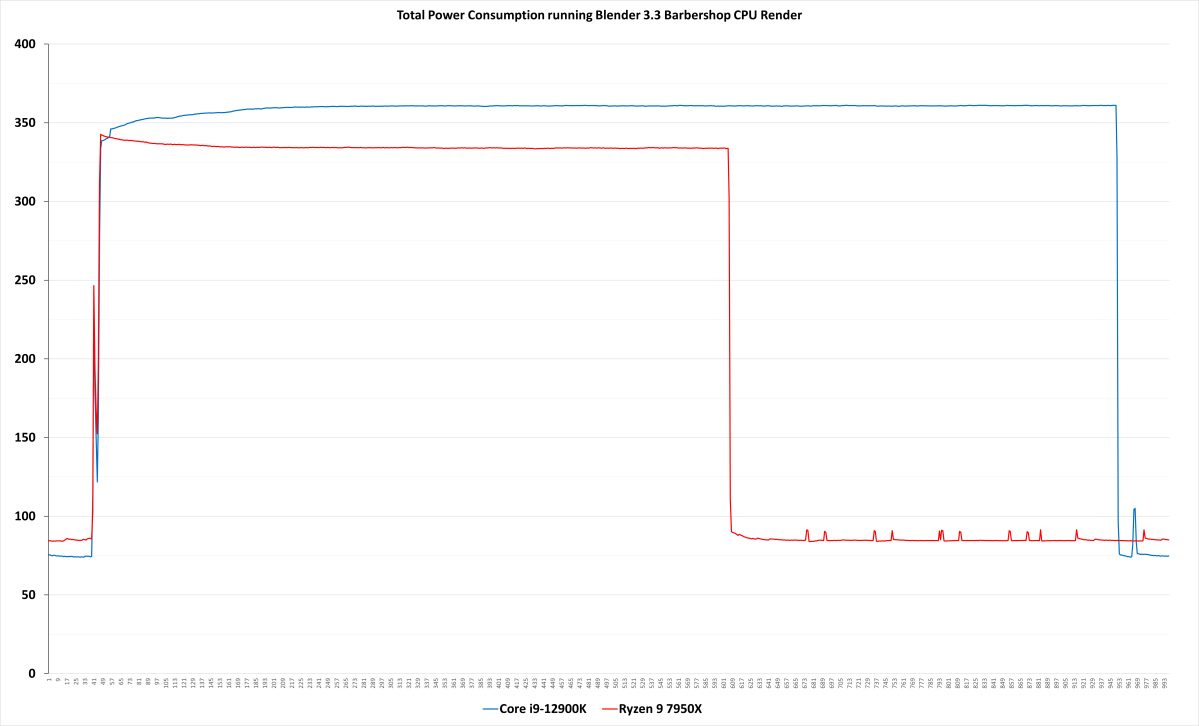

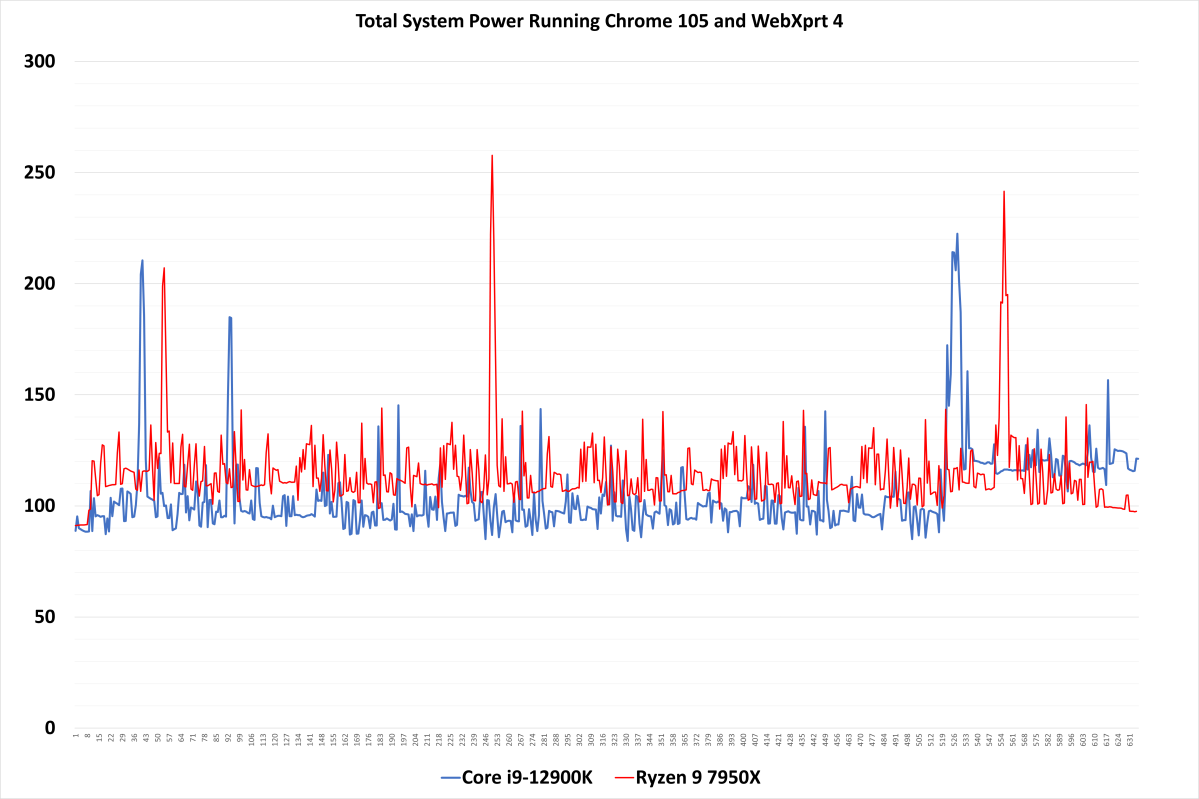

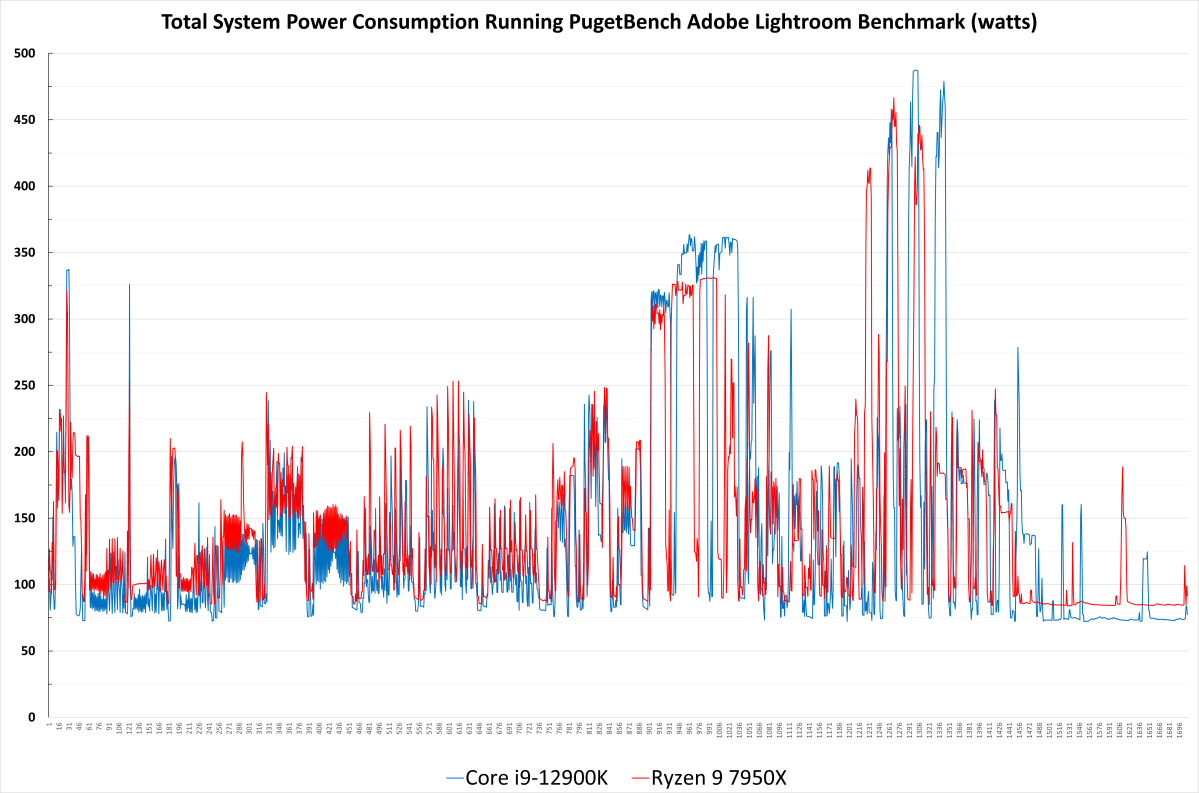

Look at the above. In Blender, the 7950X draws 235W, the 12900K draws 257W. The 13900K has 8 more cores than the 12900K and is clocked even further past the limit on the same aging 10nm process node. It will offer around the same performance as the 7950X in productivity apps on average yet the power draw is going to be what, 300W+? For the CPU alone, that is rather obscene.

No, the 13900k won't draw 300w. It has a limit at 250, and probably a PL1 of 125, meaning after the 56s of TAU it WILL be more efficient than the 7950x, just like the 12900k is.

Yes, exactly because of those extra 8 E-cores and better binning it will absolutely be more efficient than the 12900k. Also it will absolutely be way easier to cool since it's a bigger die. The official power draw of the 13900k is 125 / 253, meaning it will boost to 253w for 56 seconds and then drop down to 125, in which case it will be insanely more efficient than a stock 7950x. Guaranteed.

You have a serious habit of embellishing facts, with 8 more E cores, higher clocks & possibly higher temps (leakage) you think 13900k will be more efficient? And where are you pulling that 245W number from?

That's not how stock 12900k performs & you know it ~

So can we stop this insinuation that it doesn't run at full turbo by default? Whether by design or Intel allowing their motherboards to basically ignore all power limits

At stock IMO 13900k will at best match 12900k's efficiency, of course it will depend on the task as well but I don't really see it being that much better. What is more likely though that it will be less efficient at those higher default clocks.

- Joined

- May 14, 2004

- Messages

- 28,709 (3.74/day)

| Processor | Ryzen 7 5700X |

|---|---|

| Memory | 48 GB |

| Video Card(s) | RTX 4080 |

| Storage | 2x HDD RAID 1, 3x M.2 NVMe |

| Display(s) | 30" 2560x1600 + 19" 1280x1024 |

| Software | Windows 10 64-bit |

No worries, it didn't come across like that, I still felt I could add more context.

I wasn't trying to criticize yours or anyone else's coverage of these CPUs, I was just surprised more outlets didn't validate AMD's claims of performance at lower wattages. Thanks for clarifying it isn't a simple toggle; that's definitely something AMD should add.

AMD said that they will add it as a toggleable option to AGESA, the way you would expect it to work.

- Joined

- May 2, 2017

- Messages

- 7,762 (2.65/day)

- Location

- Back in Norway

| System Name | Hotbox |

|---|---|

| Processor | AMD Ryzen 7 5800X, 110/95/110, PBO +150Mhz, CO -7,-7,-20(x6), |

| Motherboard | ASRock Phantom Gaming B550 ITX/ax |

| Cooling | LOBO + Laing DDC 1T Plus PWM + Corsair XR5 280mm + 2x Arctic P14 |

| Memory | 32GB G.Skill FlareX 3200c14 @3800c15 |

| Video Card(s) | PowerColor Radeon 6900XT Liquid Devil Ultimate, UC@2250MHz max @~200W |

| Storage | 2TB Adata SX8200 Pro |

| Display(s) | Dell U2711 main, AOC 24P2C secondary |

| Case | SSUPD Meshlicious |

| Audio Device(s) | Optoma Nuforce μDAC 3 |

| Power Supply | Corsair SF750 Platinum |

| Mouse | Logitech G603 |

| Keyboard | Keychron K3/Cooler Master MasterKeys Pro M w/DSA profile caps |

| Software | Windows 10 Pro |

It literally does.

Power draw doesn't automatically mean heat.

CPUs do not break the laws of thermodynamics. All electric energy put into the chip is converted to thermal energy while performing said work (with the exception of the small amount of energy leaving the package still in the form of electric energy through various I/O). Your X watts of electric energy in means X watts of thermal energy out. Your presentation of CPU efficiency here is very fundamentally wrong - it is "how much work is performed per unit of energy", not "which proportion of energy is useful and which is wasted".

The missing thing here is the efficiency, which tells us what part of the consumed energy went for useful work and what as heat
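To make the "work performed per unit of energy" definition concrete, here is a minimal Python sketch. The function names and all the numbers are made up for illustration; they are not taken from the review.

```python
def energy_joules(avg_power_w: float, seconds: float) -> float:
    """Energy consumed = average power x run time."""
    return avg_power_w * seconds


def work_per_kilojoule(work_units: float, avg_power_w: float, seconds: float) -> float:
    """Efficiency as 'work done per unit of energy' (here: work units per kJ)."""
    return work_units / (energy_joules(avg_power_w, seconds) / 1000.0)


# Hypothetical numbers, NOT measurements: the same render job finished by
# two CPUs at different power draws and run times.
cpu_a = work_per_kilojoule(work_units=1.0, avg_power_w=200.0, seconds=100.0)
cpu_b = work_per_kilojoule(work_units=1.0, avg_power_w=120.0, seconds=150.0)
print(f"CPU A: {cpu_a:.4f} renders/kJ, CPU B: {cpu_b:.4f} renders/kJ")
```

In this made-up case the slower chip comes out more efficient because it uses less total energy for the same job. Nearly all of that energy still ends up as heat in both cases.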

- Joined

- Jul 19, 2016

- Messages

- 485 (0.15/day)

Yes, exactly because of those extra 8 E-cores and better binning it will absolutely be more efficient than the 12900k. Also it will absolutely be way easier to cool since it's a bigger die. The official power draw of the 13900k is 125 / 253, meaning it will boost to 253w for 56 seconds and then drop down to 125, in which case it will be insanely more efficient than a stock 7950x. Guaranteed.

This is all totally wrong, going by how the 12900K behaves. Please stop.

- Joined

- Sep 2, 2022

- Messages

- 93 (0.09/day)

- Location

- Italy

| Processor | AMD Ryzen 9 5900X |

|---|---|

| Motherboard | ASUS TUF Gaming B550-PLUS |

| Memory | Corsair Vengeance LPX DDR4 4x8GB |

| Video Card(s) | Gigabyte GTX 1070 TI 8GB |

| Storage | NVME+SSD+HDD |

| Display(s) | Benq GL2480 24" 1080p 75 Hz |

| Power Supply | Seasonic M12II 520W |

| Mouse | Logitech G400 |

| Software | Windows 11 IOT LTSC |

It's cool that they included the option to switch to an ECO mode. This probably reduces the base cpu frequency in the heavy multithreading loads, like in rendering.

The great performance even in these modes is given by the single thread performance of the single core and by the core counts. It's a beast.

- Joined

- Jun 14, 2020

- Messages

- 5,057 (2.82/day)

| System Name | Mean machine |

|---|---|

| Processor | AMD 6900HS |

| Memory | 2x16 GB 4800C40 |

| Video Card(s) | AMD Radeon 6700S |

I don't care about motherboard defaults, motherboards might run them at 999 watts by default, that's up to the mobo manufacturers. The Intel website lists the 12900k and the 13900k at 125 / 241w and 125 / 253w respectively.

That's not how stock 12900k performs & you know it ~

So can we stop this insinuation that it doesn't run at full turbo by default? Whether by design or Intel allowing their motherboards to basically ignore all power limits

At stock IMO 13900k will at best match 12900k's efficiency, of course it will depend on the task as well but I don't really see it being that much better. What is more likely though that it will be less efficient at those higher default clocks.

Regardless of that, even at full TAU numbers the 13900k will obviously be way more efficient than the 12900k, lol.

Are you saying Intel has wrong info on their website about their own CPUs? LOLK

This is all totally wrong, going by how the 12900K behaves. Please stop.

So you claimed it goes down to 125W "TDP" after 56s & when you saw that's not the case you're trying the other obfuscation/deflection route?

Yeah no, not happening at those clocks unless Intel strictly restricts "TDP" to reasonable numbers!

Regardless of that, even at full TAU numbers the 13900k will obviously be way more efficient than the 12900k, lol.

What's ironic is that if they did limit "TDP" like in the past both the chips, ADL & likely RPL on unlimited turbo, would be a bit to a lot more efficient overall.

- Joined

- Jun 14, 2020

- Messages

- 5,057 (2.82/day)

| System Name | Mean machine |

|---|---|

| Processor | AMD 6900HS |

| Memory | 2x16 GB 4800C40 |

| Video Card(s) | AMD Radeon 6700S |

I didn't claim anything, Intel claims it in their official 12900k webpage. What the heck are you talking about, really?

So you claimed it goes down to 125W "TDP" after 56s & when you saw that's not the case you're trying the other obfuscation/deflection route?

Yeah no, not happening at those clocks unless Intel strictly restricts "TDP" to reasonable numbers!

What's ironic is that if they did limit "TDP" like in the past both the chips, ADL & likely RPL on unlimited turbo, would be a bit to a lot more efficient overall.

All I'm saying is that even with unlimited TAU, the 13900k will be way more efficient than the 12900k. It's way better binned and has more cores, so it's a no-brainer that efficiency will go up.

- Joined

- Sep 2, 2022

- Messages

- 93 (0.09/day)

- Location

- Italy

| Processor | AMD Ryzen 9 5900X |

|---|---|

| Motherboard | ASUS TUF Gaming B550-PLUS |

| Memory | Corsair Vengeance LPX DDR4 4x8GB |

| Video Card(s) | Gigabyte GTX 1070 TI 8GB |

| Storage | NVME+SSD+HDD |

| Display(s) | Benq GL2480 24" 1080p 75 Hz |

| Power Supply | Seasonic M12II 520W |

| Mouse | Logitech G400 |

| Software | Windows 11 IOT LTSC |

@W1zzard

Don't know if it's possible with these CPUs, because of their architecture, but can you try to overclock the boost/turbo of just 1 or 2 cores and reduce their base frequency for when more cores are used? Would it be possible to get close to 6 GHz this way?

Just asking, I never tried a Ryzen, so I don't know if it's possible to overclock things the same way as something like the FX or the i7-5960X.

- Joined

- May 14, 2004

- Messages

- 28,709 (3.74/day)

| Processor | Ryzen 7 5700X |

|---|---|

| Memory | 48 GB |

| Video Card(s) | RTX 4080 |

| Storage | 2x HDD RAID 1, 3x M.2 NVMe |

| Display(s) | 30" 2560x1600 + 19" 1280x1024 |

| Software | Windows 10 64-bit |

OC doesn't work like that on Ryzen. You can either do all-core fixed OC or use PBO and push up the clocks PBO selects, and possibly push down the voltage at the same time for more headroom.

@W1zzard

Don't know if it's possible with these CPUs, because of their architecture, but can you try to overclock the boost/turbo of just 1 or 2 cores and reduce their base frequency for when more cores are used? Would it be possible to get close to 6 GHz this way?

Just asking, I never tried a Ryzen, so I don't know if it's possible to overclock things the same way as something like the FX or the i7-5960X.

No way to get 6 GHz on air I'd say. 5.4, 5.5 maybe, but not significantly higher

And how do you know it's better binned? And binned for what exactly? Did you forget what binning does ~

I didn't claim anything, Intel claims it in their official 12900k webpage. What the heck are you talking about, really?

All im saying is that even with unlimited TAU, the 13900k will be way more efficient than the 12900k. It's way better binned and has more cores, it's a no brainer that efficiency will go up.

Explainer: What is Chip Binning?

You bought a new CPU and it seems to run cool, so you try a bit of overclocking. The GHz climb higher. Did you hit the silicon...

www.techspot.com

- Joined

- May 14, 2004

- Messages

- 28,709 (3.74/day)

| Processor | Ryzen 7 5700X |

|---|---|

| Memory | 48 GB |

| Video Card(s) | RTX 4080 |

| Storage | 2x HDD RAID 1, 3x M.2 NVMe |

| Display(s) | 30" 2560x1600 + 19" 1280x1024 |

| Software | Windows 10 64-bit |

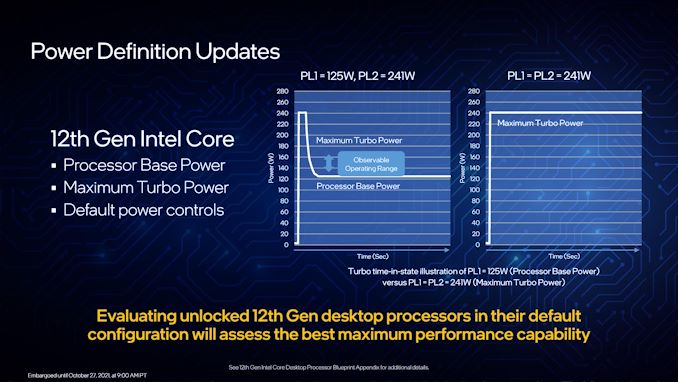

Intel is full of bs for 12900K power. The chips are configured to run PL1=PL2=241 W, so they will never go down to 125 W. What Intel means with the 125 W number is some vague "typical average in some non-boosted workload [that we don't disclose and that magically hits that magic 125 W number]"

I didn't claim anything, Intel claims it in their official 12900k webpage

- Joined

- Jun 22, 2012

- Messages

- 330 (0.07/day)

| Processor | Intel i7-12700K |

|---|---|

| Motherboard | MSI PRO Z690-A WIFI |

| Cooling | Noctua NH-D15S |

| Memory | Corsair Vengeance 4x16 GB (64GB) DDR4-3600 C18 |

| Video Card(s) | MSI GeForce RTX 3090 GAMING X TRIO 24G |

| Storage | Samsung 980 Pro 1TB, SK hynix Platinum P41 2TB |

| Case | Fractal Define C |

| Power Supply | Corsair RM850x |

| Mouse | Logitech G203 |

| Software | openSUSE Tumbleweed |

Intel is full of bs for 12900K power. The chips are configured to run PL1=PL2=241 W, so they will never go down to 125 W. What Intel means with the 125 W number is some vague "typical average in some non-boosted workload [that we don't disclose and that magically hits that magic 125 W number]"

Intel Turbo limits are entirely up to the motherboard manufacturers/integrators. There are Intel-defined recommendations which include for the i9-12900K PL2=241W, PL1=125W, Tau=56s, but these limits are not enforced nor meant to be strictly followed and the notes in their datasheets make this clear.

Please have a look at sections 4.1 and 4.2 under chapter 4 "Thermal Management" here in the 12th gen datasheet: https://cdrdv2.intel.com/v1/dl/getcontent/655258

I wonder if the upcoming one for 13th gen Intel CPUs will change anything in this regard; it should be out soon given that the i9-13900K/KF is already on the Intel Ark database.
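For readers following the PL1/PL2/Tau argument, here is a rough Python sketch of what the recommended limits would mean if a board enforced them. It is a deliberately simplified step model (PL2 inside the Tau window, PL1 after it); actual boards track a running average of package power, so treat this as an illustration only. The class and function names are invented for the example, and the limit values are the ones quoted in this thread.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TurboLimits:
    pl1_w: float  # sustained power limit
    pl2_w: float  # short-term boost power limit
    tau_s: float  # nominal boost window in seconds


def allowed_power(limits: TurboLimits, seconds_under_load: float) -> float:
    """Simplified step model: PL2 during the Tau window, PL1 afterwards."""
    if seconds_under_load < limits.tau_s:
        return limits.pl2_w
    return limits.pl1_w


def average_power(limits: TurboLimits, duration_s: int) -> float:
    """Average allowed power over a sustained load, sampled once per second."""
    total = sum(allowed_power(limits, t) for t in range(duration_s))
    return total / duration_s


# Values quoted in the thread for the i9-12900K.
recommended = TurboLimits(pl1_w=125.0, pl2_w=241.0, tau_s=56.0)
# The PL1=PL2 configuration described for the review setup.
pl1_equals_pl2 = TurboLimits(pl1_w=241.0, pl2_w=241.0, tau_s=56.0)

for name, cfg in (("recommended", recommended), ("PL1=PL2", pl1_equals_pl2)):
    print(name, round(average_power(cfg, 600), 1), "W average over 10 minutes")
```

The point of the comparison: with PL1 raised to match PL2, the Tau window stops mattering and the chip never drops to 125 W, which is what the disagreement above is about.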

- Joined

- Jun 14, 2020

- Messages

- 5,057 (2.82/day)

| System Name | Mean machine |

|---|---|

| Processor | AMD 6900HS |

| Memory | 2x16 GB 4800C40 |

| Video Card(s) | AMD Radeon 6700S |

You can tell by the freaking clocks, lol. Binning is the wrong word, so my bad. I should have said more mature process = better clocks at same voltage. Better now?

And how do you know it's better binned? And binned for what exactly? Did you forget what binning does ~

So better binned == much better efficiency? Should I quote you on that?

Explainer: What is Chip Binning?

You bought a new CPU and it seems to run cool, so you try a bit of overclocking. The GHz climb higher. Did you hit the silicon...

www.techspot.com

I've seen mobos automatically place 125w PL1 limits, for example both my Unify-X and my Apex do that when you choose you are running on an air cooler (which I am). Other than that, yeah, for Alder Lake and onwards it seems to be up to the OEMs and mobo manufacturers to enforce the 125/241 limits, but still they are the official recommendations, I think.

Intel is full of bs for 12900K power. The chips are configured to run PL1=PL2=241 W, so they will never go down to 125 W. What Intel means with the 125 W number is some vague "typical average in some non-boosted workload [that we don't disclose and that magically hits that magic 125 W number]"

Btw, any chance you'll redo the 12900k power limited review you did 10 months ago? Your numbers are flawed beyond belief.

Was telling people and they called me crazy.

We've been over this ~

Intel Turbo limits are entirely up to the motherboard manufacturers/integrators. There are Intel-defined recommendations which include for the i9-12900K PL2=241W, PL1=125W, Tau=56s, but these limits are not enforced nor meant to be strictly followed and the notes in their datasheets make this clear.

Please have a look at sections 4.1 and 4.2 under chapter 4 "Thermal Management" here in the 12th gen datasheet: https://cdrdv2.intel.com/v1/dl/getcontent/655258

I wonder if the upcoming one for 13th gen Intel CPUs will change anything in this regard; it should be out soon given that the i9-13900K/KF is already on the Intel Ark database.

It is basically Intel sanctioned, if Intel can retroactively patch non Z OCing a year after their previous gen boards released you think they can't shut this down?

There's basically only two ways to make efficient processors, on the same node, these days ~

You can tell by the freaking clocks, lol. Binning is the wrong word, so my bad. I should have said more mature process = better clocks at same voltage. Better now?

Higher IPC or much better/tighter power management. The corollary to that is if you increase clocks further, with higher IPC, all that efficiency goes down the drain! You saw that with zen4, how much do you think RPL will be more efficient than ADL at stock? Take a wild guess?
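The "push clocks further and efficiency goes down the drain" point can be sketched with the textbook dynamic power relation, P ≈ C·V²·f. The Python below is a toy model only: the linear voltage/frequency curve and the scaling constant are invented for illustration and do not describe any real chip, and static/leakage power is ignored.

```python
def voltage_for(freq_ghz: float) -> float:
    """Hypothetical linear V/f curve; real curves are chip-specific."""
    return 0.70 + 0.12 * freq_ghz


def dynamic_power_w(freq_ghz: float, c_eff: float = 20.0) -> float:
    """Dynamic power ~ C * V^2 * f (c_eff is an arbitrary scaling constant)."""
    v = voltage_for(freq_ghz)
    return c_eff * v * v * freq_ghz


def perf_per_watt(freq_ghz: float) -> float:
    """Treat performance as proportional to clock speed (fixed IPC)."""
    return freq_ghz / dynamic_power_w(freq_ghz)


for f in (4.0, 4.5, 5.0, 5.5):
    print(f"{f:.1f} GHz: {dynamic_power_w(f):6.1f} W, {perf_per_watt(f):.4f} perf/W")
```

Because voltage has to rise with frequency, power grows faster than performance in this model, so perf/W falls as clocks go up. How steep the drop is on real silicon depends on the actual V/f curve.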

Wrong! This would actually break thermodynamics. X watts electric cannot be equal to Y watts thermal output, and at the same time you'd get tons of rendering (for example) be done on the CPU!

It literally does.

CPUs do not break the laws of thermodynamics. All electric energy put into the chip is converted to thermal energy while performing said work (with the exception of the small amount of energy leaving the package still in the form of electric energy through various I/O). Your X watts of electric energy in means X watts of thermal energy out. Your presentation of CPU efficiency here is very fundamentally wrong - it is "how much work is performed per unit of energy", not "which proportion of energy is useful and which is wasted".

- Joined

- Jun 14, 2020

- Messages

- 5,057 (2.82/day)

| System Name | Mean machine |

|---|---|

| Processor | AMD 6900HS |

| Memory | 2x16 GB 4800C40 |

| Video Card(s) | AMD Radeon 6700S |

At stock, meaning 240w on 12900k vs 253 on raptorlake? A lot, like 25% minimum.

There's basically only two ways to make efficient processors, on the same node, these days ~

Higher IPC or much better/tighter power management. The corollary to that is if you increase clocks further, with higher IPC, all that efficiency goes down the drain! You saw that with zen4, how much do you think RPL will be more efficient than ADL at stock? Take a wild guess?

- Joined

- Jun 14, 2020

- Messages

- 5,057 (2.82/day)

| System Name | Mean machine |

|---|---|

| Processor | AMD 6900HS |

| Memory | 2x16 GB 4800C40 |

| Video Card(s) | AMD Radeon 6700S |

I don't understand how you expect similar efficiency when adding 8 cores but w/e, we will see. I'm willing to bet a paycheck or two.

We'll see. I think you're a fair bit off, probably a month to go now before first reviews leak?

Yeah, in about a month the reviews will be out

- Joined

- Aug 21, 2015

- Messages

- 1,875 (0.53/day)

- Location

- North Dakota

| System Name | Office |

|---|---|

| Processor | Core i7 10700K |

| Motherboard | Gigabyte Z590 Aorus Ultra |

| Cooling | be quiet! Shadow Rock LP |

| Memory | 16GB Patriot Viper Steel DDR4-3200 |

| Video Card(s) | Intel ARC A750 |

| Storage | PNY CS1030 250GB, Crucial MX500 2TB |

| Display(s) | Dell S2719DGF |

| Case | Fractal Define 7 Compact |

| Power Supply | EVGA 550 G3 |

| Mouse | Logitech M705 Marathon |

| Keyboard | Logitech G410 |

| Software | Windows 10 Pro 22H2 |

Wrong! This would actually break thermodynamics. X watts electric cannot be equal to Y watts thermal output, and at the same time you'd get tons of rendering (for example) be done on the CPU!

In terms of energy, rendering (or other computing workload) is a side effect of the work done, not the work itself. Work in the physics sense is energy changing from one form to another. All the electrical energy that goes into a computer has to come out in another form to "do" anything; that is, perform work. That ends up being kinetic energy in the fans and the air they're moving (we can consider the sound generated by fans and HDDs as kinetic, but there's hardly enough of that to matter), a small amount of EM radiation from the various electrical components, a few milliwatts as signaling to your monitor or whatever, and maybe another mW or three as light from LEDs. The rest becomes heat. Those are the only options. Unless you've got some weird custom setup with a rechargeable battery, none of the energy becomes chemical, and if you're turning a bunch of energy into light, something has gone VERY wrong.

Long story short: >95% (est) of the electricity drawn by a PC becomes heat.
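The bookkeeping behind that estimate can be sketched in a few lines of Python. Every wattage below is a hypothetical placeholder chosen only to show the arithmetic; none of it is a measurement, and the function name is invented for the example.

```python
def heat_fraction(total_draw_w: float, non_heat_outputs_w: dict[str, float]) -> float:
    """Share of electrical input that leaves the system directly as heat."""
    other = sum(non_heat_outputs_w.values())
    if other > total_draw_w:
        raise ValueError("non-heat outputs cannot exceed total draw")
    return (total_draw_w - other) / total_draw_w


# Hypothetical placeholder values in watts, for illustration only.
non_heat = {
    "fan airflow and sound": 5.0,
    "EM radiation": 0.5,
    "display signaling": 0.01,
    "LED light": 0.003,
}
share = heat_fraction(total_draw_w=400.0, non_heat_outputs_w=non_heat)
print(f"{share:.1%} of the input becomes heat directly")
```

With placeholder values of this size the heat share lands well above the 95% mark, and most of the remaining outputs (moving air, light) are absorbed by the surroundings as heat shortly afterwards anyway.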

- Joined

- Apr 1, 2008

- Messages

- 4,696 (0.75/day)

- Location

- Portugal

| System Name | HTC's System |

|---|---|

| Processor | Ryzen 5 5800X3D |

| Motherboard | Asrock Taichi X370 |

| Cooling | NH-C14, with the AM4 mounting kit |

| Memory | G.Skill Kit 16GB DDR4 F4 - 3200 C16D - 16 GTZB |

| Video Card(s) | Sapphire Pulse 6600 8 GB |

| Storage | 1 Samsung NVMe 960 EVO 250 GB + 1 3.5" Seagate IronWolf Pro 6TB 7200RPM 256MB SATA III |

| Display(s) | LG 27UD58 |

| Case | Fractal Design Define R6 USB-C |

| Audio Device(s) | Onboard |

| Power Supply | Corsair TX 850M 80+ Gold |

| Mouse | Razer Deathadder Elite |

| Software | Ubuntu 20.04.6 LTS |

Regarding efficiency, @W1zzard :

If you stick "a worse cooler", while it should end up having the same 95 °C temps, it will also boost to lower speeds, thus requiring less power but more time for the various benches, possibly less FPS in the various games, and also better power efficiency.

OTOH, if you stick "a better cooler", while it should end up having the same 95 °C temps, it might also boost to higher speeds, thus requiring more power but less time for the various benches, possibly more FPS in the various games, and also worse power efficiency.

Wouldn't it be better to say so, wherever applicable in the review?

Also, and I'm not sure if all boards have this option in BIOS, it's apparently possible to change the value of the 95 °C max temp for the CPU. If it's changed to a lower temp like, say, 88 °C, all the scores will be affected by the fact that the CPU, instead of ramping up as much as it can with a temp target of 95 °C, will "only" ramp up as much as it can with a temp target of 88 °C, with the power and efficiency changes, and performance drop, that entails.

- Joined

- May 2, 2017

- Messages

- 7,762 (2.65/day)

- Location

- Back in Norway

| System Name | Hotbox |

|---|---|

| Processor | AMD Ryzen 7 5800X, 110/95/110, PBO +150Mhz, CO -7,-7,-20(x6), |

| Motherboard | ASRock Phantom Gaming B550 ITX/ax |

| Cooling | LOBO + Laing DDC 1T Plus PWM + Corsair XR5 280mm + 2x Arctic P14 |

| Memory | 32GB G.Skill FlareX 3200c14 @3800c15 |

| Video Card(s) | PowerColor Radeon 6900XT Liquid Devil Ultimate, UC@2250MHz max @~200W |

| Storage | 2TB Adata SX8200 Pro |

| Display(s) | Dell U2711 main, AOC 24P2C secondary |

| Case | SSUPD Meshlicious |

| Audio Device(s) | Optoma Nuforce μDAC 3 |

| Power Supply | Corsair SF750 Platinum |

| Mouse | Logitech G603 |

| Keyboard | Keychron K3/Cooler Master MasterKeys Pro M w/DSA profile caps |

| Software | Windows 10 Pro |

So ... uh ... work is not energy. Energy is used to do work - to convert energy from one form to another. That is how that rendering (for example) gets done. In the process of doing work, electrical energy is converted to heat energy, which is a lower form of energy than electricity. Take a light bulb: electric energy is used to trigger some form of light-generating process (incandescent, LED, whatever). Some portion of said electrical energy is converted to electromagnetic radiation in the visible light spectrum. Some portion of it is turned directly into heat. As that visible light is spread out and hits other things, eventually it will all be absorbed by surrounding objects, and, again, turn into heat. CPUs do not give off light, or any other meaningful form of energy. As I said, some negligible amount of electrical energy is kept as that and transferred off-die for I/O - writing to RAM or storage, transferring data over PCIe, etc. This amount in watts is tiny compared to the heat output of the CPU.

Wrong! This would actually break thermodynamics. X watts electric cannot be equal to Y watts thermal output, and at the same time you'd get tons of rendering (for example) be done on the CPU!

Exactly. We've essentially found a bunch of smart ways of converting energy from one form into another that leave us with really useful byproducts. Like converting fuel to heat, while leaving us with rapid movement or cooked food; or converting electricity to heat and leaving us with lights, warm (or cool) houses, and RGB. Lots of RGB.

In terms of energy, rendering (or other computing workload) is a side effect of the work done, not the work itself. Work in the physics sense is energy changing from one form to another. All the electrical energy that goes into a computer has to come out in another form to "do" anything; that is, perform work. That ends up being kinetic energy in the fans and the air they're moving (we can consider the sound generated by fans and HDDs as kinetic, but there's hardly enough of that to matter), a small amount of EM radiation from the various electrical components, a few milliwatts as signaling to your monitor or whatever, and maybe another mW or three as light from LEDs. The rest becomes heat. Those are the only options. Unless you've got some weird custom setup with a rechargeable battery, none of the energy becomes chemical, and if you're turning a bunch of energy into light, something has gone VERY wrong.

Long story short: >95% (est) of the electricity drawn by a PC becomes heat.
