It might actually be worse than 20% more power for 5% more performance.

Whether the actual figure is 20%, 22%, or 26.73% isn't important.

The point is that overclocking silicon is a poor strategy from a performance-per-watt perspective, especially for a sustained workload. In the context of this particular thread, you should be looking for a peak load that sits near the top of the performance-per-watt curve.
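A quick sketch of the arithmetic behind the "20% power for 5% performance" figure. The function names and the 300 W, 8-hours-a-day workload are hypothetical illustration values, not measurements from this thread.

```python
def perf_per_watt_change(perf_gain: float, power_gain: float) -> float:
    """Fractional change in performance-per-watt.

    perf_gain and power_gain are fractional increases, e.g. 0.05 for +5%.
    A negative result means the card got less efficient.
    """
    return (1 + perf_gain) / (1 + power_gain) - 1


def extra_energy_kwh(base_watts: float, power_gain: float, hours: float) -> float:
    """Extra energy in kWh from drawing (1 + power_gain) * base_watts for `hours`."""
    return base_watts * power_gain * hours / 1000


if __name__ == "__main__":
    # Rough figure from the thread: +20% power for +5% performance.
    change = perf_per_watt_change(0.05, 0.20)
    print(f"perf/W change: {change:+.1%}")
    # Hypothetical sustained workload: a 300 W card, 8 hours a day for 30 days.
    print(f"extra energy: {extra_energy_kwh(300, 0.20, 8 * 30):.1f} kWh")
```

With those inputs, performance-per-watt drops by 12.5% (1.05 / 1.20 = 0.875), and the hypothetical card burns an extra 14.4 kWh a month for that 5%.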

Remember that performance-per-watt doesn't address fan acoustics. Decibels are a logarithmic scale, so a 10 dB(A) increase is a tenfold increase in sound power, which is perceived as roughly twice as loud. A 3 dB(A) increase is roughly double the sound power. So overclocking your GPU and having your fans run at 100% burns more GPU electricity (which is money) as well as more fan electricity (more money), on top of the extra noise.
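A small sketch of the decibel arithmetic above. The "+10 dB sounds about twice as loud" relationship is a common psychoacoustic rule of thumb rather than an exact law, and the helper names are hypothetical.

```python
import math


def power_ratio(delta_db: float) -> float:
    """Sound-power (intensity) ratio for a level difference in decibels."""
    return 10 ** (delta_db / 10)


def loudness_ratio(delta_db: float) -> float:
    """Approximate perceived-loudness ratio, using the rule of thumb
    that every +10 dB sounds roughly twice as loud."""
    return 2 ** (delta_db / 10)


def db_difference(ratio: float) -> float:
    """Level difference in dB for a given sound-power ratio."""
    return 10 * math.log10(ratio)


if __name__ == "__main__":
    for d in (3, 10):
        print(f"+{d} dB: {power_ratio(d):.2f}x sound power, "
              f"~{loudness_ratio(d):.2f}x perceived loudness")
```

So +3 dB roughly doubles the sound power but only sounds about 1.23x louder, while +10 dB is ten times the power and sounds roughly twice as loud.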

(truncated for brevity)

And I wonder who killed SLI???

Well, it certainly wasn't the GPU makers; they obviously had a vested interest in selling more cards. The onus was on individual game developers to make SLI work properly in each title. In some notable cases, SLI actually made performance worse. Properly configuring SLI wasn't a cakewalk for the consumer either.

By 2017, SLI's heyday had passed. Graphics card performance had improved to the point where rasterization could be handled by one card, making SLI unattractive.

Anyway, most people can only afford RTX 3060s; it was the best-selling GPU of the Ampere line-up. That's all nice, but the most popular cards people use right now are the GTX 1060, GTX 1650 and RTX 2060, and those three alone account for over 17% of all gamers.

(truncated for brevity)

You can try to justify that RTX 3090 as much as you want, but it's a dumb card and makes no sense.

It's natural that the entry-level graphics card from two generations ago is the most common one on the Steam Hardware Survey, just as there are more five-year-old Toyota Celicas on the road than brand-new Mercedes-Benz S-Class V12 sedans.

For some people, the price of the S-Class isn't really a significant dent in their disposable income.

Remember that not everyone who buys a 3090 is going to game with it. Video games are made on PCs, and people outside gaming use the card too.

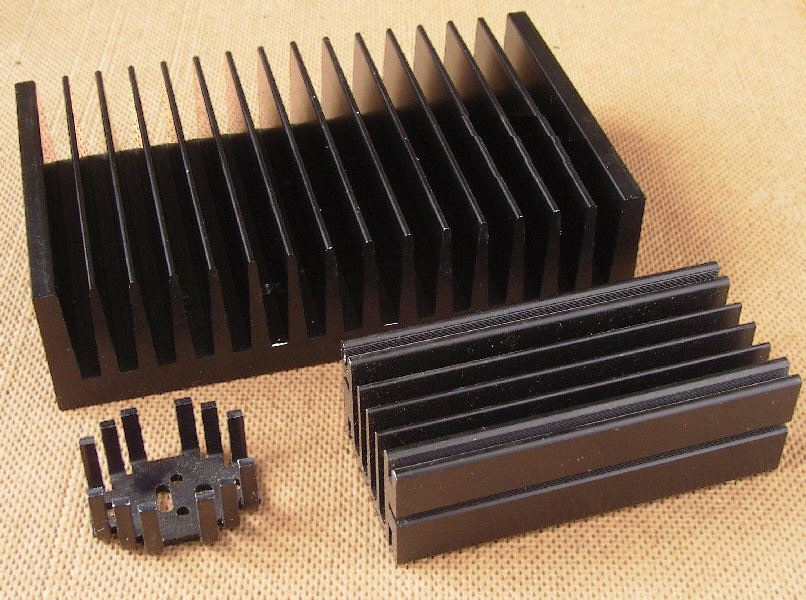

The thread's topic was the dearth of 2-slot cards for the DIY market. As several of us mentioned earlier, the main reason for this is the increased power consumption of today's graphics cards, which requires heftier cooling solutions.

You can get a 2-slot 3090, but it will be liquid-cooled, either as a hybrid with an AIO radiator (and an onboard fan for the VRM and VRAM) or with a waterblock for a custom cooling loop. I had an Alphacool full-length waterblock on a 2070 Super FE in a 2-slot NZXT H210 case. It worked great and ran much quieter than the stock cooler (which was also 2 slots thick). Most of the AIB coolers for the 2070 Super were thicker than 2 slots, and once I got the FE, I understood why. Two slots isn't realistically adequate for a GPU with a >225W TDP.

So yes, one can buy an off-the-shelf high-end 2-slot GPU today, but it'll be super expensive and comes with the caveat that there will be a 240mm AIO radiator dangling off the end. There are also a handful of entry-level GPUs available in 2-slot configurations.

I have one: EVGA GeForce RTX 3050 8GB XC Gaming. It's in a build (the aforementioned NZXT H210) that I don't use for gaming but it works great.

And you basically end up with skimped cooling solutions and hotter-running GPUs, because quietness isn't important, and that's basically what has been happening for over a decade with pro cards. Many of them just plain suck, because they are either loud or run very hot, and since there are no aftermarket coolers, you can't just buy a Zotac or MSI version with an actually decent heatsink either. So basically that efficiency advance only led to smaller heatsinks, not lower temps or less noise.

Again, performance-per-watt is a different way to look at the situation. Apple does this with its iPhones. Those guys are laser-focused on performance-per-watt because most of their business comes from the iPhone, and of their computers, more than 85% of Macs sold are notebook models.

You won't get 4090 benchmark results from a Mac, but their performance-per-watt metrics certainly crush anything in the x86/x64 PC consumer market.