
In a blog post this Monday, AMD demystified the boosting algorithm and thermal management of its new Radeon RX 5700 series "Navi" graphics cards. Custom-design cards from AMD's board partners are only now beginning to appear; for over a month after the 7th July launch, the cards were available only in AMD's reference design. The thermal behavior of these cards spooked many early adopters accustomed to seeing temperatures below 85 °C on competing NVIDIA graphics cards: the Radeon RX 5700 XT posts GPU "hotspot" temperatures well above 100 °C, regularly hitting 110 °C and sometimes even touching 113 °C in stress-testing applications such as FurMark. In its blog post, AMD stated that 110 °C hotspot temperatures under "typical gaming usage" are "expected and within spec."

AMD also elaborated on what constitutes "GPU Hotspot," a.k.a. "junction temperature." Apparently, the "Navi 10" GPU is peppered with an array of temperature sensors spread across the die at different physical locations, and the maximum temperature reported by any of those sensors becomes the Hotspot. In that sense, Hotspot isn't a fixed location on the GPU. Legacy "GPU temperature" measurements on past generations of AMD GPUs relied on a thermal diode at a fixed location on the GPU die that AMD predicted would become the hottest under load. Over the generations, starting with "Polaris" and "Vega," AMD moved toward picking the hottest temperature value from a network of diodes spread across the GPU and reporting it as the Hotspot.
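To make the distinction concrete, here is a minimal Python sketch contrasting the two reporting schemes. The sensor count, the readings, and the function names are hypothetical illustrations, not AMD's firmware or driver interface. The only point it shows is that the legacy value depends on where the single diode happens to sit, while the Hotspot value is simply the maximum across all sensors.

```python
from typing import Sequence


def legacy_gpu_temp(readings: Sequence[float], diode_index: int) -> float:
    """Legacy approach (hypothetical model): report one diode at a fixed, pre-chosen location."""
    return readings[diode_index]


def hotspot_temp(readings: Sequence[float]) -> float:
    """Hotspot approach (hypothetical model): report the hottest of all on-die sensors."""
    if not readings:
        raise ValueError("need at least one sensor reading")
    return max(readings)


# Hypothetical readings (degrees C) from five sensors at different die locations.
readings = [78.0, 91.5, 104.0, 88.0, 96.5]
print(legacy_gpu_temp(readings, diode_index=0))  # 78.0
print(hotspot_temp(readings))  # 104.0
```

If the fixed diode sits away from the hottest region, the legacy reading can understate the true peak by a wide margin, which is one reason Hotspot figures look alarming next to older "GPU temperature" numbers.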

On Hotspot, AMD writes: "Paired with this array of sensors is the ability to identify the 'hotspot' across the GPU die. Instead of setting a conservative, 'worst case' throttling temperature for the entire die, the Radeon RX 5700 series GPUs will continue to opportunistically and aggressively ramp clocks until any one of the many available sensors hits the 'hotspot' or 'Junction' temperature of 110 degrees Celsius. Operating at up to 110C Junction Temperature during typical gaming usage is expected and within spec. This enables the Radeon RX 5700 series GPUs to offer much higher performance and clocks out of the box, while maintaining acoustic and reliability targets."
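The policy AMD describes can be sketched as a simple loop: keep stepping the clock up toward Fmax and stop as soon as the hottest sensor would exceed the 110 °C junction limit. This is a toy under loudly stated assumptions: the linear clock-to-temperature model, the sensor coefficients, the step size, and the clock bookends are all made up, and real boost behavior also weighs power and load, which are not modelled here.

```python
from typing import List, Sequence

JUNCTION_LIMIT_C = 110.0  # junction ("hotspot") limit quoted in AMD's blog post


def sensor_temps(clock_mhz: float, ambient_c: float, coefficients: Sequence[float]) -> List[float]:
    """Hypothetical linear thermal model: each sensor warms in proportion to clock."""
    return [ambient_c + k * clock_mhz for k in coefficients]


def max_boost_clock(
    idle_mhz: float,
    fmax_mhz: float,
    step_mhz: float,
    ambient_c: float,
    coefficients: Sequence[float],
    limit_c: float = JUNCTION_LIMIT_C,
) -> float:
    """Ramp from idle toward Fmax until any sensor would exceed the junction limit."""
    clock = idle_mhz
    while clock < fmax_mhz:
        candidate = min(clock + step_mhz, fmax_mhz)
        # The hottest sensor, wherever it is on the die, decides whether to keep ramping.
        if max(sensor_temps(candidate, ambient_c, coefficients)) > limit_c:
            break
        clock = candidate
    return clock


# Hypothetical die with three sensors of differing thermal sensitivity.
print(max_boost_clock(300, 2000, 30, 40.0, [0.030, 0.035, 0.040]))  # 1740
```

With these made-up numbers, the most sensitive sensor (coefficient 0.040) is the one that caps the clock at 1740 MHz; in a cooler environment the same loop would run all the way to Fmax, which is the "opportunistic" part of AMD's description.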

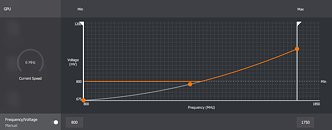

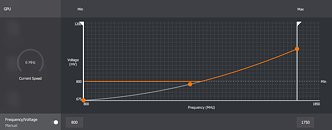

AMD also commented on the significantly increased granularity of clock speeds, which improves the GPU's power management. The company transitioned from fixed DPM states to a highly fine-grained clock-speed management system that takes load, temperatures, and power into account to push out the highest possible clock speeds for each component. "Starting with the AMD Radeon VII, and further optimized and refined with the Radeon RX 5700 series GPUs, AMD has implemented a much more granular 'fine grain DPM' mechanism vs. the fixed, discrete DPM states on previous Radeon RX GPUs. Instead of the small number of fixed DPM states, the Radeon RX 5700 series GPU have hundreds of Vf 'states' between the bookends of the idle clock and the theoretical 'Fmax' frequency defined for each GPU SKU. This more granular and responsive approach to managing GPU Vf states is further paired with a more sophisticated Adaptive Voltage Frequency Scaling (AVFS) architecture on the Radeon RX 5700 series GPUs," the blog post reads.
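The practical difference between a handful of fixed DPM states and hundreds of fine-grained states is how close the GPU can land to whatever clock its current limits allow. The Python sketch below models only frequency (no voltage and no AVFS), and the state tables, the 5 MHz spacing, the bookends, and the allowed clock are hypothetical values chosen for illustration.

```python
from typing import Sequence


def pick_state(states: Sequence[int], allowed_mhz: int) -> int:
    """Return the highest state not exceeding the clock the current limits allow.

    Falls back to the lowest (idle) state if nothing fits.
    """
    eligible = [s for s in states if s <= allowed_mhz]
    return max(eligible) if eligible else min(states)


IDLE_MHZ, FMAX_MHZ = 300, 2000  # hypothetical bookends
discrete_states = [300, 800, 1300, 1600, 1800, 2000]  # hypothetical small set of fixed DPM states
fine_states = list(range(IDLE_MHZ, FMAX_MHZ + 1, 5))  # 341 hypothetical states, 5 MHz apart

allowed = 1777  # hypothetical clock permitted by current load/temperature/power headroom
print(pick_state(discrete_states, allowed))  # 1600
print(pick_state(fine_states, allowed))  # 1775
```

With the coarse table the GPU falls back to 1600 MHz and leaves 177 MHz of headroom unused, while the fine-grained table lands at 1775 MHz.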

View at TechPowerUp Main Site
