Tuesday, August 13th 2019

110°C Hotspot Temps "Expected and Within Spec", AMD on RX 5700-Series Thermals

AMD this Monday in a blog post demystified the boosting algorithm and thermal management of its new Radeon RX 5700 series "Navi" graphics cards. These cards are beginning to be available in custom designs by AMD's board partners, but were only available as reference-design cards for over a month since their 7th July launch. The thermal management of these cards spooked many early adopters accustomed to seeing temperatures below 85 °C on competing NVIDIA graphics cards, with the Radeon RX 5700 XT posting GPU "hotspot" temperatures well above 100 °C, regularly hitting 110 °C, and sometimes even touching 113 °C with stress-testing applications such as Furmark. In its blog post, AMD stated that 110 °C hotspot temperatures under "typical gaming usage" are "expected and within spec."

AMD also elaborated on what constitutes "GPU Hotspot" aka "junction temperature." Apparently, the "Navi 10" GPU is peppered with an array of temperature sensors spread across the die at different physical locations. The maximum temperature reported by any of those sensors becomes the Hotspot. In that sense, Hotspot isn't a fixed location in the GPU. Legacy "GPU temperature" measurements on past generations of AMD GPUs relied on a thermal diode at a fixed location on the GPU die which AMD predicted would become the hottest under load. Over the generations, and starting with "Polaris" and "Vega," AMD leaned toward an approach of picking the hottest temperature value from a network of diodes spread across the GPU, and reporting it as the Hotspot.

On Hotspot, AMD writes: "Paired with this array of sensors is the ability to identify the 'hotspot' across the GPU die. Instead of setting a conservative, 'worst case' throttling temperature for the entire die, the Radeon RX 5700 series GPUs will continue to opportunistically and aggressively ramp clocks until any one of the many available sensors hits the 'hotspot' or 'Junction' temperature of 110 degrees Celsius. Operating at up to 110C Junction Temperature during typical gaming usage is expected and within spec. This enables the Radeon RX 5700 series GPUs to offer much higher performance and clocks out of the box, while maintaining acoustic and reliability targets."
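To make the "hottest sensor wins" idea concrete, here is a minimal Python sketch. It is not AMD's firmware logic: the function names and the sensor readings are made up for illustration, and only the 110 °C junction limit comes from the blog post. It shows that the Hotspot is simply the maximum over the sensor array, so the location it refers to can move between samples.

```python
# Illustrative sketch only: sensor values and function names are hypothetical.
JUNCTION_LIMIT_C = 110.0  # junction limit quoted in AMD's blog post


def hotspot(readings):
    """Return (sensor_index, temperature) of the hottest sensor."""
    index = max(range(len(readings)), key=lambda i: readings[i])
    return index, readings[index]


def may_ramp_clocks(readings, limit=JUNCTION_LIMIT_C):
    """True while every sensor is still below the junction limit."""
    _, temperature = hotspot(readings)
    return temperature < limit


# Two made-up snapshots: the hottest sensor moves from index 1 to index 3.
light_load = [71.0, 98.5, 84.0, 90.0]
heavy_load = [88.0, 104.0, 97.0, 110.0]

print(hotspot(light_load), may_ramp_clocks(light_load))
print(hotspot(heavy_load), may_ramp_clocks(heavy_load))
```

In this toy model, clocks may keep rising for the first snapshot and must stop rising for the second, because one sensor has reached the limit even though the others are cooler.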

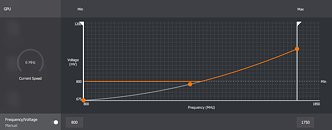

AMD also commented on the significantly increased granularity of clock-speeds that improves the GPU's power-management. The company transitioned from fixed DPM states to a highly fine-grained clock-speed management system that takes into account load, temperatures, and power to push out the highest possible clock-speeds for each component. "Starting with the AMD Radeon VII, and further optimized and refined with the Radeon RX 5700 series GPUs, AMD has implemented a much more granular 'fine grain DPM' mechanism vs. the fixed, discrete DPM states on previous Radeon RX GPUs. Instead of the small number of fixed DPM states, the Radeon RX 5700 series GPU have hundreds of Vf 'states' between the bookends of the idle clock and the theoretical 'Fmax' frequency defined for each GPU SKU. This more granular and responsive approach to managing GPU Vf states is further paired with a more sophisticated Adaptive Voltage Frequency Scaling (AVFS) architecture on the Radeon RX 5700 series GPUs," the blog post reads.
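The benefit of finer granularity can be sketched with a toy comparison in Python. Everything numeric here is an assumption for illustration: the 300 MHz idle clock, the 2000 MHz "Fmax," the state counts, and the even spacing are not AMD figures, and the sketch models frequency only, whereas real Vf states pair a voltage with each frequency.

```python
# Toy model: all clock values and state counts below are assumed, not AMD data.


def make_states(idle_mhz, fmax_mhz, count):
    """Evenly spaced frequency states from the idle clock up to Fmax."""
    step = (fmax_mhz - idle_mhz) / (count - 1)
    return [idle_mhz + i * step for i in range(count)]


def highest_state_at_or_below(states, target_mhz):
    """Pick the fastest state that does not exceed the sustainable clock."""
    candidates = [s for s in states if s <= target_mhz]
    return candidates[-1] if candidates else states[0]


coarse = make_states(300, 2000, 8)    # a handful of fixed DPM states
fine = make_states(300, 2000, 341)    # hundreds of states, 5 MHz apart

sustainable_mhz = 1750  # hypothetical clock allowed by power and thermals
print(highest_state_at_or_below(coarse, sustainable_mhz))
print(highest_state_at_or_below(fine, sustainable_mhz))
```

With only eight states, the selected clock falls well short of the sustainable value, while the fine-grained table lands on it, which is the effect the blog post describes.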

Source:

AMD

159 Comments on 110°C Hotspot Temps "Expected and Within Spec", AMD on RX 5700-Series Thermals

Without knowing where the Temp sensor on nVidia GPUs is located, there really is no valid comparison.

The edge temp on AMD GPUs, aka the "GPU" readout, is much closer to what you would typically expect.

Edit: It is not a single TJunction sensor; the TJunction / Hotspot readout is just the highest reading out of many different sensors spread across the die.

In the case of Radeon VII there are 64 of them. It is not necessarily the same area of the GPU die that is getting hot all the time.

Don't tell me that an Nvidia GPU or Intel CPU doesn't have a hotspot either. If hardware tools were able to capture the data from those sensors, we could see in real time which part of the GPU is getting hotter, and improve thermals by, for example, reworking the thermal paste between the cooler and the chip.

The reference cards are not actually overheating / throttling.

That reason being? As long as the GPU chips are consuming similar power, they are putting out a similar amount of heat energy.

The cooler / thermal transfer is all there is to it.

www.techpowerup.com/review/galax-geforce-rtx-2060-super-ex/30.html

5700 XT uses more power than 2070 Super in gaming on average, while performing worse. 5700 XT is slower, hotter and louder.

The VRM efficiency etc. affects the cooling, not the GPU die itself.

Why would you want to run Furmark on any card except to heat it up?

FYI, even if you put a waterblock on a stock GPU, it is still putting out a similar amount of heat despite running up to 40C cooler.

First we had the stupid articles about 1.5V on Ryzen CPUs being out of spec, blablabla. Do you all think AMD has hired monkeys to make chips?

Please stop being smart asses and play the fuc**** games you bought these cpus and gpus for.

Boost clocks are similar technology to the Ryzen CPUs. The currents (power limit), temperatures (thermals) and all that are constantly monitored. Undervolting is not needed; however, because of the chip-to-chip variation in production that was seen in the Vega series, an undervolt could help sustain base / boost clocks better than the stock settings do.

Give me one reason why anyone needs a 12-phase VRM for their CPU or GPU. The thing is, you won't find a real-world situation where you need that 12-phase VRM. Even if you LN2 it, it's still sufficient (even without heatsinks, too) to deliver the power the GPU or CPU needs. Sick and tired of those news posts.

It would be cool tho, @Wizzard, to have software that is able to read out all those tiny sensors as well, preferably with a location on the chip, so we could see in real time what part of the chip is simply hottest. Don't y'all think?

If anything you should compare it to the RTX2060 Super (like in your link...was the 2070 a typo?) and then the 5700XT is overall the better option.

- Radeon VII hotspot was fixed with some added mounting pressure, or at least, substantially improved upon

- Not a GPU gen goes by without launch (quality control) problems, be it from a bad batch or small design errors that get fixed through software (Micron VRAM, 2080ti space invaders, bad fan idle profiles, gpu power modes not working correctly, drawing too much power over the PCIe slot, etc etc.)

- AMD is known for several releases with above average temperature-related long term fail rates

As long as companies are not continuously delivering perfect releases, we have reason to question everything out of the ordinary, and 110C on the die is a pretty high temp for silicon and the components around it aren't a fan of it either. It will definitely not improve the longevity of this chip, over, say, a random Nvidia chip doing 80C all the time. You can twist and turn that however you like but we are talking about the same materials doing the same sort of work. And physics don't listen to marketing.

Seriously people.

"- AMD is known for several releases with above average temperature-related long term fail rates."

I do not really agree. As long as the product is working within spec and no failure occurs, or it at least survives its warranty period, what is wrong with that? It's not like you're going to use your video card for longer than 3 years. You could always tweak the card to have lower temps. I simply slap on an AIO watercooler and call it a day. GPU hardware is designed to run 'hot'. Haven't you seen the small heatsinks that they are applying to the FirePro series? Those are single-slot coolers with small fans of the kind you would see in laptops and such.

Seriously, people. What the hell are you saying. AMD damage control squad in full effect here, and it's preposterous as usual.

This 110C is just as 'in spec' as Intel's K CPUs doing 100 C if you look at them funny. Hot chips are never a great thing.

No, it's not hard; that is why some reviews contain FLIR cam shots, and temps above 100 C are not unheard of, but right on the die is quite a surprise. We've also seen multiple examples over time where hot cards would have much higher return/fail rates. Heat does radiate out, and not just through the heatsink; VRAM, for example, really is not a big fan of high temps.

Keep in mind the definition of 'in spec' is subject to change, and as performance gets harder to extract, goal posts are going to be moved. And it won't benefit longevity, ever. The headroom we used to have is now used out of the box, for example.

A thermal camera is measuring the surface temperature of the back of the die.

The working transistors are actually on the side that is bonded to the substrate facing the PCB. You are assuming the Hot Spot is just the middle of the die close to the visible back side.

In reality the chip has thickness, and even grinding it down by a fraction of a millimeter (0.2 mm) can drop the temperature by a few (5) degrees.

You think that posting here that you like another forum better is not trolling?

As for your fancy heat story, VRMs are designed to withstand a 110 degree operating temperature. It's not really the VRMs that suffer but more things like the capacitors sitting right next to them. They have an estimated lifespan based on thermals. The hotter they run, the shorter their MTBF basically is. I wouldn't recommend playing on a card with a 100 degree VRM where GDDR chips are right next to it either, but it works, and there are cards that last many, many years before giving their last frame ever.

It just becomes more and more difficult to cool a small die area. It's why Intel and AMD are using an IHS: not just to protect the die from being crushed by an overtightened heatsink or waterblock, but to distribute the heat more evenly. That's why all the GPUs these days come with a copper baseplate that extracts heat from the chip faster than a material like aluminium does. AMD is able to release a stock video card with a great cooler, but what's the purpose of that if the chip is designed to run in the 80 degree mark? The fan ramps up anyway if that is the case. And you can set that up in driver settings as well. Big deal.

So how many people are still running 7970s / R9 2xx cards around here, which are 6-8 years old?

I'm staying far away, regardless.

My GTX 1080 is now running into 3 years post-release and I can easily see myself getting another year out of it. And after that, I will probably sell it for close to 100-150 EUR because it still works perfectly fine. If you buy high end cards, 3 years is short and a great moment to start thinking about an upgrade WITH a profitable sale of the old GPU.

You can compare the resale value of Nvidia vs AMD cards over the last five to seven years and you'll understand my point. It's almost an Apple vs Android comparison: AMD cards lose value much faster, and this is the reason they do. It's too easy to chalk that up to 'branding' alone.