
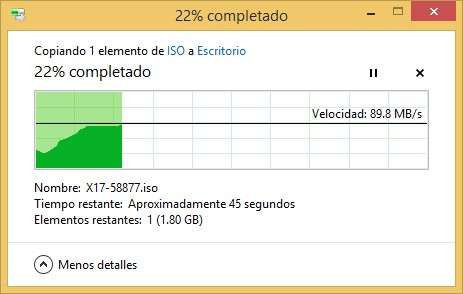

So I've been given the task of overhauling the office "server" (an IOMEGA Home Media case) after its HDD failed. Thankfully I had an "unofficial" backup and managed to avert disaster.

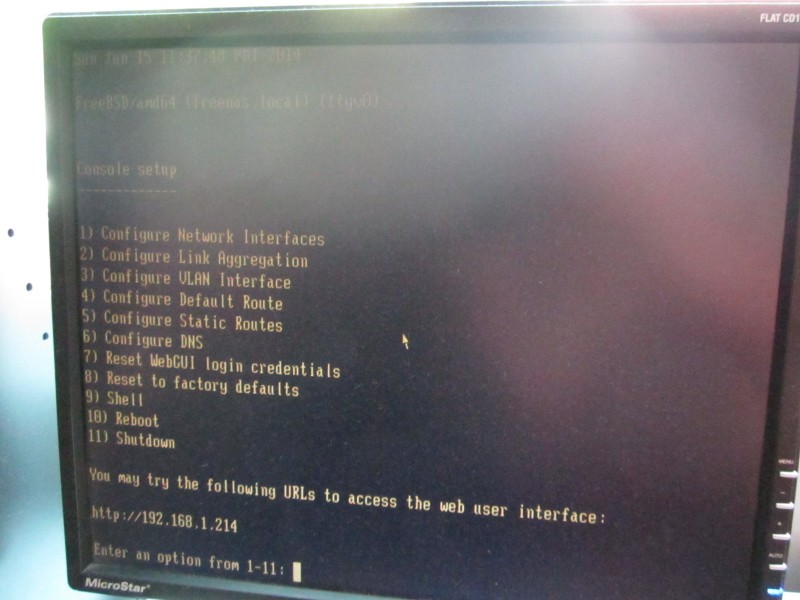

So they said, "here are 10,000 pesos, get me a server" and I thought, OK, FreeNAS it is...

Target size is 2TB. We had 360GB of data on the "server" after 5 years of operation so 2TB should last a lifetime.

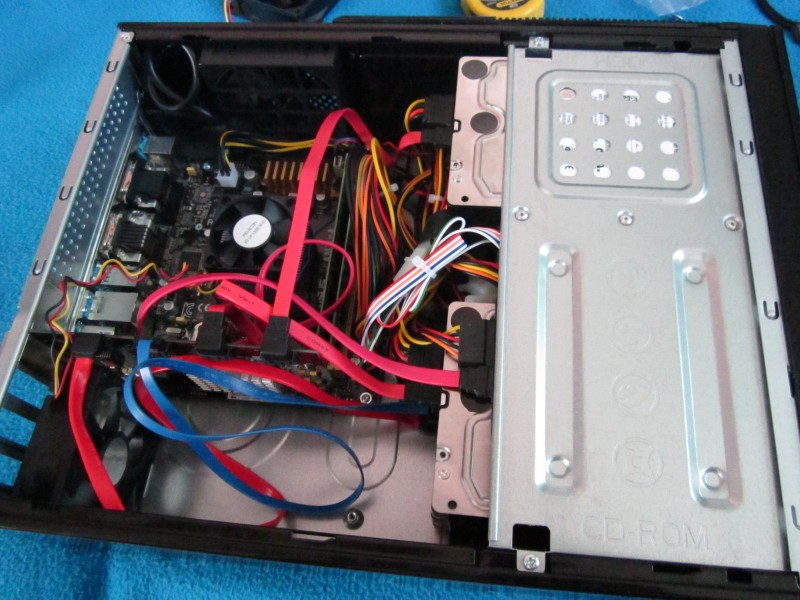

Parts list:

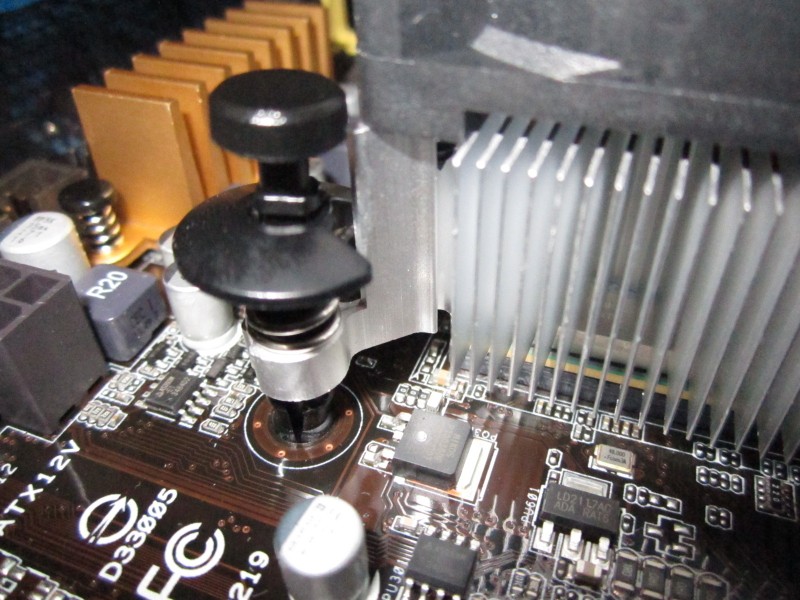

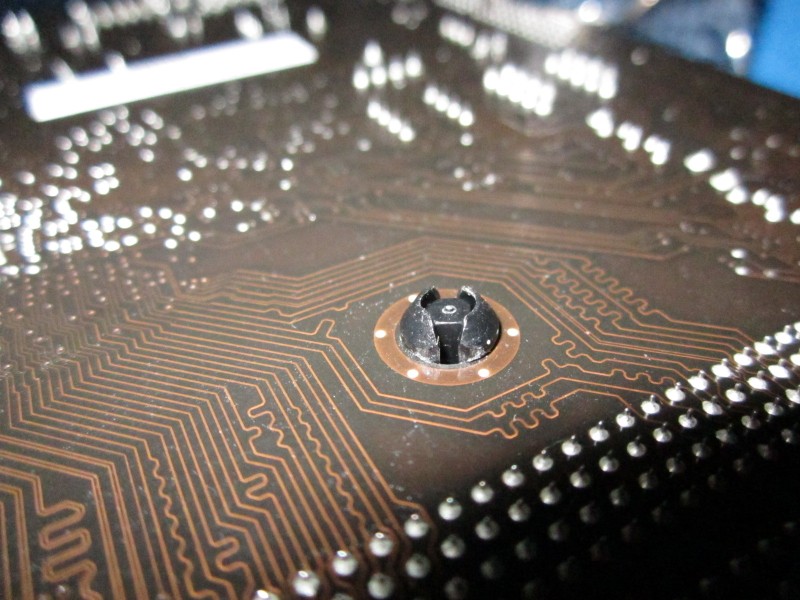

ASUS AM1I-A mITX

Athlon 5350

2x8GB DDR3-1333

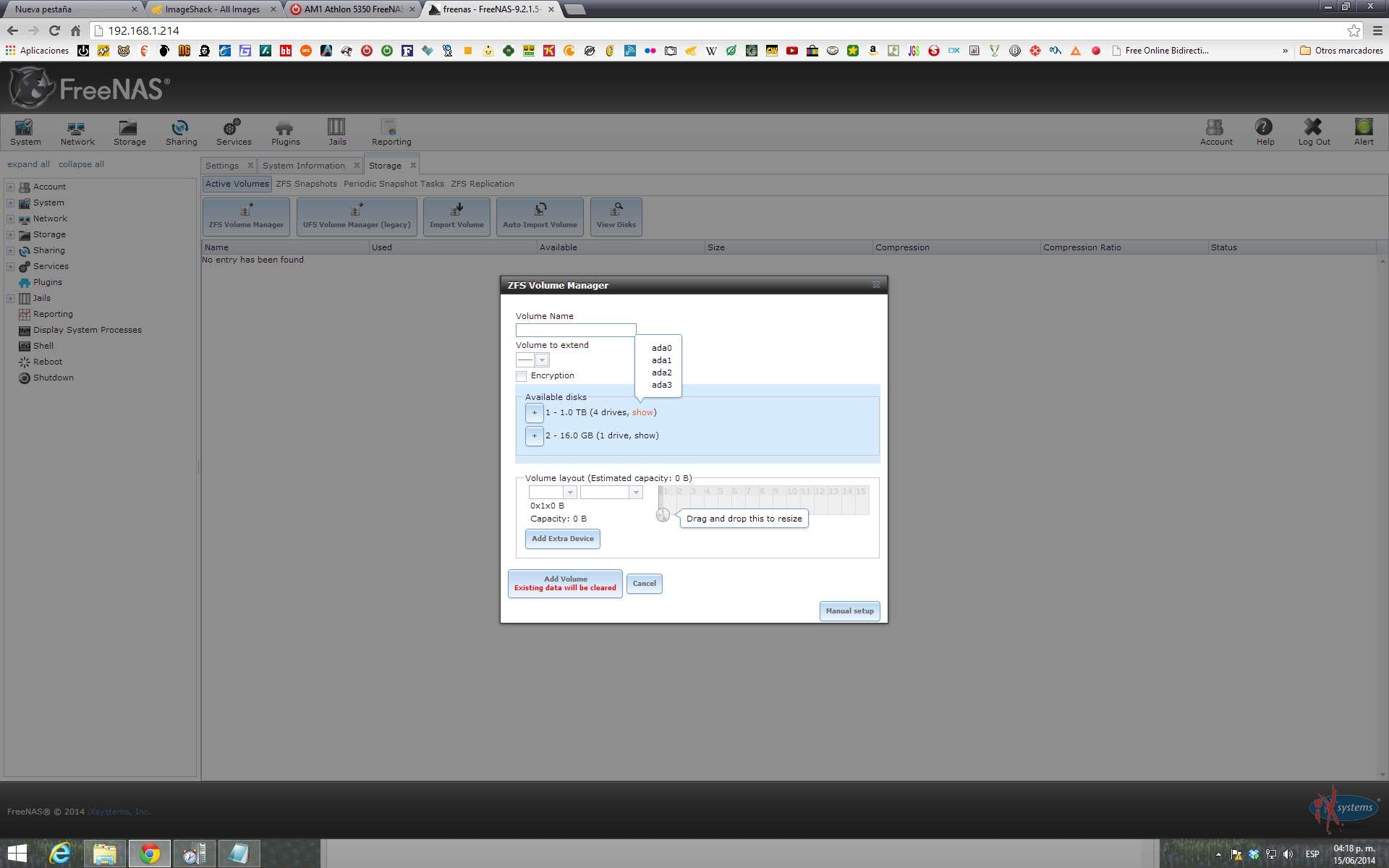

5 x 1TB Black WD drives (4 for RAIDZ2, 1 spare)

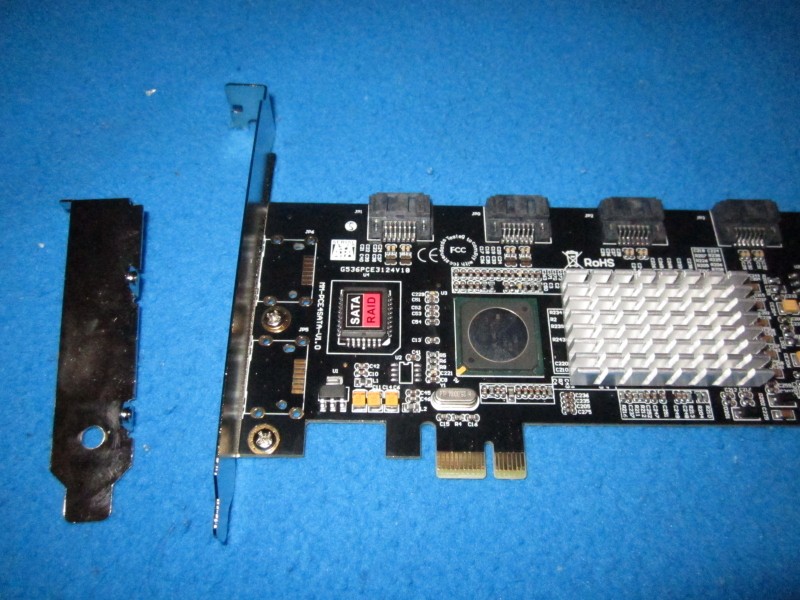

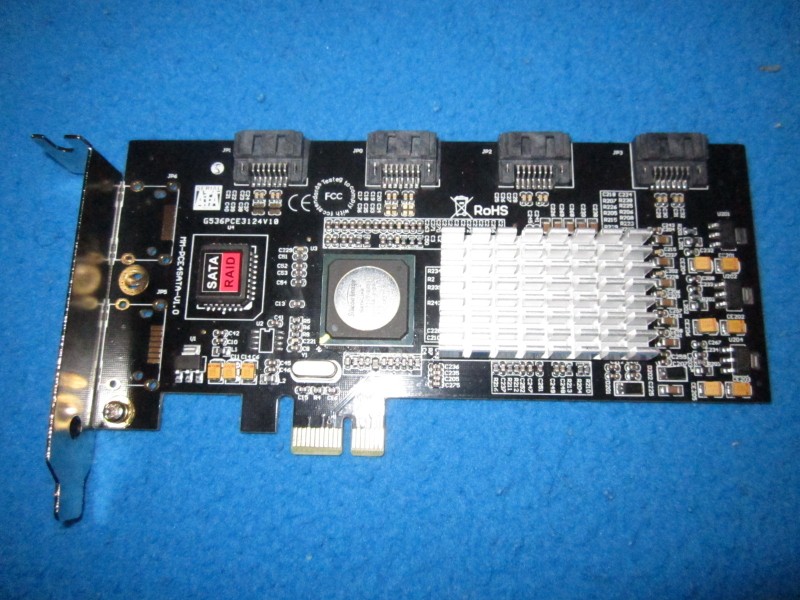

Syba 4 port SATA card (SIL3124)

Samsung 2.5" 16GB MLC SSD (ZIL drive)

Sparkle FSP-400GHS 400w SFX PSU 80+ bronze

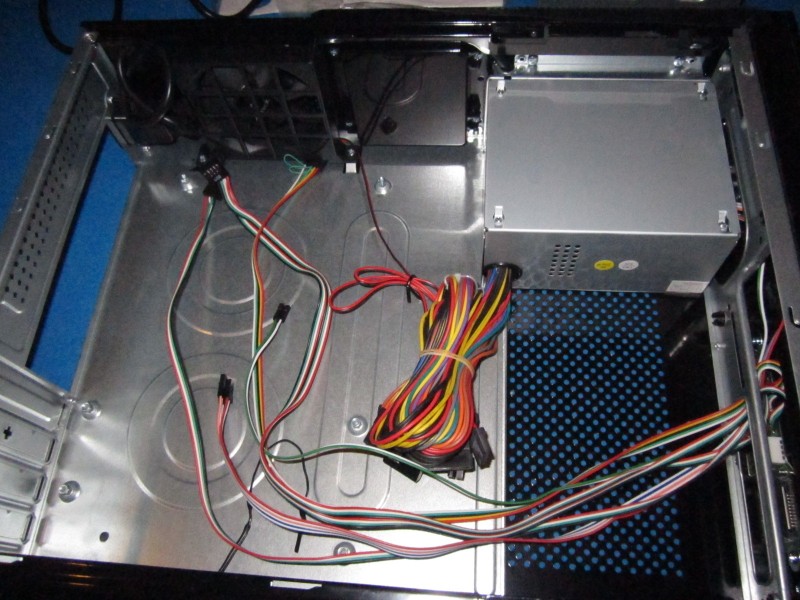

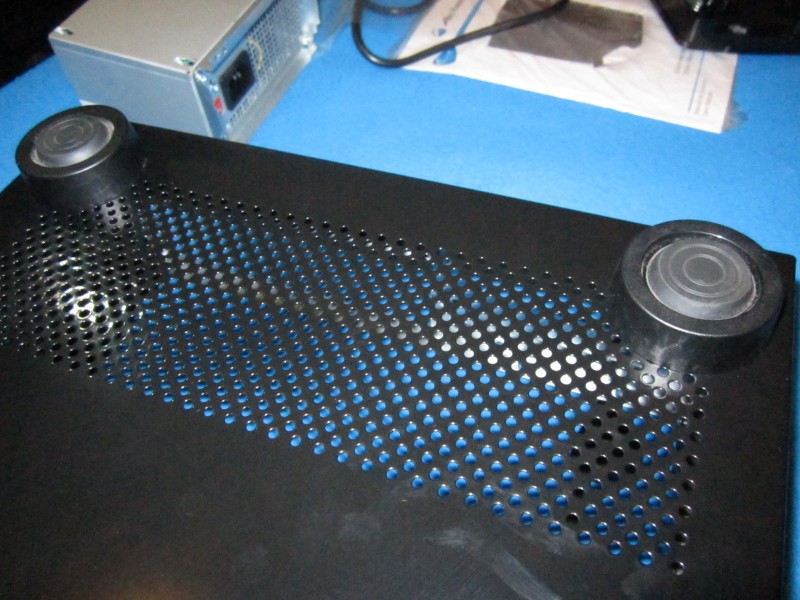

Acteck BERN mATX low profile case.
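For anyone checking the sizing, here is a rough back-of-the-envelope sketch in Python. It assumes usable RAIDZ2 space is simply (drives - 2) x drive size, ignores ZFS metadata overhead and the TB vs TiB difference, and assumes data keeps growing at the same linear rate as the last 5 years. The function names are made up for illustration, so treat the numbers as ballpark only.

```python
# Rough sizing sketch. Assumptions (not measured): usable RAIDZ2 space is
# (drives - 2) * drive size, with no allowance for ZFS overhead, and data
# growth stays linear at the historical rate.

def raidz2_usable_tb(drives: int, drive_tb: float) -> float:
    """Approximate usable space of one RAIDZ2 vdev (two drives' worth of parity)."""
    if drives < 3:
        raise ValueError("this sketch expects at least 3 drives for RAIDZ2")
    return (drives - 2) * drive_tb


def years_until_full(usable_gb: float, used_gb: float, growth_gb_per_year: float) -> float:
    """Years of headroom left if growth stays constant."""
    return (usable_gb - used_gb) / growth_gb_per_year


usable_tb = raidz2_usable_tb(drives=4, drive_tb=1.0)   # 4 x 1TB in RAIDZ2
growth_per_year = 360 / 5                              # 360GB over 5 years
headroom_years = years_until_full(usable_tb * 1000, 360, growth_per_year)

print(f"Usable space: ~{usable_tb:.1f} TB")
print(f"Growth rate: ~{growth_per_year:.0f} GB/year")
print(f"Headroom at that rate: ~{headroom_years:.1f} years")
```

So 4 drives in RAIDZ2 lands right on the 2TB target, and at the old growth rate that leaves a couple of decades of room, assuming usage doesn't suddenly change.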

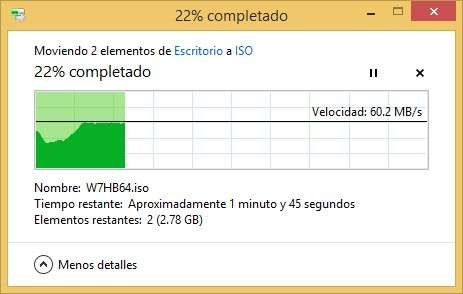

I got a few parts today:

I'll keep you posted


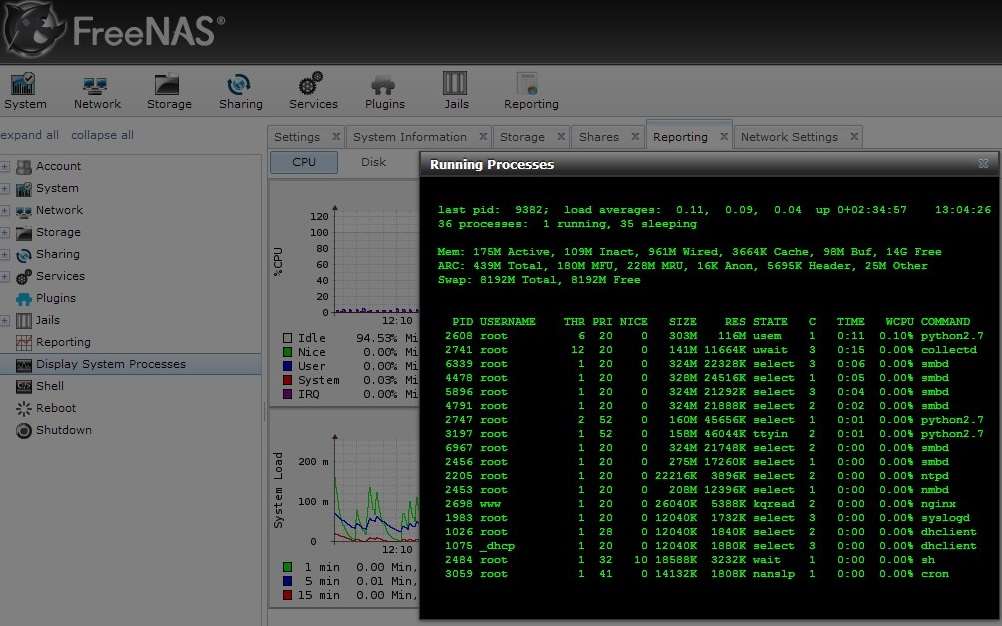

I think I won't need the SSD in there after all.
