To the surprise of many, Microsoft last week rolled out a patch for Windows (KB2592546) that it claimed would improve the performance of systems running AMD processors based on the "Bulldozer" architecture. The patch works by making the OS aware of how Bulldozer cores are structured, so that it can make effective use of the parallelism at its disposal. Sadly, Microsoft pulled the patch a couple of days later. In the meantime, SweClockers had enough time to run a "before and after" performance test of the AMD FX-8150 processor with the patch installed.
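To illustrate why core topology matters to the scheduler, here is a hypothetical toy model. It is not Microsoft's implementation, and the function names are made up. It assumes that the FX-8150's eight integer cores are numbered so that cores 2m and 2m+1 share module m (front-end, FPU and L2 cache). It compares filling cores in numeric order with placing one thread per module before doubling up on any module.

```python
# Hypothetical model: 4 Bulldozer modules x 2 integer cores each (FX-8150).
# Assumed numbering: cores 2m and 2m+1 share module m's front-end, FPU and L2.
CORES_PER_MODULE = 2
MODULES = 4


def module_of(core: int) -> int:
    """Return the module index of a core under the assumed numbering."""
    return core // CORES_PER_MODULE


def naive_placement(n_threads: int) -> list[int]:
    """Fill cores in numeric order, which puts two threads on one module early."""
    return list(range(min(n_threads, MODULES * CORES_PER_MODULE)))


def module_aware_placement(n_threads: int) -> list[int]:
    """Give every module one thread before using any module's second core."""
    order = [m * CORES_PER_MODULE + slot
             for slot in range(CORES_PER_MODULE)
             for m in range(MODULES)]
    return order[:n_threads]


def modules_shared(cores: list[int]) -> int:
    """Count modules running more than one thread (contending for shared units)."""
    used = [module_of(c) for c in cores]
    return sum(1 for m in set(used) if used.count(m) > 1)


if __name__ == "__main__":
    for n in (2, 4, 8):
        naive = naive_placement(n)
        aware = module_aware_placement(n)
        print(f"{n} threads: naive {naive} shares {modules_shared(naive)} modules; "
              f"module-aware {aware} shares {modules_shared(aware)}")
```

Spreading threads across modules avoids contention for the shared units, but it is not automatically a win. Packing threads onto fewer modules lets the idle modules power down, which can leave more headroom for Turbo Core.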

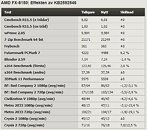

The results of SweClockers' tests are tabulated below: "tidigare" means before, "nytt" means after, and "skillnad" means change. The reviewer put the chip through a wide range of tests, including synthetic CPU-intensive benchmarks (both single- and multi-threaded) and real-world gaming tests. The results are less than impressive, which is perhaps why the patch was pulled.
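As a reading aid, here is a small sketch of how a "skillnad" figure could be computed from a "tidigare" and a "nytt" score. It assumes a simple relative change against the "before" result. SweClockers' exact formula is not stated, so treat this as an assumption.

```python
def skillnad(tidigare: float, nytt: float, higher_is_better: bool = True) -> float:
    """Percentage change from the 'before' score to the 'after' score.

    Assumed formula: (after - before) / before * 100. For metrics where lower
    is better (e.g. render time), the sign is flipped so that a positive
    result always means the patch helped.
    """
    if tidigare == 0:
        raise ValueError("baseline (tidigare) score must be non-zero")
    change = (nytt - tidigare) / tidigare * 100.0
    return change if higher_is_better else -change


if __name__ == "__main__":
    # Hypothetical scores, not taken from the SweClockers review.
    print(round(skillnad(100.0, 102.0), 2))
    print(round(skillnad(50.0, 49.0, higher_is_better=False), 2))
```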

View at TechPowerUp Main Site

Just not up to our wishful thinking!