
AMD Radeon RX 6800 XT

- Thread starter W1zzard

- Start date

- Joined

- Apr 18, 2013

- Messages

- 1,260 (0.31/day)

- Location

- Artem S. Tashkinov

Of course it's standard, never said it wasn't. It's being implemented to nVidia standards right now on PC though. I personally just expect an RT on/off toggle in the future with "special" extra effects for nVidia.

Even if it's called "RTX" in games, in reality it's D3D12 DXR. There's no such thing as "nVidia standards right now on PC though". It's like saying that there are two different DirectXes for AMD and NVIDIA.

Has anyone realized that the 6800XT OCs MORE THAN TWICE AS WELL as the 3080? WTF, really. I can't even recall a single AMD card in a decade (maybe more?) that was able to OC better by even a 0.1% margin, not to speak of more than double.

5600xt will also clock about 25-30% over reference if you have the right bios

Let us see what the AIBs can do with the 6800 XT for a start. I'm convinced we'll see AIB cards running at least 10%, and maybe even 20%, faster clocks than this reference version. How exactly that will translate into real-world performance, I guess we'll have to wait a week until the cards are out.

In Igor's review, he also thinks the vanilla 6800 will easily do 2.5 GHz out of the box.

RTRT will be implemented in most triple-A titles from now on. AMD fans love to boast about how AMD GPUs are future-proof, only this time around they are anything but.

It's highly unlikely that "fine wine" will allow AMD's RTRT performance to increase substantially, given the current performance difference.

Sorry, is this a fact? Can you quote it from somewhere/someone?

Who cares about wattage, as long as it can be cooled with acceptable noise? Anyone who buys a 1k USD GPU doesn't care about 50W more on the electricity bill.

Read back through the Vega reviews and you will see how many green-eyed people cared about it back then.

5600xt will also clock about 25-30% over reference if you have the right bios.

Yeah, but that was a totally different story.

- Joined

- May 14, 2004

- Messages

- 27,055 (3.71/day)

| Processor | Ryzen 7 5700X |
|---|---|
| Memory | 48 GB |
| Video Card(s) | RTX 4080 |
| Storage | 2x HDD RAID 1, 3x M.2 NVMe |
| Display(s) | 30" 2560x1600 + 19" 1280x1024 |
| Software | Windows 10 64-bit |

@W1zzard, would you happen to know why the average power consumption for the new AMD cards is much lower than the average other (also reputable) sites are reporting?

Because Metro Last Light isn't a permanent 100% load. It is very dynamic, with scenes that don't always use the GPU at 100%. This gives clever GPU algorithms a chance to make a difference. I found this a much more realistic test than just Furmark or standing still in Battlefield V at highest settings.

As a 5900X owner, I found this article to be lackluster as I wanted to see AMD 5000 series CPUs used on the testbed, not an old Intel CPU. I will have to go elsewhere for my benchmark results. But I am extremely pleased to see the AMD Radeon RX 6800 XT perform as well as it did and I expect it will only get better over time.


Because Metro Last Light isn't a permanent 100% load. It is very dynamic, with scenes that don't always use the GPU at 100%. This gives clever GPU algorithms a chance to make a difference. I found this a much more realistic test than just Furmark or standing still in Battlefield V at highest settings.

Thanks for the prompt reply. You already replied before I even modified my post haha.

- Joined

- Jul 8, 2019

- Messages

- 169 (0.10/day)

As a 5900X owner, I found this article to be lackluster as I wanted to see AMD 5000 series CPUs used on the testbed, not an old Intel CPU. I will have to go elsewhere for my benchmark results.

Intels are still slightly better in gaming at the current moment, deal with it.

- Joined

- May 14, 2004

- Messages

- 27,055 (3.71/day)

| Processor | Ryzen 7 5700X |
|---|---|
| Memory | 48 GB |
| Video Card(s) | RTX 4080 |
| Storage | 2x HDD RAID 1, 3x M.2 NVMe |
| Display(s) | 30" 2560x1600 + 19" 1280x1024 |
| Software | Windows 10 64-bit |

As a 5900X owner, I found this article to be lackluster as I wanted to see AMD 5000 series CPUs used on the testbed, not an old Intel CPU. I will have to go elsewhere for my benchmark results.

I have this article in the works. I already have 200 results for it, with just 60 or so more test runs to go.

- Joined

- Aug 12, 2020

- Messages

- 1,147 (0.84/day)

Would easily take it over 3080 at same price, similar/slightly lower rasterization, don't care about RT (esp. if it looks like WD:Legion LMAO), and lower power consumption to more than make up for it for me. If there are no weird consumption spikes like on RTX 3080 it means you actually can save a little going for slightly less wattage on a PSU.


TLDR:

Overall: kudos to RTG marketing machine which never fails to overhype and underdeliver. In terms of being future-proof people should probably wait for RDNA 3.0 or buy ... NVIDIA. Even 3-5 years from now you'll be able to play the most demanding titles thanks to DLSS just by lowering resolution.

- Truly stellar rasterization performance per watt thanks to the 7nm node.

- Finally solved multi-monitor idle power consumption!!

- Quite poor RTRT performance (I expected something close to RTX 2080 Ti, nope, far from it).

- No tensor cores (they are not just for DLSS but various handy AI features like background noise removal, background image removal and others).

- Horrible H.264 hardware encoder.

- Almost no attempt at creating decent competition. I remember AMD used to fiercely compete with NVIDIA and Intel. AMD 2020: profits and brand value (fueled by Ryzen 5000) first.

This kind of complete crap on a site with a review that says the opposite is why forums are a waste of time. Laughable.

Intels are still slightly better in gaming at the current moment, deal with it.

I suggest you stop lying. Lying is bad.

Or check ANY written/video reviews around.

- Joined

- Apr 1, 2017

- Messages

- 420 (0.16/day)

| System Name | The Cum Blaster |
|---|---|
| Processor | R9 5900x |
| Motherboard | Gigabyte X470 Aorus Gaming 7 Wifi |
| Cooling | Alphacool Eisbaer LT360 |
| Memory | 4x8GB Crucial Ballistix @ 3800C16 |
| Video Card(s) | 7900 XTX Nitro+ |
| Storage | Lots |
| Display(s) | 4k60hz, 4k144hz |
| Case | Obsidian 750D Airflow Edition |
| Power Supply | EVGA SuperNOVA G3 750W |

Not supported by our charting engine

then add support for it

surely displaying a second bar of a different color can't be that hard to write some code for

- Joined

- Jul 8, 2019

- Messages

- 169 (0.10/day)

Haven't you seen that this is with better memory? Compare stock AMD vs. stock Intel, smoothbrain xD

- Joined

- Apr 21, 2010

- Messages

- 562 (0.11/day)

| System Name | Home PC |
|---|---|
| Processor | Ryzen 5900X |
| Motherboard | Asus Prime X370 Pro |
| Cooling | Thermaltake Contac Silent 12 |
| Memory | 2x8gb F4-3200C16-8GVKB - 2x16gb F4-3200C16-16GVK |
| Video Card(s) | XFX RX480 GTR |
| Storage | Samsung SSD Evo 120GB -WD SN580 1TB - Toshiba 2TB HDWT720 - 1TB GIGABYTE GP-GSTFS31100TNTD |
| Display(s) | Cooler Master GA271 and AoC 931wx (19in, 1680x1050) |
| Case | Green Magnum Evo |
| Power Supply | Green 650UK Plus |
| Mouse | Green GM602-RGB ( copy of Aula F810 ) |
| Keyboard | Old 12 years FOCUS FK-8100 |

Thing is, nvidia cards are pushed to the max and with a decent undervolt you can quickly get 50W less with the same performance.

Well, true, but AMD can do that too. Looking at the performance-per-watt chart, the difference between the RTX 3070 (best of Nvidia) and the RX 6800 (best of AMD) is large: 69% vs. 100%. So undervolting will not help them.

- Joined

- Jan 8, 2017

- Messages

- 8,962 (3.36/day)

| System Name | Good enough |
|---|---|
| Processor | AMD Ryzen R9 7900 - Alphacool Eisblock XPX Aurora Edge |
| Motherboard | ASRock B650 Pro RS |
| Cooling | 2x 360mm NexXxoS ST30 X-Flow, 1x 360mm NexXxoS ST30, 1x 240mm NexXxoS ST30 |
| Memory | 32GB - FURY Beast RGB 5600 Mhz |
| Video Card(s) | Sapphire RX 7900 XT - Alphacool Eisblock Aurora |
| Storage | 1x Kingston KC3000 1TB 1x Kingston A2000 1TB, 1x Samsung 850 EVO 250GB , 1x Samsung 860 EVO 500GB |
| Display(s) | LG UltraGear 32GN650-B + 4K Samsung TV |
| Case | Phanteks NV7 |
| Power Supply | GPS-750C |

Who cares about wattage, as long as it can be cooled with acceptable noise? Anyone who buys a 1k USD GPU doesn't care about 50W more on the electricity bill.

6800XT actually has overclocking headroom. That's why 50W, more like a 100W really, matter.

Nice try though.

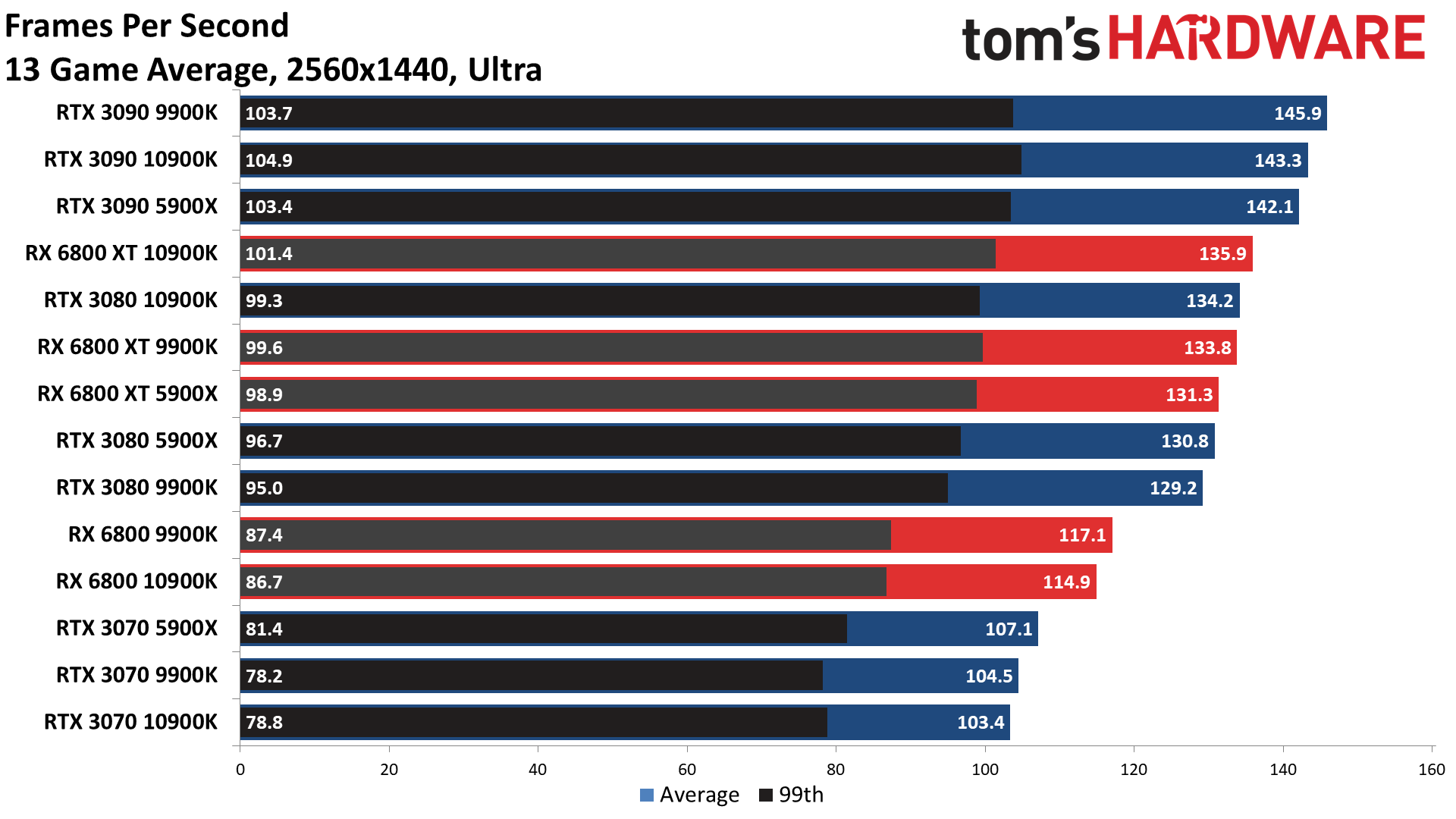

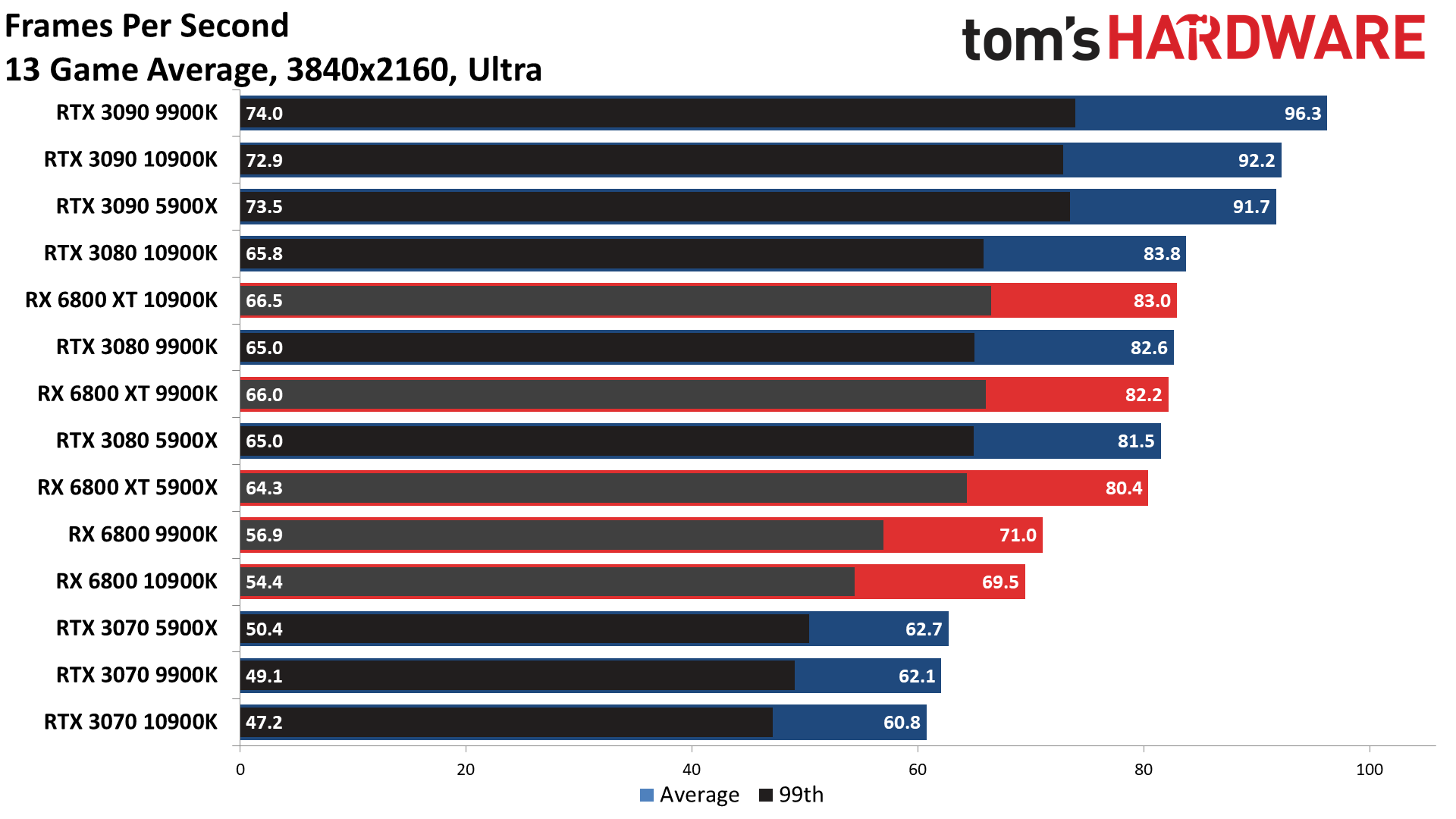

I suggest you stop lying. Lying is bad.

Or check ANY written/video reviews around.

There is no difference in 4K gaming between those CPUs. Why do you post 1080p results? Are you going to use a Ryzen 5900 for 1080p gaming?

For your sake I hope not

- Joined

- Apr 6, 2015

- Messages

- 246 (0.07/day)

- Location

- Japan

| System Name | ChronicleScienceWorkStation |
|---|---|
| Processor | AMD Threadripper 1950X |
| Motherboard | Asrock X399 Taichi |
| Cooling | Noctua U14S-TR4 |
| Memory | G.Skill DDR4 3200 C14 16GB*4 |
| Video Card(s) | AMD Radeon VII |
| Storage | Samsung 970 Pro*1, Kingston A2000 1TB*2 RAID 0, HGST 8TB*5 RAID 6 |
| Case | Lian Li PC-A75X |
| Power Supply | Corsair AX1600i |
| Software | Proxmox 6.2 |

Has anyone realized that the 6800XT OCs MORE THAN TWICE AS WELL as the 3080? WTF, really. I can't even recall a single AMD card in a decade (maybe more?) that was able to OC better by even a 0.1% margin, not to speak of more than double.

Not to argue with you, but if you water cool a Vega 64, it overclocks pretty well.

I got mine sustaining 1700 MHz, while stock cooler will average out 1400 - 1500 MHz only.

Certainly, I believe you meant without water cooling.

- Joined

- Apr 18, 2013

- Messages

- 1,260 (0.31/day)

- Location

- Artem S. Tashkinov

As a 5900X owner, I found this article to be lackluster as I wanted to see AMD 5000 series CPUs used on the testbed, not an old Intel CPU. I will have to go elsewhere for my benchmark results.

Fanboyism at its finest. There's almost zero difference between 10900K and 5950X at resolutions above 1080p and 10900K is as fast or faster than 5950X at 1080p.

Haven't you seen that this is with better memory? Compare stock AMD vs. stock Intel, smoothbrain xD

Better memory? It's better memory WITH Intel and better memory WITH AMD.

And, as I said, check EVERY review available online. Written or in video format. Every review says AMD beats Intel in gaming. I understand you can't stand this situation, but this is the fact.

There is no difference in 4K gaming between those CPUs. Why do you post 1080p results? Are you going to use a Ryzen 5900 for 1080p gaming? For your sake I hope not

Ok, so now, when AMD is finally able to beat Intel in gaming, 1080p results are irrelevant...

That is truly ingenious! You know, the only resolution at which a reviewer is able to distinguish CPUs from one another is FHD (or even lower). That was the case with Ryzen 2000 series vs. Coffee Lake, Ryzen 3000 series vs. Comet Lake, and this is the case with Ryzen 5000 series vs. Comet Lake. Only a flagship GPU at FHD brings out the difference between CPUs: a flagship GPU at 1440p or 4K, or a non-flagship GPU at FHD, gives the same performance on every CPU (at least considering several generations).
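To make the bottleneck argument concrete, here is a rough sketch in Python. It assumes a deliberately simplified model in which the delivered frame rate is just the lower of what the CPU and the GPU can each sustain. All numbers and names (`cpu_caps`, `gpu_caps`, `cpu_a`, `cpu_b`) are hypothetical and chosen only for illustration; they are not taken from any review.

```python
def delivered_fps(cpu_fps_cap: float, gpu_fps_cap: float) -> float:
    """Simplified model: the slower component sets the frame rate."""
    return min(cpu_fps_cap, gpu_fps_cap)


# Hypothetical frame-rate ceilings, NOT measured data.
cpu_caps = {"cpu_a": 200.0, "cpu_b": 180.0}               # CPU-side limit
gpu_caps = {"1080p": 250.0, "1440p": 170.0, "4k": 95.0}   # GPU-side limit


def cpu_gap_percent(resolution: str) -> float:
    """Lead of cpu_a over cpu_b, in percent, at the given resolution."""
    fps_a = delivered_fps(cpu_caps["cpu_a"], gpu_caps[resolution])
    fps_b = delivered_fps(cpu_caps["cpu_b"], gpu_caps[resolution])
    return (fps_a / fps_b - 1.0) * 100.0


for resolution in gpu_caps:
    print(f"{resolution}: cpu_a leads by {cpu_gap_percent(resolution):.1f}%")
```

Under these made-up numbers the gap between the two CPUs only shows up at 1080p, where the GPU ceiling sits above both CPU ceilings. At 1440p and 4K the GPU is the limit and both CPUs deliver the same frame rate. Real games are messier than a single `min()`, so treat this as an intuition aid rather than a prediction.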


- Joined

- Apr 30, 2011

- Messages

- 2,653 (0.56/day)

- Location

- Greece

| Processor | AMD Ryzen 5 5600@80W |
|---|---|
| Motherboard | MSI B550 Tomahawk |
| Cooling | ZALMAN CNPS9X OPTIMA |
| Memory | 2*8GB PATRIOT PVS416G400C9K@3733MT_C16 |
| Video Card(s) | Sapphire Radeon RX 6750 XT Pulse 12GB |
| Storage | Sandisk SSD 128GB, Kingston A2000 NVMe 1TB, Samsung F1 1TB, WD Black 10TB |
| Display(s) | AOC 27G2U/BK IPS 144Hz |
| Case | SHARKOON M25-W 7.1 BLACK |
| Audio Device(s) | Realtek 7.1 onboard |
| Power Supply | Seasonic Core GC 500W |
| Mouse | Sharkoon SHARK Force Black |
| Keyboard | Trust GXT280 |
| Software | Win 7 Ultimate 64bit/Win 10 pro 64bit/Manjaro Linux |

So, Big Navi and Zen3 are both the best archs for gaming now (both for efficiency and performance up to 1440P), and bring even better results when combined. What a tech revolution from AMD. Kudos to them.

Better memory? It's better memory WITH Intel and better memory WITH AMD.

And, as I said, check EVERY review available online. Written or in video format. Every review says AMD beats Intel in gaming. I understand you can't stand this situation, but this is the fact.

Then link actual 4K results where AMD beats top-of-the-line Intel CPUs in gaming. OR even the 9900K.

- Joined

- Jul 8, 2019

- Messages

- 169 (0.10/day)

Wonder how AMD stock will fare today. That's an important question: whether these GPUs are considered a boom or a bust by the market.

- Joined

- Apr 21, 2010

- Messages

- 562 (0.11/day)

| System Name | Home PC |
|---|---|
| Processor | Ryzen 5900X |
| Motherboard | Asus Prime X370 Pro |
| Cooling | Thermaltake Contac Silent 12 |
| Memory | 2x8gb F4-3200C16-8GVKB - 2x16gb F4-3200C16-16GVK |
| Video Card(s) | XFX RX480 GTR |
| Storage | Samsung SSD Evo 120GB -WD SN580 1TB - Toshiba 2TB HDWT720 - 1TB GIGABYTE GP-GSTFS31100TNTD |
| Display(s) | Cooler Master GA271 and AoC 931wx (19in, 1680x1050) |
| Case | Green Magnum Evo |
| Power Supply | Green 650UK Plus |
| Mouse | Green GM602-RGB ( copy of Aula F810 ) |
| Keyboard | Old 12 years FOCUS FK-8100 |

6800XT actually has overclocking headroom. That's why 50W, more like a 100W really, matter.

Nice try though.

On every forum, Nvidia people were focusing on perf/watt and screaming like missiles. Right now their guns are DLSS/RT.

Well, true, but AMD can do that too. Looking at the performance-per-watt chart, the difference between the RTX 3070 (best of Nvidia) and the RX 6800 (best of AMD) is large: 69% vs. 100%. So undervolting will not help them.

I don't know about the 3070, but the 3080 can go from 320W to 220W just by undervolting. Performance will decrease by 5% tops. This means Nvidia runs these chips well over their sweet spot.

But yeah, no doubt about it, amd has a clear advantage. Also, this efficiency allows them to overclock better and 10% better perf from a basic OC is very good. Quite sure it can reach 3090 performance via OC, which is awesome. Still, if you want RT in your games...I wouldn't buy a radeon card.
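For anyone who wants to put a number on the undervolting claim, here is the back-of-the-envelope arithmetic as a small Python sketch. It takes the figures from the post at face value (320W down to 220W, with performance dropping by the full 5%) and the function name `perf_per_watt_gain` is just an illustrative helper, not anything from a real tool.

```python
def perf_per_watt_gain(power_before_w: float,
                       power_after_w: float,
                       perf_retained: float) -> float:
    """Relative change in performance per watt after a power reduction.

    perf_retained is the fraction of the original performance that is
    kept, e.g. 0.95 for a 5% loss.
    """
    before = 1.0 / power_before_w           # normalized perf of 1.0 per watt
    after = perf_retained / power_after_w   # reduced perf at reduced power
    return after / before - 1.0


# Figures quoted in the post above, assuming the worst case of a 5% loss.
gain = perf_per_watt_gain(320.0, 220.0, 0.95)
print(f"Perf/W improvement: {gain:.1%}")
```

If those figures hold, the undervolted card would deliver roughly 38% more performance per watt, which is the point about the stock settings sitting well past the efficiency sweet spot. Whether a given card actually reaches 220W at a 5% loss depends on the individual chip.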