- Joined: Jan 2, 2009
- Messages: 1,858 (0.33/day)
- Location: Pittsburgh, PA

| System Name | Titan |
|---|---|
| Processor | AMD Ryzen™ 9 7950X3D |
| Motherboard | ASUS ROG Strix X670E-I Gaming WiFi |
| Cooling | ID-COOLING SE-207-XT Slim Snow |
| Memory | TEAMGROUP T-Force Delta RGB 2x16GB DDR5-6000 CL30 |
| Video Card(s) | ASRock Radeon RX 7900 XTX 24 GB GDDR6 (MBA) |
| Storage | 2TB Samsung 990 Pro NVMe |
| Display(s) | AOpen Fire Legend 24" (25XV2Q), Dough Spectrum One 27" (Glossy), LG C4 42" (OLED42C4PUA) |
| Case | ASUS Prime AP201 33L White |
| Audio Device(s) | Kanto Audio YU2 and SUB8 Desktop Speakers and Subwoofer, Cloud Alpha Wireless |
| Power Supply | Corsair SF1000L |
| Mouse | Logitech Pro Superlight (White), G303 Shroud Edition |
| Keyboard | Wooting 60HE / NuPhy Air75 v2 |
| VR HMD | Oculus Quest 2 128GB |
| Software | Windows 11 Pro 64-bit 23H2 Build 22631.3447 |

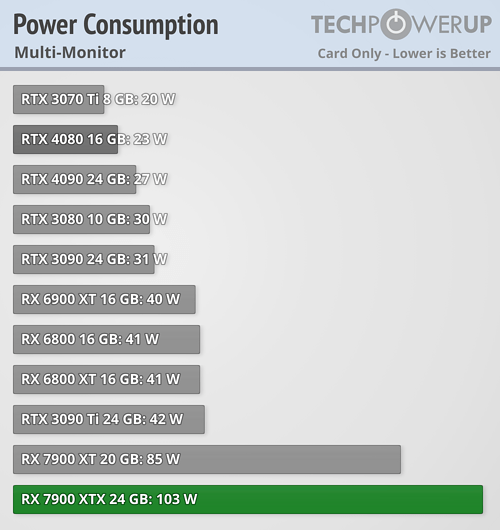

AMD needs to get its drivers to read the monitor's EDID properly so that idle clocks are on par with both NVIDIA and Intel. Using CRU (Custom Resolution Utility) is still the workaround for that issue.

Apart from that, Adrenalin is pretty much fine for what it is (aside from Enhanced Sync being useless nowadays).

Yes, of course I've reported this issue through their Bug Report tool. It's been 3 years actually and still no notable movement on this. (Find my 5700 XT thread)
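For anyone who wants to see what is actually in the EDID, here is a rough Python sketch that decodes the first detailed timing descriptor from a 128-byte EDID base block. It only follows the standard EDID 1.3/1.4 byte layout and says nothing about how AMD's driver parses it. The function name `parse_first_detailed_timing` is made up for this post, and it is my assumption (not something confirmed here) that the timing and blanking values are the part you end up editing in CRU.

```python
import struct

# Fixed 8-byte signature that starts every EDID base block.
EDID_HEADER = bytes([0x00, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0x00])


def parse_first_detailed_timing(edid: bytes) -> dict:
    """Decode the first 18-byte descriptor (offset 54) of an EDID base block.

    Hypothetical helper for illustration; raises ValueError on malformed input.
    """
    if len(edid) < 128:
        raise ValueError("EDID base block must be at least 128 bytes")
    block = edid[:128]
    if block[:8] != EDID_HEADER:
        raise ValueError("bad EDID header")
    # All 128 bytes of the base block must sum to 0 modulo 256.
    if sum(block) % 256 != 0:
        raise ValueError("bad EDID checksum")

    d = block[54:72]
    # Pixel clock is stored little-endian in units of 10 kHz.
    pixel_clock_hz = struct.unpack_from("<H", d, 0)[0] * 10_000
    if pixel_clock_hz == 0:
        raise ValueError("first descriptor is not a detailed timing")

    # Each value is 12 bits: low 8 bits in one byte, high 4 bits in a shared nibble.
    h_active = d[2] | ((d[4] & 0xF0) << 4)
    h_blank = d[3] | ((d[4] & 0x0F) << 8)
    v_active = d[5] | ((d[7] & 0xF0) << 4)
    v_blank = d[6] | ((d[7] & 0x0F) << 8)

    h_total = h_active + h_blank
    v_total = v_active + v_blank
    if h_total == 0 or v_total == 0:
        raise ValueError("descriptor has zero total size")

    return {
        "pixel_clock_hz": pixel_clock_hz,
        "h_active": h_active,
        "h_blank": h_blank,
        "v_active": v_active,
        "v_blank": v_blank,
        "refresh_hz": pixel_clock_hz / (h_total * v_total),
    }
```

Feed it the raw bytes of an EDID dump and compare `v_blank` and `refresh_hz` before and after a CRU edit to see what changed.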
