Aquinus



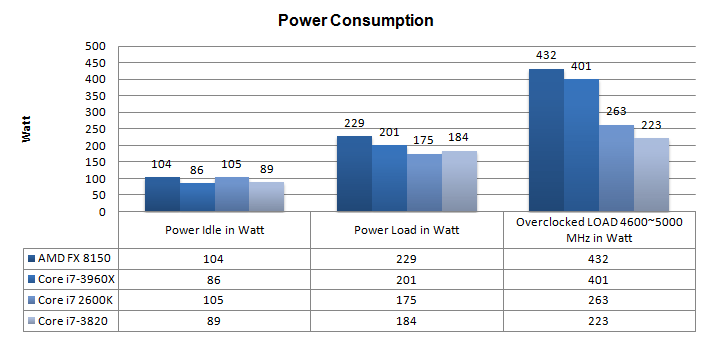

> This got me intrigued, because in every review I looked at, the i7-3820 seems to consume more power than the old 940.
>
> It's understandable that the 940 consumes, what, around 10-15 W more than the 945? But even if we add that up, there would still be a non-negligible gap to the i7-3820, so I'm curious where you got that 80 W difference from.

Okay, first of all, you can't just pull screenshots from anywhere and expect them to show what you think they show. If you read what they were actually doing:

> For our overall system load test, we ran Prime 95 In-place large FFTs on all available threads for 15 minutes, while simultaneously loading the GPU with OCCT v3.1.0 GPU:OCCT stress test at 1680x1050@60Hz in full screen mode.

Also, are those overclocked power draws? No, I don't think so. Those are stock. And consider for a moment that SB-E idles like a champ even when overclocked.

So okay, if they did the same amount of work, they'd be about the same. Oh wait, how much slower is the 945 than the 3820 again? Look at the numbers: most of the benchmarks in the review you took that screenshot from show the 3820 to be twice as fast as the 945. So let's assume you have both CPUs, and the 3820 finishes the same job in half the time because it does twice as much work in the same amount of time (more or less, but on average I'd say that's about right).

So let's assume we record over a two-day window where the 3820 is loaded for 0.75 days instead of the 1.5 days the 945 would need. That's the 3820 running at 166 watts for 0.75 days (124.5 watt-days) plus 1.25 days of idle at 64 watts (80 watt-days), for a total of 204.5 watt-days.

Take the 945, loaded for twice as long (1.5 days) and idle for only 0.5 days. At 173 watts that's 259.5 watt-days under load, plus 0.5 days of idle at 79 watts (39.5 watt-days), for a total of 299 watt-days (~300).

So there's the actual difference in energy used. If the 945 uses more energy, subtracting the 3820's total from the 945's should give a positive number:

299 watt-days - 204.5 watt-days = 94.5 watt-days more for the 945, for the same workload recorded over the same two-day window.
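The arithmetic above can be checked with a few lines of Python. This is a minimal sketch that assumes the stock figures quoted in the post (166 W load / 64 W idle for the 3820, 173 W load / 79 W idle for the 945), the rough 2x speed ratio, and a two-day window; the function and variable names are hypothetical, chosen only for illustration. Watts multiplied by days gives watt-days, so multiplying by 24 converts to watt-hours.

```python
# Stock load/idle system power figures quoted in the post (watts).
LOAD_W = {"3820": 166, "945": 173}
IDLE_W = {"3820": 64, "945": 79}

WINDOW_DAYS = 2.0   # observation window: 0.75 + 1.25 = 1.5 + 0.5 days
JOB_DAYS_945 = 1.5  # time the 945 needs for the workload
SPEEDUP_3820 = 2.0  # assumption from the post: the 3820 is roughly twice as fast


def energy_watt_days(load_w, idle_w, load_days, window_days):
    """Energy over the window: load power while busy, idle power the rest of the time."""
    idle_days = window_days - load_days
    return load_w * load_days + idle_w * idle_days


# The faster CPU finishes the same job in proportionally less time.
job_days = {"945": JOB_DAYS_945, "3820": JOB_DAYS_945 / SPEEDUP_3820}

totals = {
    cpu: energy_watt_days(LOAD_W[cpu], IDLE_W[cpu], job_days[cpu], WINDOW_DAYS)
    for cpu in LOAD_W
}
diff = totals["945"] - totals["3820"]

for cpu, wd in totals.items():
    # watt-days * 24 h/day = watt-hours; / 1000 = kWh
    print(f"{cpu}: {wd:.1f} W*day ({wd * 24 / 1000:.2f} kWh)")
print(f"945 minus 3820: {diff:.1f} W*day ({diff * 24 / 1000:.2f} kWh)")
```

Running it gives 204.5 watt-days for the 3820 and 299.0 for the 945, a 94.5 watt-day gap (about 2.27 kWh). Plugging in other reviews' figures, or overclocked draws, only means changing the two dictionaries.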

Now, that's the difference calculated from Hardware Canucks' numbers. Hilbert Hagedoorn would disagree with the 3820 power consumption figures there. It doesn't help that they're also stressing the GPU, which has nothing to do with CPU power draw when the CPU is already maxed out.

What they leave out is what happens when you overclock the 945: it eats power much like the 8150 does in Hilbert's graph. Not as badly, but it gets up there.

So I'm not only talking about overclocked machines: my point about saving power holds true even at stock speeds, and the difference only becomes more apparent the more you overclock.

I thank you for the challenge, but a little more research might be in order before making such claims.
