Raevenlord

News Editor

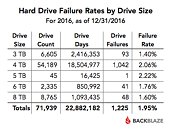

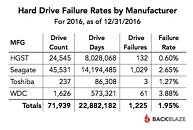

Backblaze has just released its HDD failure-rate statistics, updated with data for Q4 2016 and a full-year analysis. These 2016 results join the statistics the company has been collecting and collating since April 2013, and shed some light on the most and least reliable manufacturers. A total of 1,225 drives failed in 2016, for an annualized failure rate of just 1.95 percent: an improvement over 2015's 2.47 percent, and miles below the 6.39 percent that hit the garbage bin in 2014.
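Why isn't the 2016 figure simply 1,225 failures divided by the 71,939 drives in service? The sketch below assumes Backblaze computes its rate as an annualized failure rate, i.e. failures per drive-year of operation (drive-days / 365), rather than per drive counted at year end. The helper names `annualized_failure_rate` and `implied_drive_years` are hypothetical, for illustration only.

```python
def annualized_failure_rate(failures: int, drive_days: float) -> float:
    """Failures per drive-year, as a percentage (assumed Backblaze-style AFR)."""
    if drive_days <= 0:
        raise ValueError("drive_days must be positive")
    drive_years = drive_days / 365
    return failures / drive_years * 100


def implied_drive_years(failures: int, afr_percent: float) -> float:
    """Invert the AFR formula: exposure (in drive-years) implied by a count and a rate."""
    return failures / (afr_percent / 100)


# Figures quoted in the article for 2016.
failures_2016 = 1_225
drives_2016 = 71_939

# Naive ratio: failures divided by the end-of-year drive count.
naive_rate = failures_2016 / drives_2016 * 100
print(f"naive rate: {naive_rate:.2f}%")  # 1.70%

# Exposure implied if 1.95% is an annualized rate (about 62,800 drive-years).
exposure = implied_drive_years(failures_2016, 1.95)
print(f"implied exposure: {exposure:,.0f} drive-years")
```

Under that assumption, the gap between the naive 1.70% and the reported 1.95% would reflect drives that were added during the year and so contributed fewer than 365 drive-days each.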

Organizing 2016's failure rates by drive size, independent of manufacturer, shows that 3 TB hard drives were the most reliable (1.40% failure rate) and 5 TB hard drives the least reliable (2.22%). Organized by manufacturer, HGST, which accounts for 34% (24,545) of the 71,939 drives in service, claims the reliability crown with a measly 0.60% failure rate. WDC brings up the rear with an average failure rate of 3.88%, while also being one of the least represented manufacturers, with only 1,626 of its HDDs in use.
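The fleet-share and best/worst figures above are simple arithmetic over the published numbers. A minimal sketch, using only the values quoted in this article (the other drive sizes and manufacturers in Backblaze's tables are deliberately left out, so the min/max here is illustrative rather than a full ranking):

```python
# Only the 2016 figures quoted in the article; other sizes/manufacturers omitted.
failure_rate_by_size = {"3 TB": 1.40, "5 TB": 2.22}
failure_rate_by_maker = {"HGST": 0.60, "WDC": 3.88}

hgst_drives = 24_545
total_drives = 71_939

# HGST's share of the total fleet, as a percentage.
share = hgst_drives / total_drives * 100
print(f"HGST share of fleet: {share:.1f}%")  # 34.1%

# Lowest and highest failure rates among the quoted entries.
best_size = min(failure_rate_by_size, key=failure_rate_by_size.get)
worst_maker = max(failure_rate_by_maker, key=failure_rate_by_maker.get)
print(best_size, worst_maker)  # 3 TB WDC
```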

View at TechPowerUp Main Site