- Joined

- Aug 21, 2015

- Messages

- 1,890 (0.53/day)

- Location

- North Dakota

| System Name | Office |

|---|---|

| Processor | Core i7 10700K |

| Motherboard | Gigabyte Z590 Aorus Ultra |

| Cooling | be quiet! Shadow Rock LP |

| Memory | 16GB Patriot Viper Steel DDR4-3200 |

| Video Card(s) | Intel ARC A750 |

| Storage | PNY CS1030 250GB, Crucial MX500 2TB |

| Display(s) | Dell S2719DGF |

| Case | Fractal Define 7 Compact |

| Power Supply | EVGA 550 G3 |

| Mouse | Logitech M705 Marathon |

| Keyboard | Logitech G410 |

| Software | Windows 10 Pro 22H2 |

The hot-button (ha!) issue of the moment is melting 12VHPWR adapters provided with RTX 4090 graphics cards. Many of us (hi!) downplayed the trouble to begin with, chalking the furor up to new-launch hype and anti-NV sentiment. Turns out there is a real issue, and I'd like to discuss it specifically, and hopefully rationally. Igor's Lab has an excellent teardown of the connector, and covers at a top level what the problems with it are. Please read that first.

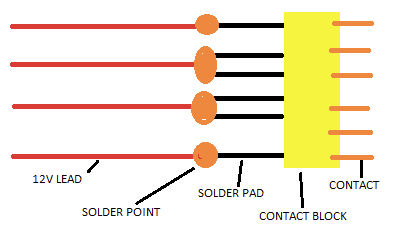

I'd like to dive a bit further into why the connector is designed the way it is, and fill gaps in my own understanding, of which there are many. I don't have any credentials in electronics engineering, and am in fact an EE dropout. What I do have is a reasonable grasp of DC circuit fundamentals (P=I*V, V=I*R, P=I^2*R, etc.) and a strong analytical bent. Anyway, based on Igor's article, I drew a crude block diagram in MS Paint of how Nvidia's PCIe-to-12VHPWR connector is configured, and it doesn't make a lot of sense at first blush:

The questions that pose themselves include:

1) Why 4-14ga rather than 6-16ga, including two 1-into-2 nodes?

2) Why a monolithic contact array instead of individual contacts?

To the 14ga question, there are some conflicting pro/con factors. 4-14 can carry as much or more current than 6-16. The drawback is that it is thicker, and thus necessarily less flexible. One of my hypotheses is that the extra bending resistance encountered when routing cabling is putting more strain on weak solder joints than 16ga would, increasing the risk of broken joints. The existence of only four 12V leads helps answer a question I didn't list above, which is why the leads are soldered rather than crimped. Well, because you can't crimp one wire onto two contacts, and soldering is also faster and cheaper to implement. My other hypothesis is that, given the adapter draws from four 8-pins, combining those four groups of three wires (twice, once for hot and once for ground) into six leads rather than four is unnecessarily complicated when it's all going to one node anyway.
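As a rough sanity check on the copper claim, here is a minimal Python sketch comparing four 14ga conductors against six 16ga. The AWG diameter formula is the standard one; the 600 W load at 12 V, the copper resistivity value, and the assumption that current splits evenly between wires are mine, not from Igor's article.

```python
import math

COPPER_RESISTIVITY = 1.68e-8  # ohm*m at roughly 20 C (assumed textbook value)
TOTAL_CURRENT_A = 600 / 12    # assumed 600 W load at 12 V -> 50 A


def awg_area_mm2(gauge: int) -> float:
    """Cross-sectional area of a solid AWG conductor in mm^2."""
    diameter_mm = 0.127 * 92 ** ((36 - gauge) / 39)
    return math.pi / 4 * diameter_mm ** 2


def bundle_stats(wire_count: int, gauge: int) -> dict:
    """Total copper area and per-wire heating, assuming even current sharing."""
    area_mm2 = awg_area_mm2(gauge)
    ohm_per_m = COPPER_RESISTIVITY / (area_mm2 * 1e-6)
    amps_per_wire = TOTAL_CURRENT_A / wire_count
    return {
        "total_area_mm2": wire_count * area_mm2,
        "amps_per_wire": amps_per_wire,
        "watts_per_m_per_wire": amps_per_wire ** 2 * ohm_per_m,
    }


four_14 = bundle_stats(4, 14)
six_16 = bundle_stats(6, 16)
for label, stats in (("4 x 14ga", four_14), ("6 x 16ga", six_16)):
    print(label, {key: round(value, 2) for key, value in stats.items()})
```

Under these assumptions the 4-14 bundle has slightly more total copper (roughly 8.3 mm² against 7.9 mm²), but each 14ga wire carries 12.5 A instead of about 8.3 A, so any single bad joint has more current riding on it.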

The contact array/block is honestly my biggest question. I originally typed up something in this post about the GPU not knowing that a connection had been lost when one or more 12V leads break, since all current is running through the same node. I deleted it due to a lack of knowledge of how things work on the GPU side. Igor posted a block diagram of the connector that indicates that all 12V leads run to the same node by design. Why would this be? If a graphics card isn't treating each 12V connection as an individual circuit but all together as a single node, what's the point of a 12-pin connector? It would be just (or almost) as space-efficient to implement a two- or four-pin with nice robust contacts. Two pins is maybe optimistic because it would require 50A capacity per contact, but 25A per contact for a four-pin seems like it would be doable. If, by contrast, those separate circuits are meaningful, why run all the supply circuits through a single node?
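To put numbers on the pin-count question, here is a minimal sketch of per-conductor current. It assumes a 600 W load at 12 V (50 A total) and perfectly even sharing on the common node, which real contacts and solder joints will not deliver. The function name and the broken-lead scenario are illustrative only.

```python
TOTAL_CURRENT_A = 600 / 12  # assumed 600 W load at 12 V -> 50 A


def amps_per_conductor(live_12v_conductors: int) -> float:
    """Current through each 12V conductor if the load splits evenly."""
    if live_12v_conductors < 1:
        raise ValueError("need at least one live 12V conductor")
    return TOTAL_CURRENT_A / live_12v_conductors


# Hypothetical connector layouts: half the pins are 12V, half are ground.
for total_pins in (2, 4, 12):
    amps = amps_per_conductor(total_pins // 2)
    print(f"{total_pins}-pin: {amps:.1f} A per 12V contact")

# Shared-node scenario: the adapter's four 12V leads break one by one.
# The card still sees 12V on the node, so nothing signals the fault.
for surviving in range(4, 0, -1):
    amps = amps_per_conductor(surviving)
    print(f"{surviving} of 4 leads intact: {amps:.1f} A per lead")
```

With everything tied to one node, each broken lead silently pushes its share onto the survivors: 12.5 A per lead with all four intact, 25 A with two, and the full 50 A if only one is left.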

This is meant to be a technical discussion. Do not flame, complain, troll, or hijack. Report button will be employed without hesitation or remorse.
