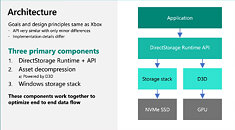

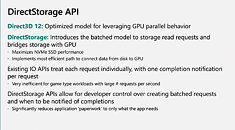

In a developer presentation on Tuesday, Microsoft confirmed that the DirectStorage API, designed to speed up the storage sub-system, is compatible even with NVMe SSDs that use the PCI-Express Gen 3 host interface. It also confirmed that all GPUs compatible with DirectX 12 support the feature. A feature making its way to the PC from consoles, DirectStorage enables the GPU to directly access an NVMe storage device, paving the way for GPU-accelerated decompression of game assets.

This reduces latency at the storage sub-system level and offloads work from the CPU. Any DirectX 12-compatible GPU technically supports DirectStorage, according to Microsoft. The company, however, recommends DirectX 12 Ultimate GPUs "for the best experience." GPU-accelerated decompression of game assets is handled via compute shaders. In addition to reducing latency, DirectStorage is said to accelerate the Sampler Feedback feature in DirectX 12 Ultimate.
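To put the Gen 3 compatibility in perspective, here is a rough back-of-the-envelope sketch of how compressed assets stretch a PCI-Express Gen 3 x4 link. The link figures (8 GT/s per lane, 128b/130b encoding) are standard for Gen 3; the 2:1 compression ratio and the decompressor throughput are purely assumed values for illustration and do not come from Microsoft's presentation. The function names are hypothetical.

```python
# Illustrative model only. The compression ratio and decompressor throughput
# used below are ASSUMED values, not figures from Microsoft's presentation.

def pcie_bandwidth_gbps(gt_per_s: float, lanes: int, encoding: float) -> float:
    """Link bandwidth in GB/s after line encoding, before protocol overhead."""
    return gt_per_s * encoding * lanes / 8  # 8 bits per byte


def effective_asset_rate(link_gbps: float,
                         compression_ratio: float,
                         decompress_gbps: float) -> float:
    """Uncompressed asset data delivered per second, in GB/s.

    The link carries compressed data, so it can deliver up to
    link_gbps * compression_ratio of uncompressed data, capped by how
    fast the decompressor can produce output.
    """
    return min(link_gbps * compression_ratio, decompress_gbps)


# PCIe Gen 3: 8 GT/s per lane, 128b/130b encoding, x4 link (typical NVMe SSD).
gen3_x4 = pcie_bandwidth_gbps(8.0, 4, 128 / 130)
print(f"Gen 3 x4 link: {gen3_x4:.2f} GB/s")  # about 3.94 GB/s

# Assumed 2:1 compression with a decompressor fast enough not to bottleneck.
print(f"Effective rate: {effective_asset_rate(gen3_x4, 2.0, 100.0):.2f} GB/s")
```

Under these assumptions, the link moves roughly twice as much usable asset data per second as it would with uncompressed files, provided decompression keeps pace. That is the step the article says is handled on the GPU via compute shaders.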

More slides from the presentation follow.

View at TechPowerUp Main Site
