In 2009, full-power NTSC broadcasts ended in the US. If you wanted to watch anything over the air on an NTSC TV, you had to use an external ATSC tuner. They sucked. People put up with them for a while, but when they could, they just bought a new TV.

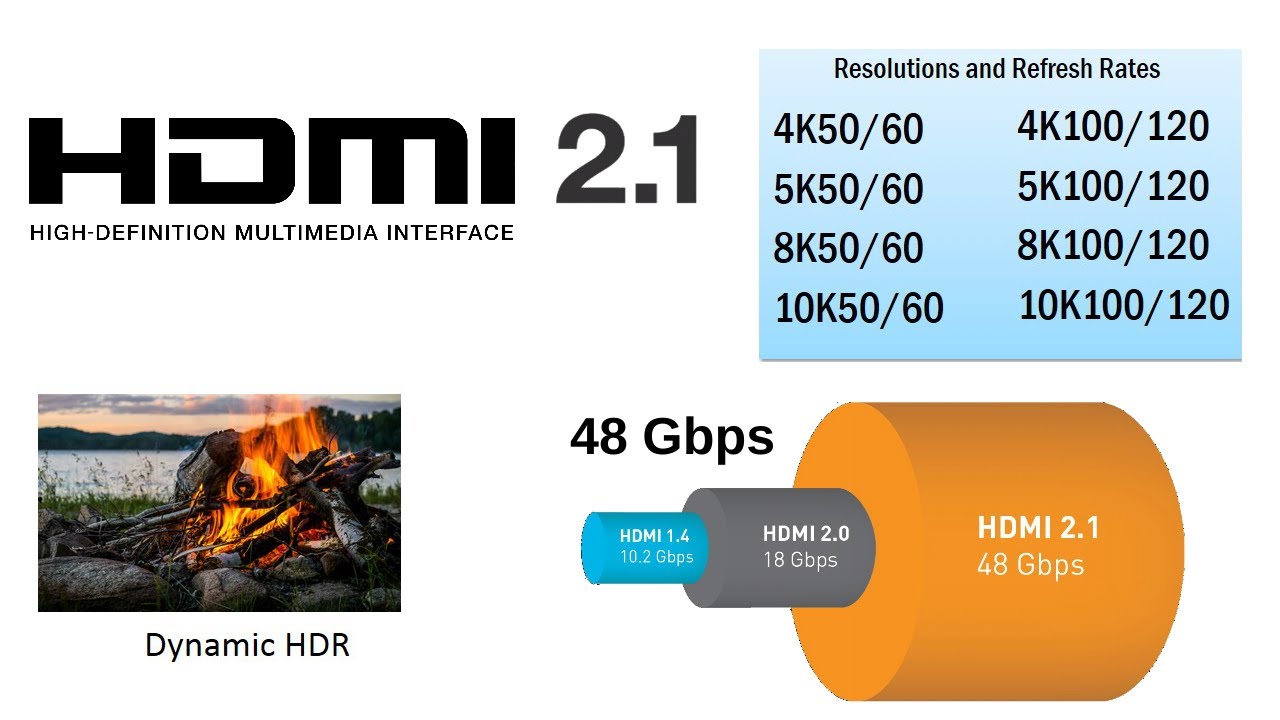

Now, 4K TVs are all over the place but 4K content is not. ATSC 3.0 is to ATSC 1.0 what ATSC was to NTSC. It will bring HEVC, 4K, and HDR to broadcast, making them as commonplace as MPEG-2, 720p, and 1080i are today. HDMI 2.0 was needed to carry that signal. HDMI 2.1? First it was black and white TV, then it was color, then it was DTV, then it was HDTV, now we're getting to 4K UHD, and HDMI 2.1 exists solely to begin the push to 8K UHD. Not only does everyone have to buy new TVs to support 8K UHD, broadcasters have to buy new cameras and new recording, editing, and transmission equipment, and people have to buy new receivers so their content isn't downscaled. We're talking billions of dollars, easily, and the cycle repeats about every decade or less.