While browsing Instagram, I came across a company offering a computer built on an AMD X670E motherboard. This motherboard has only one PCIe 4.0 x16 slot, which limits the number of GPUs that can be installed. However, the vendor has found a way around this limitation and runs up to 5 Nvidia A-GPUs from that single slot.

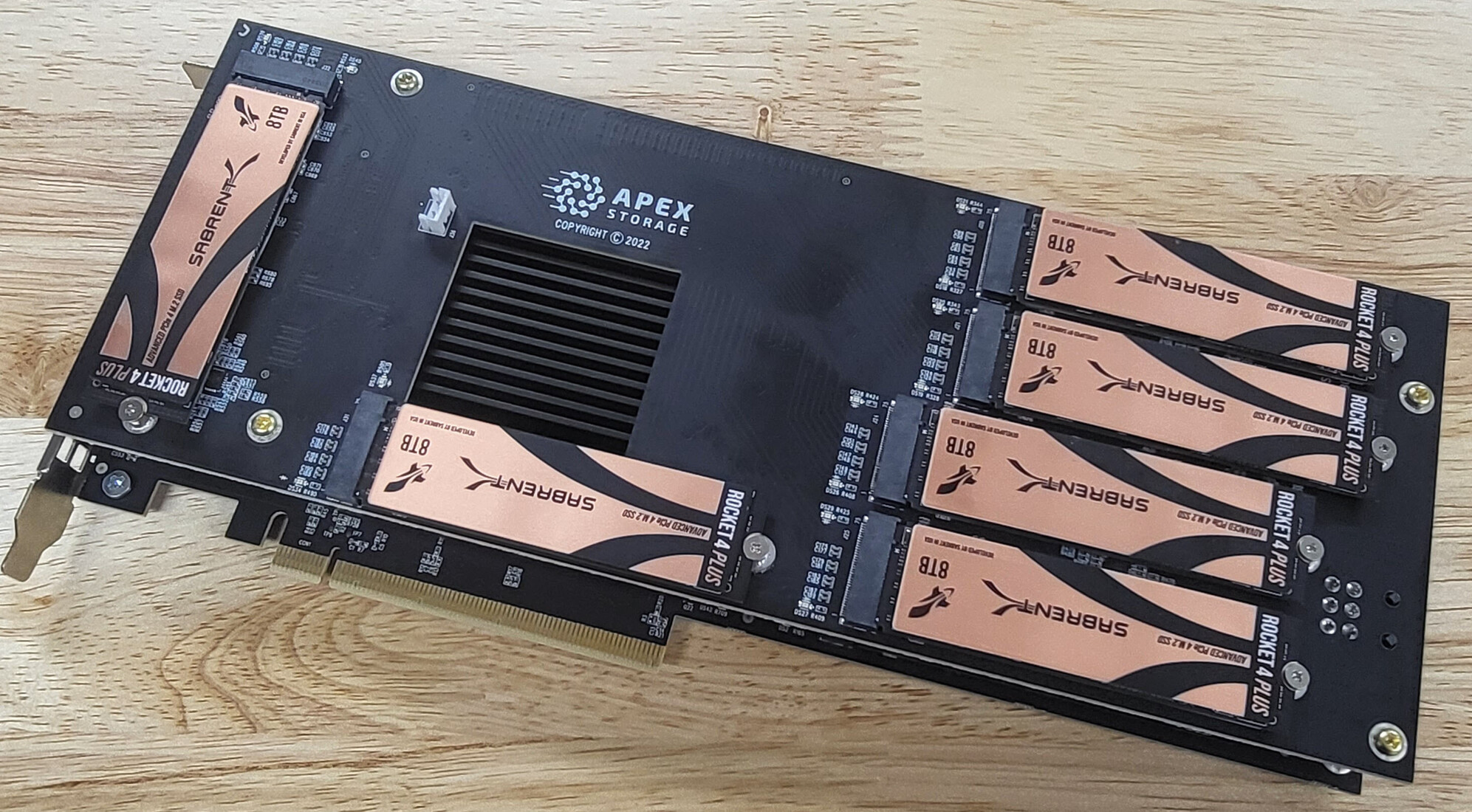

Each GPU is connected through its own riser cable, and all of the risers plug into a specialized PCIe card that accepts every one of those cables. This setup lets the vendor operate all the cards from a single PCIe slot as if they were installed in multiple slots.

This is a significant advantage, as everyday AMD platforms cannot accommodate multiple GPUs at full speed because the motherboard has only a single PCIe 4.0 x16 slot. If a second slot is occupied alongside the first, the two slots drop to a dual PCIe 4.0 x8 configuration. To run multiple GPUs at a full PCIe 4.0 x16 each, a Xeon, Threadripper, or Epyc platform is necessary.
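To put rough numbers on the lane math above, here is a back-of-the-envelope sketch in Python. It uses the published PCIe 4.0 figures (16 GT/s per lane, 128b/130b encoding). The function names are my own, and the even split across 5 GPUs is purely an assumption: I don't know how the vendor's card actually divides the link, so treat the last number as a ceiling on sustained traffic when every GPU is busy at once, not as a measurement.

```python
# Theoretical PCIe 4.0 bandwidth, per direction, ignoring protocol overhead.
GT_PER_S = 16.0        # PCIe 4.0 transfer rate per lane (GT/s)
ENCODING = 128 / 130   # 128b/130b line encoding efficiency


def lane_gb_per_s():
    """Usable GB/s for one PCIe 4.0 lane, one direction."""
    return GT_PER_S * ENCODING / 8  # divide by 8 to go from bits to bytes


def link_gb_per_s(lanes):
    """Usable GB/s for a link of the given width (x4, x8, x16, ...)."""
    return lanes * lane_gb_per_s()


def shared_gb_per_s(upstream_lanes, gpus):
    """ASSUMPTION: every GPU transfers at once and the upstream link is
    shared evenly. Real hardware may split the link differently."""
    return link_gb_per_s(upstream_lanes) / gpus


if __name__ == "__main__":
    print(f"x16 slot:            {link_gb_per_s(16):.1f} GB/s")
    print(f"x8 slot (dual x8):   {link_gb_per_s(8):.1f} GB/s")
    print(f"x16 shared by 5 GPU: {shared_gb_per_s(16, 5):.1f} GB/s each")
```

If this arithmetic is right, five GPUs behind one x16 slot cannot all move data at full x16 speed at the same moment, which is part of why I am curious what "full access" means in the vendor's setup.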

I am intrigued by this setup and curious about the type of "PCIe card" that lets multiple GPUs connect to a single PCIe slot while still giving full access to, and full results from, each GPU. For an end user, this is an opportunity to get the most out of a PC without investing in processors that cost over $2000. With AMD's best consumer processor available at $800, multiple GPUs could be used to get optimal results, even on an ITX motherboard with only a single PCIe slot.

How does this work?

I really appreciate any help you can provide.
